Category:Scraping Basics

How Exactly Websites Catch Scrapers (7 detection techniques)

R&D Engineer

Web scrapers don’t browse like real users.

They move faster, act differently, and leave behind patterns that websites can spot.

But how exactly are they different and can websites really tell?

Let’s unpack what makes scrapers stand out, how detection works, and what you can do to avoid getting blocked when web scraping.

Starting with the basics:

How Web Scrapers Are Different From Real Users

Web scrapers behave nothing like you.

They move faster, follow predictable paths, and never touch a mouse. These subtle (and not-so-subtle) signals are what let websites tell humans and bots apart.

Let’s break down the most common giveaways:

Speed and Volume of Requests

No human reads 300 product pages in 60 seconds.

Bots do.

Scrapers can send hundreds of requests within seconds, far beyond what a real user could ever do manually.

It’s not just the speed; it’s also the lack of natural pauses between pages.

High-frequency requests like these are one of the easiest tells for automated behavior.

Consistent and Repetitive Navigation Patterns

People browse randomly. They bounce between links, skip around, and rarely follow a linear path.

Scrapers, on the other hand, are structured.

If a client hits every page on a site in order (like one request per second, in perfect rhythm) that’s obviously not a person; that’s a bot.

This kind of regularity is a red flag for many detection systems.

Interaction Signals

Human activity generates noise like mouse movements, scrolls, taps, and keystrokes.

Bots don’t, unless customized to do so.

Simple HTTP scrapers (and even some headless browsers) don’t trigger any interaction events.

No scrolling. No clicking. No hover states. Just cold, clean requests.

It's a big difference between you taking a few seconds to guide your mouse cursor to the corner of a button and click it and a bot's cursor moving to the exact middle of a button in 0.1 seconds.

Technical Fingerprints in Headers and Environment

Your browser reveals a lot:

- which OS you're on,

- what languages you accept,

- whether JavaScript is enabled,

- which plugins are installed.

Scrapers often have weird or missing details in these headers.

A bot might use an outdated or default user agent, forget to load images or fonts, or return identical fingerprints across sessions.

Or, in a better case scenario, a bot might have its user agent, fingerprints, and rotating proxies set up to mimic an actual user; but it might be using mobile proxies with desktop headers, which is a very obvious inconsistency.

So as you can see, it's not very hard to tell a bot from a real user when you look into it, but can websites do that?

Can Web Scraping Be Detected?

Yes, web scraping can easily be detected by websites. Servers track IP addresses, traffic patterns, catch high request rates, and analyze headers or browser fingerprints. High-security detection systems can detect and block you even if there's a slight inconsistency in your bot's appearance.

But detection doesn’t mean defeat.

Websites aren’t blocking scrapers "just because", they’re trying to protect their data, reduce server load, and stop abuse.

And while some defenses are aggressive, most follow a clear logic. If something moves too fast, looks too perfect, or skips over things real users would load, it starts raising flags.

The trick is to blend in.

That means thinking less like a bot and more like a browser. Slow things down, randomize behavior, and give your scraper a believable fingerprint.

If you can mimic how real people browse, you’re far more likely to stay undetected.

So you need to learn how exactly websites detects bots to stay ahead in the game.

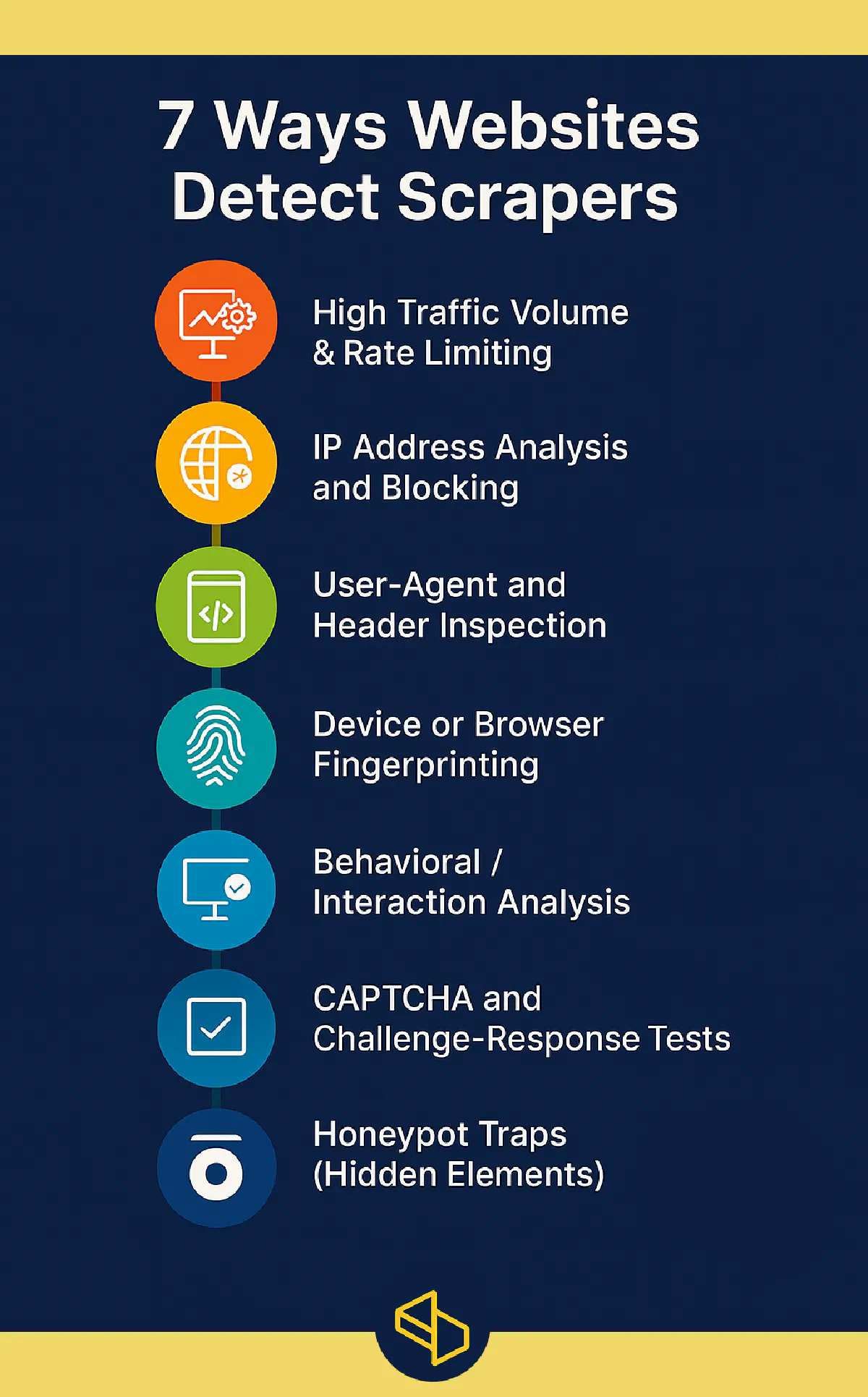

Here are 7 ways websites detect scrapers and exactly what you can do to get around each one.

7 Ways Websites Detect Scrapers (and How to Bypass Them)

Even if your scraper is disguised as a browser, websites aren’t easily fooled.

Modern detection systems use layered techniques; some simple, others surprisingly advanced, to spot automation.

But for every detection method, there’s a countermeasure.

1. High Traffic Volume & Rate Limiting

Scrapers are fast. Too fast for their own good.

And they have to be. If you scraped at the speed it takes YOU to browse, you would never be able to scrape thousands of pages in a day.

Although speed is an upside of web scraping in general, it's the MOST obvious way websites detect bots.

When a site sees a single IP firing off dozens or hundreds of requests per minute, it knows something’s up. Real users don’t open 50 pages in 30 seconds.

That’s why most websites implement rate limiting.

They set a cap (like 10 or 20 requests per minute) and if you exceed it, your IP gets throttled, challenged, or outright blocked.

This method is great at stopping basic bots that try to scrape everything all at once.

🔑 How scrapers get around it:

By slowing down.

Adding randomized delays between requests (say, 2–7 seconds) makes traffic look more human. This is called throttling.

Advanced setups also rotate IP addresses using proxy networks so no single IP ever crosses the limit. The goal isn’t speed, it’s invisibility.

Here's an example code in Python that uses throttling and proxy rotation to avoid being flagged:

import requests

import time

import random

# Sample proxy list (usually much longer in real scenarios, or taps into a paid proxy pool with millions of IPs)

proxies = [

"http://proxy1.example.com:8000",

"http://proxy2.example.com:8000",

"http://proxy3.example.com:8000",

]

urls_to_scrape = [

"https://example.com/page1",

"https://example.com/page2",

"https://example.com/page3",

# ... more URLs

]

for url in urls_to_scrape:

proxy = {"http": random.choice(proxies)}

headers = {"User-Agent": "Mozilla/5.0"}

# Error handling so you know why you've been blocked if it happens.

try:

response = requests.get(url, headers=headers, proxies=proxy, timeout=10)

print(f"{url} - {response.status_code}")

except Exception as e:

print(f"Request failed: {e}")

time.sleep(random.uniform(2, 7)) # random delay between 2–7 secondsFree and cheap rotating proxies are listed here.

2. IP Address Analysis and Blocking

Your IP address says a lot about you.

Websites monitor where requests are coming from, and if a single IP sends too many, too often, or at odd hours, it gets flagged.

Some systems go a step further and check your IP’s reputation; if it belongs to a cloud datacenter or a known proxy provider, it might be blocked instantly.

They also block traffic by country, or deny access to anonymizers and VPNs.

🔑 How scrapers get around it

By using residential rotating proxies.

These are real IPs from consumer ISPs, not cloud providers. They blend in with normal traffic and bypass IP reputation checks.

Smart scrapers or proxy providers also monitor response codes and if an IP gets blocked, they swap it out.

3. User-Agent and Header Inspection

Every time you visit a site, your browser tells the server who it is, using a User-Agent string.

Scrapers often forget this step or use the default one from their library.

That’s a dead giveaway.

Sites also check other headers like Accept-Language, Referer, and Cookie. If your User-Agent says “Chrome on Windows” but your headers don’t match… you're busted.

🔑 How scrapers get around it

For this bypass, spoof real browser headers.

Instead of sending one static User-Agent, they use a rotating pool of real ones.

Same for other headers like adding Accept-Language: en-US,en;q=0.9 or realistic Referer values. The goal is consistency and variety.

Here's an example code for Python web scraping that spoofs real browser headers:

import requests

import random

user_agents = [

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/120.0",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 13_2) AppleWebKit/605.1.15 Safari/605.1.15",

"Mozilla/5.0 (Linux; Android 11) AppleWebKit/537.36 Chrome/96.0",

]

headers = {

"User-Agent": random.choice(user_agents),

"Accept-Language": "en-US,en;q=0.9",

"Referer": "https://google.com",

"Accept": "text/html,application/xhtml+xml",

}

response = requests.get("https://example.com", headers=headers)

print("Status:", response.status_code)4. Device or Browser Fingerprinting

Headers aren’t the only way a site knows who you are.

Modern detection tools build a fingerprint of your browser and device; everything from your screen size and time zone to the order of your TLS ciphers, installed fonts, and how your browser draws a canvas.

Headless browsers like Selenium or Puppeteer, which are used for most scraping operations, often leave subtle traces.

They might misreport graphics properties or expose automation flags that give you away. And once your fingerprint is flagged, it can be blocked even if your IP and headers look clean.

🔑 How scrapers get around it

By masking or spoofing fingerprints.

This can take a lot of time done manually, so tools like Puppeteer Extra Stealth or patched Selenium drivers hide automation traits and make the browser behave more like a real one.

5. Behavioral / Interaction Analysis

It’s not just what you send, it’s how you behave too.

Websites run scripts that monitor user behavior in real time.

Do you move your mouse?

Scroll gradually?

Click around like a human would?

Bots tend to skip all of that and load a page and grab the content instantly.

Anti-bot systems track these patterns. If your scraper never scrolls, clicks, or pauses, it’ll look too perfect.

Real users are messy; bots aren’t.

🔑 How scrapers get around it

By simulating human behavior.

For this, you'll need to use a headless browser, which can move the mouse, scroll the page, and even inject typing delays.

The goal isn’t to fake perfection; it’s to add just enough randomness to seem human.

Here's a basic way to implement this when web scraping using Playwright and Python:

from playwright.sync_api import sync_playwright

import time

import random

with sync_playwright() as p:

browser = p.chromium.launch(headless=True)

context = browser.new_context()

page = context.new_page()

page.goto("https://example.com")

# Simulate scrolling

for _ in range(3):

page.mouse.wheel(0, random.randint(200, 600))

time.sleep(random.uniform(1.5, 3.5))

# Simulate mouse movement

page.mouse.move(100, 100)

time.sleep(1)

page.mouse.move(200, 300)

time.sleep(2)

print("Page title:", page.title())

browser.close()6. CAPTCHA and Challenge-Response Tests

When websites get suspicious, they don’t block you right away, they test you.

This way, real users who look or acted suspicious somehow have a chance to still access the website after confirming they're not bots.

Yes, I'm talking about CAPTCHA.

Those “click all the traffic lights” puzzles or the familiar “I’m not a robot” checkbox.

More advanced sites like Google or Cloudflare use invisible JS challenges that look at your browser environment or require proof-of-work to pass.

If your scraper doesn’t see or respond to the test, it’s blocked.

🔑 How scrapers get around it

Some simply avoid pages with CAPTCHAs.

Others use CAPTCHA-solving services, either AI-based tools or human-powered services that solve challenges in real time.

These solutions aren’t perfect, but they work.

Just know they come with extra cost and complexity.

7. Honeypot Traps (Hidden Elements)

If you're being detected through a honeypot trap, you're not the hunter anymore, but the hunted.

Honeypots are hidden fields or links embedded in web pages and they're things a human would never see or interact with.

A real user ignores them completely but a careless bot that fills out every field or clicks every link?

Caught. 🎯

Some forms include invisible <input> fields that must be left blank. Others hide links that act like tripwires in analytics.

If your scraper touches them, it marks you as a bot.

🔑 How scrapers get around it

They get smarter about what to ignore.

Honeypots often have suspicious names (homepage_url, hidden_input) or CSS that hides them (display:none).

A good scraper inspects the DOM carefully, only interacts with visible and expected elements, and skips anything that smells like bait.

Here's a scraper example that is instructed to detect any hidden input fields:

from bs4 import BeautifulSoup

import requests

url = "https://example.com/form"

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

form = soup.find("form")

inputs = form.find_all("input")

visible_fields = {}

for input_tag in inputs:

if input_tag.get("type") in ["hidden", "submit"]:

continue

if input_tag.get("style") == "display:none":

continue

name = input_tag.get("name")

if name and "url" not in name: # simple honeypot keyword check

visible_fields[name] = "example"

print("Safe form fields:", visible_fields)Conclusion

Protected sites, which are the crushing majority of where insightful data is, are watching everything.

Your IP, your headers, your clicks, your browser fingerprint, even how you scroll.

But none of this means scraping is impossible; it just means you need better tools and smarter strategies.

We've built Scrape.do for this exact challenge.

It helps you bypass all these detection layers and MORE with:

- Rotating residential proxies to avoid IP bans

- Dynamic header and fingerprint management

- JavaScript rendering and CAPTCHA handling

- Reliable performance across hard-to-scrape sites

Get started for free with 1,000 credits and see how effortless scraping can be.

R&D Engineer