How to Scrape Instagram Profiles, Posts, and Comments

Disclaimer: All data scraped in this article was publicly accessible on Instagram as of May 2025; Instagram’s policies and public data accessibility may change over time.

Instagram is a goldmine of actionable business intelligence.

From spotting trending products before they explode to instantly measuring public sentiment about a brand, scraping Instagram puts powerful insights at your fingertips.

In this comprehensive guide, you’ll learn precisely how to scrape public Instagram data using Python and Scrape.do, avoiding common pitfalls, legal risks, and technical challenges along the way.

⚙ Find ready-to-use code for scraping IG on GitHub.

Let’s go:

Why Scrape Instagram?

Instagram isn’t just a social media app; it’s arguably the richest public data source on the web.

With over a billion active users, Instagram generates endless streams of valuable data every second. Tapping into this manually is straight-up impossible.

Scraping Instagram lets you quickly transform this flood of information into actionable insights.

Most common use cases are:

Product Discovery

Quickly identify emerging product trends, consumer interests, and niche markets before competitors notice them.

If you run an e-commerce or dropshipping business, this can be a steady source of product ideas and revenue.

Social Listening

Track trending hashtags, uncover viral content, and pinpoint influential profiles shaping public conversations.

This helps you act on trends before competitors and other influencers do, and earn more engagement.

Competitor Analysis

Monitor competitor engagement metrics, follower growth, content strategies, and promotional activities in real time.

Sentiment Analysis

Analyze comments instantly to gauge user sentiment towards brands, products, or events to make the right decisions based on qualitative data.

Although more than 90% of scraping operations on Instagram fall into one of these four use cases, the possibilities are endless and get more diverse each day.

Does Instagram Allow Scraping?

Instagram explicitly discourages scraping activities in its Terms of Service; however, there’s a crucial distinction to consider: Scraping public data (content you can see without logging in) generally falls within legal and ethical boundaries, while attempting to scrape private or login-protected data can quickly cross into prohibited territory.

So as long as you scrape without logging in, you’re mostly risk-free.

What’s Allowed to Scrape (No Login Required)

You can legally and ethically scrape the following types of data from Instagram because they’re publicly accessible:

- Public user profiles such as usernames, bios, follower counts, and profile images.

- Publicly visible posts and reels, including captions, images, and the number of preview likes shown.

- Top comments on public posts that appear without logging in or clicking “see more”.

- All locations in the “Location” feature and public posts that are tagged with a certain location.

- Posts connected to specific audio clips.

What’s Not Allowed (Login Required)

Because you need to log in to reach the following data, you will have accepted Instagram’s Terms of Service, which prohibit scraping, so collecting any of it can expose you to legal action:

- Full comment threads beyond the initially visible comments.

- Complete lists of followers or users someone follows.

- Detailed lists of users who liked specific posts.

- Stories, highlights, and any form of private content.

- Hashtag-specific content pages (which typically require authentication).

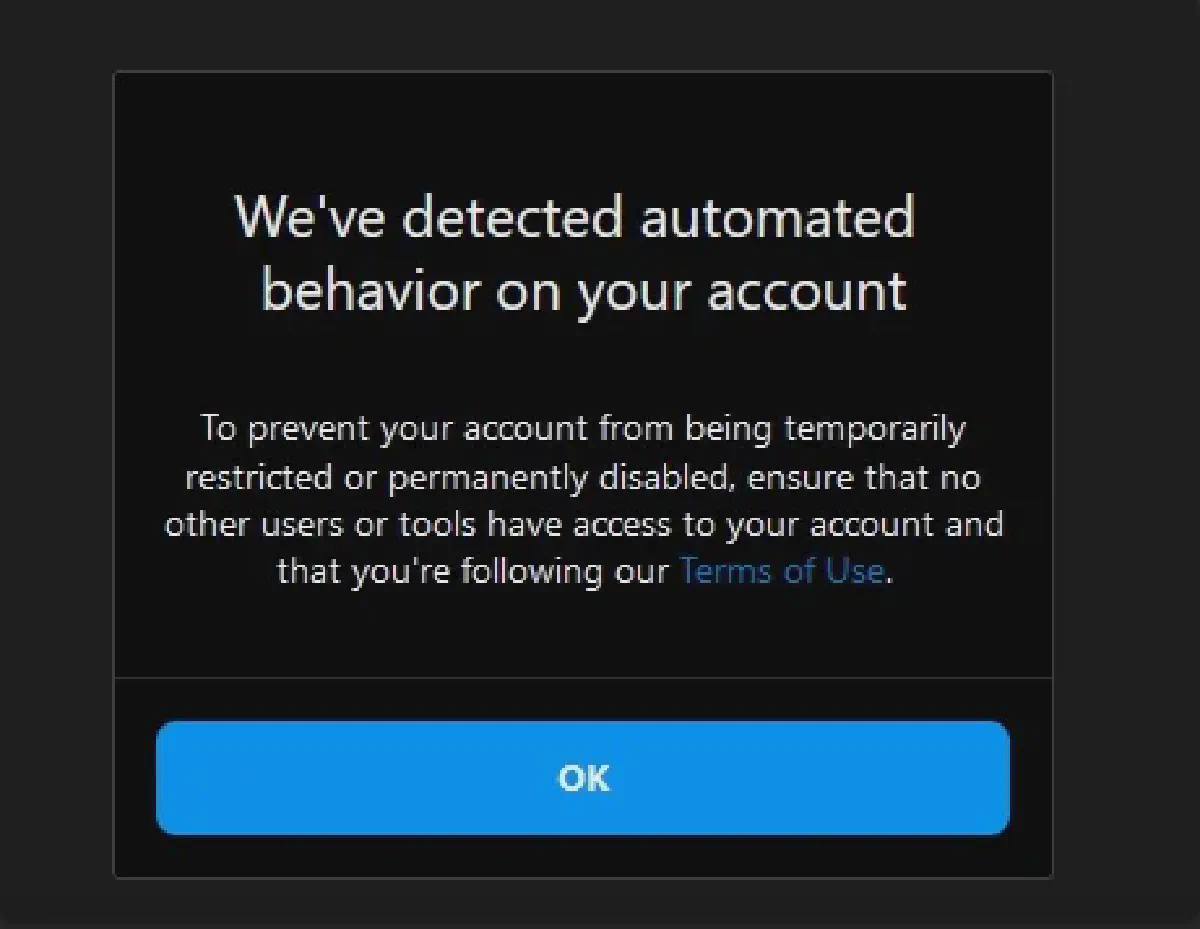

What Happens If You Scrape Data While Logged In?

If Instagram detects automated scraping while logged in, it issues clear warnings and may temporarily or permanently block your account.

Repeated violations can escalate quickly, resulting in permanent bans or even potential legal action.

💡 Some users attempt to bypass these restrictions by rotating multiple fake accounts to distribute scraping activities. While technically possible, this practice explicitly violates Instagram’s Terms of Service, poses ethical concerns, and carries significant risks. If you want to stay on the good side of the law when scraping, this is not a direction you should explore.

Getting Started: Setup and Tools

For this guide, we’ll use Python with the requests library (and its built-in JSON decoder) combined with Scrape.do, the most powerful scraping API.

While everything we’ll scrape here is publicly viewable, Instagram typically redirects automated visitors to the login page or blocks them after they browse for a short time without logging in.

This is known as a soft block; to avoid it, continuous user-agent rotation is essential, but that’s not where the blocks stop.

If you keep sending too many requests back-to-back, your IP will get blocked, cutting off your access to Instagram completely. In addition to user-agent rotation you’ll need proxy rotation, which is where Scrape.do comes in.

What is Scrape.do?

Scrape.do is a web scraping API that simplifies the scraping process by handling complex challenges automatically.

Basically, it’s your Swiss Army knife AND skeleton key when scraping the web:

- Automatic IP and header rotation to prevent blocks and bans.

- JavaScript rendering for scraping dynamic, interactive content.

- Built-in anti-bot detection bypass.

- The ability to interact with page elements and simulate user actions (e.g., scrolling, clicking).

And completely FREE to get started with 1000 monthly successful API credits.

Prerequisites

Before getting started, ensure you have the following ready:

- Sign up at Scrape.do and retrieve your API key from the dashboard.

- Install requests using pip:

pip install requests

With these installed and your Scrape.do token at the ready, you’re all set to start scraping:

Scraping Instagram Profiles: Profile Information and Top Posts

Instagram profiles hold valuable public data: usernames, bios, follower counts, and up to 12 recent posts. Whether you’re tracking influencers, monitoring brand engagement, or analyzing trends, scraping this data without logging in is completely possible using Scrape.do.

With a single request, you can basically analyze how popular a profile is and how its recent content has been performing, giving you a glimpse of their growth trend.

In this section, we’ll first scrape basic profile information and confirm that our request is working properly. Then, we’ll move on to extracting top posts from the profile.

I’m picking the profile of the best bagel shop in New York as the target URL, simply because I think bagels are the best breakfast items in the world 🥯

Scraping Profile Information

Before extracting any data, we need to send our first request to Instagram’s public API.

If everything is set up correctly, we should receive a 200 OK response, confirming that the request was successful:

import requests
import urllib.parse

# Your Scrape.do API token
token = "<your-token>"

# Instagram's public profile endpoint (replace the username)
username = "bkbagelny"
profile_url = f"https://www.instagram.com/api/v1/users/web_profile_info/?username={username}"
encoded_url = urllib.parse.quote_plus(profile_url)

# Construct the Scrape.do request URL
api_url = f"https://api.scrape.do/?token={token}&url={encoded_url}"

# Send the request through Scrape.do
response = requests.get(api_url)

# Print response status to verify success
print(response)  # Expect <Response [200]> if successful

If you see <Response [200]>, it means Scrape.do successfully bypassed Instagram’s restrictions, and we can now extract profile details.
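Since every request in this guide wraps a target URL in the same way, the URL construction can be pulled into small helpers. The sketch below is one possible way to do that; `build_scrape_do_url` and `profile_info_url` are hypothetical names chosen for illustration, not part of any Scrape.do SDK, and the only Scrape.do parameters used are the `token` and `url` ones shown above.

```python
import urllib.parse

SCRAPE_DO_ENDPOINT = "https://api.scrape.do/"


def build_scrape_do_url(token: str, target_url: str) -> str:
    """Wrap target_url in a Scrape.do request URL (hypothetical helper)."""
    # quote_plus percent-encodes ':', '/', '?', '=' and '&', so the target's
    # own query string is not mistaken for Scrape.do parameters.
    encoded_target = urllib.parse.quote_plus(target_url)
    return f"{SCRAPE_DO_ENDPOINT}?token={token}&url={encoded_target}"


def profile_info_url(username: str) -> str:
    """Build the public profile endpoint URL used in this section."""
    # Encode the username defensively in case it contains unusual characters.
    safe_username = urllib.parse.quote(username, safe="")
    return (
        "https://www.instagram.com/api/v1/users/web_profile_info/"
        f"?username={safe_username}"
    )


if __name__ == "__main__":
    # Only builds and prints the URL; no network request is sent here.
    print(build_scrape_do_url("<your-token>", profile_info_url("bkbagelny")))
```

You would then pass the returned string to `requests.get()` exactly as in the script above.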

Extracting Profile Data

Once we’ve confirmed that our request is working, we’ll parse the JSON response to extract key profile details.

Because this endpoint responds with structured JSON, it’s pretty easy to parse using only the JSON decoder built into the requests library:

import requests
import urllib.parse

# Your Scrape.do API token
token = "<your-token>"

# The Instagram username we want to scrape
username = "bkbagelny"

# Construct the Instagram API URL for fetching profile information
profile_url = f"https://www.instagram.com/api/v1/users/web_profile_info/?username={username}"

# Encode the URL so it can be safely passed as a parameter
encoded_url = urllib.parse.quote_plus(profile_url)

# Construct the Scrape.do request URL to avoid blocks and login restrictions
api_url = f"https://api.scrape.do/?token={token}&url={encoded_url}"

# Send the request through Scrape.do
response = requests.get(api_url)

# Parse the JSON response
data = response.json()

# Extract user data from the JSON response
user_data = data["data"]["user"]

# Print detailed Instagram profile information
print("=== Detailed Instagram Profile Information ===")
print("Username:", user_data["username"])  # The Instagram handle of the user
print("Full Name:", user_data["full_name"])  # The full name of the user
print("Biography:", user_data["biography"])  # The bio text from the profile

# Extract biography with any embedded entities (e.g., mentions, hashtags)
print("Biography with Entities:", user_data["biography_with_entities"]["raw_text"])

# External URL (e.g., website link in profile)
print("External URL:", user_data["external_url"])

# High-resolution profile picture URL
print("Profile Picture URL:", user_data["profile_pic_url_hd"])

# Business-related information
print("Business Category:", user_data["business_category_name"])  # Business category if applicable
print("Category Name:", user_data["category_name"])  # General category associated with the profile
print("Is Business Account:", user_data["is_business_account"])  # Boolean flag for business accounts

# Privacy and verification status
print("Is Private:", user_data["is_private"])  # Boolean flag for private accounts
print("Is Verified:", user_data["is_verified"])  # Boolean flag for verified accounts

# Engagement statistics
print("Follower Count:", user_data["edge_followed_by"]["count"])  # Number of followers
print("Following Count:", user_data["edge_follow"]["count"])  # Number of people the account follows
print("Total Posts Count:", user_data["edge_owner_to_timeline_media"]["count"])  # Total number of posts

Here’s the output you’ll get:

=== Detailed Instagram Profile Information ===

Username: bkbagelny

Full Name: Brooklyn Bagel & Coffee Co 🥯

Biography: <---redacted--->

Business Category: None

Category Name: Bagel Shop

Is Business Account: False

Is Private: False

Is Verified: False

Follower Count: 108057

Following Count: 7283

Total Posts Count: 1457
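If you plan to scrape many profiles, direct indexing such as `user_data["full_name"]` will raise a `KeyError` whenever a field is absent. A minimal sketch of a more forgiving approach is shown here; `summarize_profile` is a hypothetical helper name, and the sample dictionary is hand-written to mirror the fields and values printed above rather than a real API response.

```python
from typing import Any, Dict, Optional


def summarize_profile(user_data: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten the 'user' object into a single-level dict.

    Missing or null fields become None instead of raising KeyError.
    """
    def edge_count(key: str) -> Optional[int]:
        # Counters are nested as {"count": N}; tolerate a missing or null edge.
        return (user_data.get(key) or {}).get("count")

    return {
        "username": user_data.get("username"),
        "full_name": user_data.get("full_name"),
        "biography": user_data.get("biography"),
        "category_name": user_data.get("category_name"),
        "is_private": user_data.get("is_private"),
        "is_verified": user_data.get("is_verified"),
        "followers": edge_count("edge_followed_by"),
        "following": edge_count("edge_follow"),
        "posts": edge_count("edge_owner_to_timeline_media"),
    }


# Hand-written sample that mirrors the output shown above (not a live response).
sample_user = {
    "username": "bkbagelny",
    "category_name": "Bagel Shop",
    "is_private": False,
    "is_verified": False,
    "edge_followed_by": {"count": 108057},
    "edge_follow": {"count": 7283},
    "edge_owner_to_timeline_media": {"count": 1457},
}

print(summarize_profile(sample_user))
```

The flat dictionary is convenient to append to a list and export later, one row per profile.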

You’ve already extracted plenty of valuable information, but we can extract even more insights by analyzing the 12 most recent posts which load with every profile request:

Scraping Top Posts From a Profile

Instagram’s public API also provides metadata for a user’s posts, including captions, media URLs, like counts, and timestamps.

The posts are stored inside edge_owner_to_timeline_media["edges"], where each item contains post details like caption, media URL, and engagement data.

Replace the parsing part of our existing script with the following code to extract the top posts from a profile as well:

<--- Setup the same as previous section --->

# Parse the JSON response
data = response.json()

# Extract posts data
posts = data["data"]["user"]["edge_owner_to_timeline_media"]["edges"]

# Display all posts found
print("\n=== Extracted Posts ===")

for i, post in enumerate(posts, 1):
    post_data = post["node"]

    # Get the text of the first caption edge if it exists
    caption_edges = post_data["edge_media_to_caption"]["edges"]
    caption = caption_edges[0]["node"]["text"] if caption_edges else "No caption"

    # This is the unique piece of the link you can use:
    # instagram.com/p/<shortcode> or instagram.com/reel/<shortcode>
    shortcode = post_data.get("shortcode", "N/A")

    print(f"\nPost #{i}")
    print("Shortcode (for link):", shortcode)
    print("Caption:", caption)
    print("Media URL:", post_data["display_url"])
    print("Likes:", post_data["edge_liked_by"]["count"])
    print("Comments:", post_data["edge_media_to_comment"]["count"])

The output should print something like:

=== Extracted Posts ===

Post #1

Shortcode (for link): DG8BLeyR8Hc

Caption: <---redacted--->

Media URL: <---link--->

Likes: 93

Comments: 8

Post #2

<--- 11 more posts --->

Combining the code in this section based on your needs will give you a scraper that can extract key information from hundreds of Instagram profiles in a matter of minutes.
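To turn the follower count and the recent posts into a single "how is this profile performing" number, one common (but not the only) definition of engagement rate is average likes plus comments per post, divided by followers. The sketch below assumes that definition; `average_engagement_rate` is a hypothetical helper, and the second sample post is invented purely to make the arithmetic visible.

```python
from typing import Any, Dict, List, Optional


def average_engagement_rate(
    edges: List[Dict[str, Any]], follower_count: int
) -> Optional[float]:
    """Mean (likes + comments) per post divided by followers, as a fraction.

    Returns None when there are no posts or no followers to divide by.
    """
    if not edges or follower_count <= 0:
        return None

    total_interactions = 0
    for edge in edges:
        node = edge.get("node", {})
        likes = (node.get("edge_liked_by") or {}).get("count") or 0
        comments = (node.get("edge_media_to_comment") or {}).get("count") or 0
        total_interactions += likes + comments

    return total_interactions / len(edges) / follower_count


# First post mirrors the sample output above; the second one is made up.
sample_edges = [
    {"node": {"edge_liked_by": {"count": 93}, "edge_media_to_comment": {"count": 8}}},
    {"node": {"edge_liked_by": {"count": 107}, "edge_media_to_comment": {"count": 12}}},
]

rate = average_engagement_rate(sample_edges, follower_count=1000)
print(f"Engagement rate: {rate:.2%}")
```

In a real run you would pass the `posts` list from the script above together with `user_data["edge_followed_by"]["count"]`.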

Scrape All Posts of an Instagram Profile

The profile API we used earlier only returns the first page of media. To paginate through every post on a public profile, use Instagram’s GraphQL endpoint with the user timeline document. As of August 12, 2025, the timeline query uses doc_id="9310670392322965" and returns a connection called xdt_api__v1__feed__user_timeline_graphql_connection that includes edges and page_info with end_cursor for pagination.

We will keep the same stack (Python requests and Scrape.do). Set the content type to form-encoded and send variables=<json>&doc_id=<id> as the request body. Scrape.do forwards your POST body to the target transparently, so you do not need extra proxy code.

Note: Instagram occasionally rotates internal GraphQL document IDs. If you start getting 400 or 401 on this call, the doc id likely changed; confirm the current ID with a quick network trace; alternative IDs have been observed in the wild over 2024 to 2025.
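The form-encoded body described above can be sketched in isolation before looking at the full script. This is an illustration under the assumptions already stated in this section (a `variables` JSON string plus a `doc_id` field); `build_graphql_body` is a hypothetical helper name, and the variables shown are a trimmed-down example rather than the complete payload.

```python
import json
import urllib.parse
from typing import Any, Dict


def build_graphql_body(variables: Dict[str, Any], doc_id: str) -> str:
    """Return an application/x-www-form-urlencoded body for a GraphQL POST."""
    # Compact separators keep the JSON free of spaces, matching what a
    # browser would send; Python None is serialized as JSON null.
    compact_json = json.dumps(variables, separators=(",", ":"))
    # urlencode percent-encodes each value (quote_plus by default).
    return urllib.parse.urlencode({"variables": compact_json, "doc_id": doc_id})


# Trimmed-down example variables; the full script sends more fields.
example_variables = {"username": "bkbagelny", "first": 12, "after": None}
body = build_graphql_body(example_variables, "9310670392322965")
print(body)
```

Decoding the body with `urllib.parse.parse_qs` and `json.loads` should give back the original variables, which is a quick sanity check when a request starts failing.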

Full script (requests + Scrape.do; paginated)

import json
import urllib.parse
import requests
from typing import List, Dict, Optional

TOKEN = "<your-token>"
INSTAGRAM_GRAPHQL = "https://www.instagram.com/graphql/query"
DOC_ID = "9310670392322965"  # user timeline posts (verified 2025-02 to 2025-08)


def scrape_all_posts(username: str, page_size: int = 12, max_pages: Optional[int] = None) -> List[Dict]:
    """
    Fetch all public posts for a username via Instagram GraphQL (no login).
    Returns a list of post 'node' dicts. Uses Scrape.do for anti-bot handling.
    """
    # Build the Scrape.do API URL that will forward our POST to Instagram
    encoded_target = urllib.parse.quote_plus(INSTAGRAM_GRAPHQL)
    api_url = f"https://api.scrape.do/?token={TOKEN}&url={encoded_target}"

    # Initial GraphQL variables (mirrors the payload Instagram sends on web)
    variables = {
        "after": None,
        "before": None,
        "data": {
            "count": page_size,
            "include_reel_media_seen_timestamp": True,
            "include_relationship_info": True,
            "latest_besties_reel_media": True,
            "latest_reel_media": True
        },
        "first": page_size,
        "last": None,
        "username": username,
        "__relay_internal__pv__PolarisIsLoggedInrelayprovider": True,
        "__relay_internal__pv__PolarisShareSheetV3relayprovider": True
    }

    headers = {"content-type": "application/x-www-form-urlencoded"}

    all_posts: List[Dict] = []
    page_num = 0
    prev_cursor = None

    while True:
        # Form-encode the body: variables=<json>&doc_id=<id>
        body = f"variables={urllib.parse.quote_plus(json.dumps(variables, separators=(',', ':')))}&doc_id={DOC_ID}"
        r = requests.post(api_url, headers=headers, data=body, timeout=30)
        r.raise_for_status()
        data = r.json()

        # The timeline connection that holds edges and pagination info
        conn = data["data"]["xdt_api__v1__feed__user_timeline_graphql_connection"]
        edges = conn.get("edges", [])
        all_posts.extend(edge["node"] for edge in edges)

        page_info = conn["page_info"]
        end_cursor = page_info.get("end_cursor")
        has_next = page_info.get("has_next_page")
        page_num += 1

        # Stop conditions
        if not has_next:
            break
        if end_cursor == prev_cursor:
            break  # cursor did not advance; avoid looping forever
        if max_pages and page_num >= max_pages:
            break

        prev_cursor = end_cursor
        variables["after"] = end_cursor  # advance the cursor

    return all_posts


# Example run
if __name__ == "__main__":
    posts = scrape_all_posts("bkbagelny", page_size=12, max_pages=3)
    print(f"Collected posts: {len(posts)}")

    # Show a compact snapshot of the first few items
    for p in posts[:3]:
        shortcode = p.get("shortcode")
        caption_edges = p.get("edge_media_to_caption", {}).get("edges", [])
        caption = caption_edges[0]["node"]["text"] if caption_edges else ""
        print({
            "shortcode": shortcode,
            "url": f"https://www.instagram.com/p/{shortcode}/" if shortcode else None,
            "likes": p.get("edge_liked_by", {}).get("count"),
            "comments": p.get("edge_media_to_comment", {}).get("count"),
            "caption": (caption[:120] + "...") if len(caption) > 120 else caption
        })

We POST to /graphql/query with the timeline doc_id plus a variables object. Instagram returns twelve posts per page with page_info.end_cursor; we loop until has_next_page is false or max_pages is reached.

The shortcode lets you construct canonical post links for further processing; the edges contain caption, media URL, likes, and comments for each item.
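Once you have a list of post nodes, you will usually want to persist them. Here is a rough sketch of a CSV export using only the standard library; `post_to_row` and `write_posts_csv` are hypothetical helpers, and they assume each node exposes the same keys the script above reads (`shortcode`, `edge_liked_by`, `edge_media_to_comment`, `edge_media_to_caption`). The sample node is hand-written, not a live response.

```python
import csv
import io
from typing import Any, Dict, Iterable, TextIO

FIELDS = ["shortcode", "url", "likes", "comments", "caption"]


def post_to_row(node: Dict[str, Any]) -> Dict[str, Any]:
    """Pick the fields of interest out of one post node."""
    shortcode = node.get("shortcode")
    caption_edges = (node.get("edge_media_to_caption") or {}).get("edges", [])
    return {
        "shortcode": shortcode,
        "url": f"https://www.instagram.com/p/{shortcode}/" if shortcode else "",
        "likes": (node.get("edge_liked_by") or {}).get("count"),
        "comments": (node.get("edge_media_to_comment") or {}).get("count"),
        "caption": caption_edges[0]["node"]["text"] if caption_edges else "",
    }


def write_posts_csv(nodes: Iterable[Dict[str, Any]], out: TextIO) -> int:
    """Write one CSV row per post node and return the number of rows written."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    count = 0
    for node in nodes:
        writer.writerow(post_to_row(node))
        count += 1
    return count


# Hand-written sample node using the keys the script above reads.
sample_node = {
    "shortcode": "DG8BLeyR8Hc",
    "edge_liked_by": {"count": 93},
    "edge_media_to_comment": {"count": 8},
    "edge_media_to_caption": {"edges": [{"node": {"text": "Sample caption"}}]},
}

buffer = io.StringIO()
rows_written = write_posts_csv([sample_node], buffer)
print(buffer.getvalue())
```

To write to disk instead of memory, open the file with `open("posts.csv", "w", newline="", encoding="utf-8")` and pass it as `out`.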

💡 The “shortcode” is a vital piece of information that you will need for the next section:

Scraping Instagram Post Data and Top Comments

Instagram no longer offers an easy way to retrieve post details through a simple API. Instead, it relies on GraphQL queries, which require constructing requests manually.

Unlike scraping profile pages where we could retrieve multiple posts at once, extracting detailed post data (likes, captions, timestamps, video URLs) requires a direct query to Instagram’s internal GraphQL API.

This is where doc_id comes in. Instagram assigns specific predefined query IDs to different types of requests. For example, "8845758582119845" is used to fetch detailed post information, while other IDs exist for fetching comments, user interactions, and stories.

Rather than using a post ID, Instagram’s API requires a shortcode (the unique part of the post’s URL that we extracted in the previous section). This means we must encode the request properly before sending a request using Scrape.do, ensuring we bypass login walls and avoid being blocked.
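If you start from post links rather than from the profile scraper, the shortcode can be pulled out of the URL. The following is a small sketch that covers the two link shapes mentioned in this guide (`/p/<shortcode>` and `/reel/<shortcode>`); `extract_shortcode` is a hypothetical helper, and the character set in the pattern (letters, digits, `-`, `_`) is an assumption based on how shortcodes typically look.

```python
import re
from typing import Optional

# Assumed shortcode alphabet: letters, digits, '-' and '_'.
_SHORTCODE_RE = re.compile(r"instagram\.com/(?:p|reel)/([A-Za-z0-9_-]+)")


def extract_shortcode(url: str) -> Optional[str]:
    """Return the shortcode from a post or reel URL, or None if absent."""
    match = _SHORTCODE_RE.search(url)
    return match.group(1) if match else None


print(extract_shortcode("https://www.instagram.com/p/DG8BLeyR8Hc/"))
print(extract_shortcode("https://www.instagram.com/bkbagelny/"))
```

Profile links and other non-post URLs return `None`, so you can filter a mixed list of links before sending any requests.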

Extract Post Information

To extract detailed post data, we send a GraphQL request through Scrape.do. This allows us to bypass Instagram’s login restrictions and retrieve key details, including:

- Likes & Comments – Engagement metrics.

- Caption & Location – Post description and tagged location (if available).

- Timestamp – When the post was published.

- Video URL – If the post is a video, we extract its direct URL.

Here’s the full script to fetch post details:

import requests
import urllib.parse
import json
from datetime import datetime

# Required parameters
doc_id = "8845758582119845"  # GraphQL query ID for fetching post details
shortcode = "DG8BLeyR8Hc"  # Replace with the target post's shortcode
token = "<your-token>"

# Construct GraphQL variables string (compact JSON, no spaces)
variables_str = json.dumps({"shortcode": shortcode}, separators=(",", ":"))
encoded_vars = urllib.parse.quote_plus(variables_str)

# Instagram GraphQL API URL
instagram_url = f"https://www.instagram.com/graphql/query?doc_id={doc_id}&variables={encoded_vars}"

# Encode the URL for Scrape.do
encoded_instagram_url = urllib.parse.quote_plus(instagram_url)
api_url = f"https://api.scrape.do/?token={token}&url={encoded_instagram_url}"

# Send the request through Scrape.do
response = requests.get(api_url)
data = response.json()

# Extract post data
post_data = data["data"]["xdt_shortcode_media"]
post_id = post_data["id"]
shortcode = post_data["shortcode"]
account_name = post_data["owner"]["username"]
like_count = post_data["edge_media_preview_like"]["count"]
comment_count = post_data["edge_media_to_parent_comment"]["count"]
is_video = post_data["is_video"]
video_url = post_data.get("video_url", "N/A") if is_video else "N/A"

# Extract caption
caption_edges = post_data["edge_media_to_caption"]["edges"]
caption_text = caption_edges[0]["node"]["text"] if caption_edges else ""

# Extract location (if available)
location = post_data["location"]["name"] if post_data.get("location") else "N/A"

# Convert timestamp to readable format (local time)
published_time = datetime.fromtimestamp(post_data["taken_at_timestamp"]).strftime("%Y-%m-%d %H:%M:%S")

# Display post details
print("=== Post Details ===")
print("Post ID:", post_id)
print("Shortcode:", shortcode)
print("Account Name:", account_name)
print("Like Count:", like_count)
print("Comment Count:", comment_count)
print("Caption:", caption_text)
print("Location:", location)
print("Published Time:", published_time)
print("Is Video:", is_video)
print("Video URL:", video_url)

And this is the output it should print:

=== Post Details ===

Post ID: 3583744590496645596

Shortcode: DG8BLeyR8Hc

Account Name: putaforkinit_

Like Count: 93

Comment Count: 8

Caption: <---redacted--->

Location: Brooklyn Bagel & Coffee Company

Published Time: 2025-03-08 15:07:48

Is Video: False

Video URL: N/A

This information should be enough to tell whether a post is getting good engagement and going viral, but understanding why it goes viral is another task:

Extracting Top Comments and Replies

When scraping post details, the response we receive also includes the top comments and replies.

This allows us to analyze user engagement and run a sentiment analysis.

Instagram structures comments as a nested hierarchy, where:

- Top-level comments appear first under a post.

- Replies to comments are grouped inside edge_threaded_comments.

Using this structure, we can extract comments and their replies with the following code:

import requests
import urllib.parse
import json
from datetime import datetime

# Define variables
doc_id = "8845758582119845"  # GraphQL query ID for fetching post comments
shortcode = "DG8BLeyR8Hc"  # Replace with the target post's shortcode
token = "<your-token>"

# Construct Instagram query URL
variables_str = json.dumps({"shortcode": shortcode}, separators=(",", ":"))
encoded_vars = urllib.parse.quote_plus(variables_str)
instagram_url = f"https://www.instagram.com/graphql/query?doc_id={doc_id}&variables={encoded_vars}"

# Construct Scrape.do API request
encoded_instagram_url = urllib.parse.quote_plus(instagram_url)
api_url = f"https://api.scrape.do/?token={token}&url={encoded_instagram_url}"

# Send the GET request
response = requests.get(api_url)
data = response.json()
post_data = data["data"]["xdt_shortcode_media"]

# Extract comments
comment_edges = post_data["edge_media_to_parent_comment"]["edges"]

for edge in comment_edges:
    node = edge["node"]
    comment_id = node["id"]
    comment_text = node["text"]
    created_at = datetime.fromtimestamp(node["created_at"]).strftime("%Y-%m-%d %H:%M:%S")
    owner_username = node["owner"]["username"]
    like_count = node["edge_liked_by"]["count"]

    print("Comment ID:", comment_id)
    print("Text:", comment_text)
    print("Created At:", created_at)
    print("Owner Username:", owner_username)
    print("Likes:", like_count)

    # Check for replies
    if "edge_threaded_comments" in node and node["edge_threaded_comments"]["edges"]:
        for reply_edge in node["edge_threaded_comments"]["edges"]:
            reply_node = reply_edge["node"]
            reply_id = reply_node["id"]
            reply_text = reply_node["text"]
            reply_created_at = datetime.fromtimestamp(reply_node["created_at"]).strftime("%Y-%m-%d %H:%M:%S")
            reply_owner_username = reply_node["owner"]["username"]
            reply_like_count = reply_node["edge_liked_by"]["count"]

            # Print reply details
            print(" └ Reply ID:", reply_id)
            print(" └ Text:", reply_text)
            print(" └ Created At:", reply_created_at)
            print(" └ Owner Username:", reply_owner_username)
            print(" └ Likes:", reply_like_count)
            print(" ──────────")  # Small separator after each reply

    print("-" * 40)  # Separator for readability

This code will print a structured output of the top comments for this post:

Comment ID: 18059135693073431

Text: That’s stuffed!!

Created At: 2025-03-11 01:08:22

Owner Username: gaogirlsgrubbin

Likes: 2

└ Reply ID: 18040558829165188

└ Text: @gaogirlsgrubbin 🔥🔥🔥

└ Created At: 2025-03-11 07:33:49

└ Owner Username: bkbagelny

└ Likes: 1

──────────

----------------------------------------

<--- Rest of the comments --->

If you want to extract ALL comments from a single post, you will have to work with end_cursor and send a new request for each next batch of comments, but for sentiment analysis the first page of comments, which are the highlighted ones, should be sufficient.
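For sentiment analysis it is often easier to work with a flat list of texts than with the nested comment and reply structure. The sketch below flattens one post's first page of comments; `flatten_comments` is a hypothetical helper, it assumes the same keys the script above reads, and the sample data is hand-written to mirror the output shown above.

```python
from typing import Any, Dict, List, Optional


def _comment_row(node: Dict[str, Any], parent_id: Optional[str]) -> Dict[str, Any]:
    """Reduce one comment or reply node to the fields used for analysis."""
    return {
        "id": node.get("id"),
        "parent_id": parent_id,  # None for top-level comments
        "username": (node.get("owner") or {}).get("username"),
        "text": node.get("text", ""),
        "likes": (node.get("edge_liked_by") or {}).get("count"),
    }


def flatten_comments(post_data: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return top-level comments and their replies as one flat list."""
    rows: List[Dict[str, Any]] = []
    parents = (post_data.get("edge_media_to_parent_comment") or {}).get("edges", [])
    for edge in parents:
        node = edge["node"]
        rows.append(_comment_row(node, parent_id=None))
        replies = (node.get("edge_threaded_comments") or {}).get("edges", [])
        for reply_edge in replies:
            rows.append(_comment_row(reply_edge["node"], parent_id=node.get("id")))
    return rows


# Hand-written sample mirroring the comment and reply printed above.
sample_post = {
    "edge_media_to_parent_comment": {"edges": [{"node": {
        "id": "18059135693073431",
        "text": "That’s stuffed!!",
        "owner": {"username": "gaogirlsgrubbin"},
        "edge_liked_by": {"count": 2},
        "edge_threaded_comments": {"edges": [{"node": {
            "id": "18040558829165188",
            "text": "@gaogirlsgrubbin 🔥🔥🔥",
            "owner": {"username": "bkbagelny"},
            "edge_liked_by": {"count": 1},
        }}]},
    }}]},
}

for row in flatten_comments(sample_post):
    print(row)
```

The `parent_id` column keeps the thread relationship, so you can still group replies under their comment after scoring each text.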

Conclusion

You’ve successfully scraped profile data and post details from Instagram, which can unlock a lot of insights for making data-driven decisions.

With Scrape.do, you don’t need to worry about running into blocks; you access your target domain every single time.