Category:Scraping Basics

Explore The History of Web Scraping

Full Stack Developer

The history of web scraping, which is used for data scraping, data scraping, or data collection through websites, goes back to the times when the internet first emerged. Although the web scraping method is generally described as collecting data from the contents on the internet, it has not always been used for this purpose. Web scraping, which was first developed to automate tasks that are complex or troublesome for people and used for this purpose, has started to be used for commercial purposes over time. The commercial usage areas of this application can be listed as scraping the product prices of the competitors, increasing the number of potential customers by attracting the sales of the competitors, finding the missing aspects of the marketing campaigns, and eliminating these deficiencies. The general purpose of web scraping, which is used for commercial purposes, is to take the top place in the internet trade market by defeating the competitors.

In this article, we told you what web scraping is, what web scraping is for, how web scraping was born, and the timeline of web scraping. We've also included a section at the end of the text about why you chose us, Scrape.do. After reading that section, you will understand in the best way why you should choose us.

With the Scrape.do, which you can use to scrape websites better, you can easily access data on any website on the internet. Under normal circumstances, if your IP address is detected while web scraping, your IP address may be blocked manually by the owner of that website or automatically by the website's codes. But you can overcome all of this by using Scrape.do, and with our very affordable packages! What are you waiting for to use Scrape.do?

What is Web Scraping?

By obtaining the data on any website beyond the control of the website owner, you can increase your online business like never before with web scraping, which allows you to use and categorize the content on the websites as you wish. Although web scraping is used today to retrieve data from a website in a categorized way, this method was formerly used to connect with test frameworks. Companies like IP-Label, which use tools like Selenium, used this method to make it easier for their web developers and administrators to monitor the performance of their websites.

Scraping data from the Internet is very similar to web indexing, the process by which many listed websites come up when you search for any search query in search engines. The most important difference between indexing internet sites and obtaining data from the internet is robots.txt software, this software is software that gives the bots accessing the internet where to go. Web indexers, that is, search engines that index websites, do not conflict with robots.txt software and act in harmony. Web scraper tools, on the other hand, cannot work in harmony with the robots.txt file, as they are responsible for stealing and illegally obtaining content from websites. Let's add that web scrapers obtain data by visiting websites and discover new pages by logging into seed pages, and by this way they can access data on a website.

How Web Scraping Method Was Born?

Although Google is often regarded as the first browser to fully scan websites and the web, let us tell you that surfing and accessing data on websites have a long and rather interesting history than you might think. Although the first browsers on the internet could only scan data and text on websites, today's internet data scanning tools can now monitor web applications other than internet browsing in terms of security vulnerabilities and accessibility.

When the internet was first invented and used, it was not possible to search on the internet. In this period, when there was no search engine, people could access the files shared with them via FTTP (File Transfer Protocol) rather than finding the files they wanted. People needed an automated program to better share data with each other, so they invented and started using a program known as Web Browser and Bot to meet these needs.

It has become possible to organize all files with the Web Browser, which allows them to find files uploaded to the Internet to be shared with other users over the Internet. This automatic software, called a web browser or bot and used to access files on the internet, aims to index all the content on the websites and extracts the data on the website to a certain database to perform the indexing process.

Since the first web browsers were developed for a very small internet, these web browsers could scan approximately one hundred thousand internet pages. If we look at our current position, we can say that some websites only have millions of pages on their own sites. Since millions of new web pages were added to the internet with the help of the search engines created, it was necessary to add internet data such as audio, video, pictures, and texts. After this data was designed to be uploaded to the internet by the developers of the internet, the internet turned into a completely open data source.

Thanks to the internet, which can be easily searched and you can reach the data you want by simply searching the search queries, we have a real data source. As people have also expanded their use of the internet, it has become easier for people to start sharing the information they want publicly. Although sharing data seemed like a simple process, many websites did not allow direct downloading of the data on their pages. The internet has become increasingly inefficient, as websites that cannot be downloaded directly require manual copying of data. There was a need for an application to automatically obtain data on the Internet, which became web scraping.

Timeline of Web Scraping History

The history of web scraping can be examined under six headings: the birth of the World Wide Web, the first web browser, Wanderer, JumpStation, BeautifulSoup, and the birth of visual web scrapers.

Birth of the World Wide Web

If you are wondering how far the history of scraping any website on the Internet goes, we can say that this method has its origins in the creation of the World Wide Web. The birth of the World Wide Web in this timeline dating back to 1989 was accomplished by Tim Berners-Lee. The original purpose of the World Wide Web was to be a platform for information exchange between scientists at universities and institutes around the world who need up-to-date information. But the World Wide Web developed quite quickly and three different features occurred.

- URL

- Used to introduce a particular website to the scraper, which is used to scrape data from the internet.

- Embedded Hyperlinks

- They contribute to our navigation on the websites we specify.

- Web pages

- They can be characterized as data pages containing various data such as text, images, audio, video and visual content.

The First Web Browser

Tim Berners-Lee, who founded the World Wide Web and made it possible to exchange information around the world, did not stop after developing the World Wide Web and decided to develop a way for people to access the World Wide Web. After two years of work, he invented the first web browser with a web page running on a server. Thanks to this web browser, it was possible for people to log into the network and share things with other people over the network.

Wanderer

Wanderer, a Perl-based web browser for measuring the size of the network, was the first of its kind. This web browser was developed by Matthew Gray of the Massachusetts Institute of Technology. After it was decided to develop Wanderer, this web browser was used to create an index called Wandex instead of measuring the size of the World Wide Web. Wanderer with Wandex was a web browser with the potential to become the first general-purpose World Wide Web search engine, although Matthew Gray, the founder of Wanderer, described this idea as a mere assertion.

JumpStation

In 1993, when Wanderer and Wanderer with Wandex emerged, JumpStation, a technology that formed the basis of search engines such as Google, Bing, and Yahoo, began to be used. The first browser-based search engine, this software is a basic and fairly simple version of the search engines we currently have. As JumpStation became more and more popular, it was only a matter of time before millions of web pages appeared and were added to the index of this application. We can easily say that the internet has turned into an open-source data platform with JumpStation.

BeautifulSoup

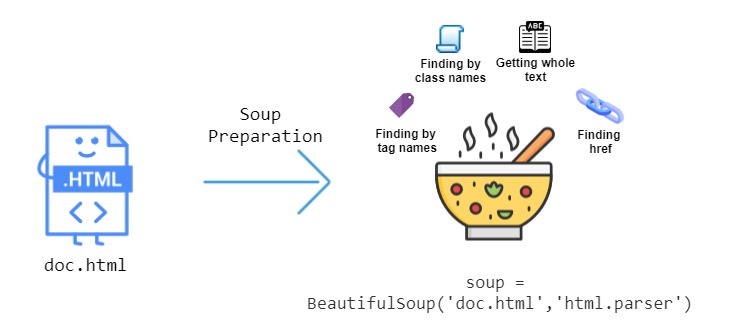

BeautifulSoup was released in 2004, just eleven years after JumpStation, Wanderer, and Wanderer with Wandex came out in 1993. Written in the Python programming language, this library contained widely used algorithms, and it was possible to describe this library as an HTML parser. With BeautifulSoup, the meanings of the structures of the websites could be understood and it became possible to parse all the data contained in HTML containers. The ability to easily parse data in HTML form was a development that reduced the workload and working hours of programmers.

The internet, where anyone with a computer and internet connection can log in and access the information they want with the help of a search engine, has become a real source of information for people. It was also possible for people to receive important information from the information presented to them and to facilitate their lives with the help of this information. Although the internet did not prohibit downloading content on its pages in the early stages of its development, this system has changed over time and data has become difficult to download. In projects where a large amount of data was to be reviewed, manual and human-powered copying and pasting was a difficult task, so people had to find a new way to obtain the data.

The Rise of Visual Web Scrapers

When people started to be unable to download the data they wanted to get from the internet for offline use, a new way had to be discovered, this newly discovered way called web scraping. Visual web scraping software, Web Integration Platform version 6.0, was software developed by Stefan Andresen that could answer people's needs. Thanks to this software, users could highlight the information they deem necessary on the web page. As a result of this emphasis, the data they wanted to have were saved in an excel file or a database, so it became very easy to scrape data over the internet.

The web scraping method, which is an ongoing and frequently used technology today, is a method that develops the more the technology advances. Since the number of companies on the Internet is increasing day by day and people need the advantage to make their companies stand out, they use web scraping to access existing information on the internet and try to find a way to put their companies ahead. Thanks to this method, which is most frequently used to obtain data on a large scale, it becomes possible to gain potential customers by leaving competitors behind.

Why Choose Us For Your Web Scraping Services?

One of the biggest challenges you may encounter when doing web scraping is that websites use software that doesn't allow you to do web scraping. This software may recognize your IP addresses and block you or restrict your access for a short time. However, if you use Scrape.do, you can easily change your IP addresses and perform the web scraping you want without any restrictions, thanks to the rotating proxies already included in our packages.

You need to perform a geo-specific web scraping as the services provided by some websites may be based on geography. Many web scraping tools do not allow you to geo-set your web scraping, but with the Geotargeting feature of Scrape.do, you can easily scrape data from websites in any country in any region.

See can we can do for you, as a web scraping tool!

Full Stack Developer