
How to Scrape Amazon Reviews (Behind Login Too)

Software Engineer

If you are running market research, training an AI model on customer sentiment, or just looking for well-performing products to resell, Amazon’s review data is invaluable.

But it isn’t all in one place.

Featured reviews are embedded directly into the product page, while the full review history sits behind pagination and a login wall.

In this guide, we’ll cover both.

Find full working code for scraping Amazon product data, reviews, and search results here ⚙ and here's more on how to scrape Amazon.

Scrape Featured Reviews

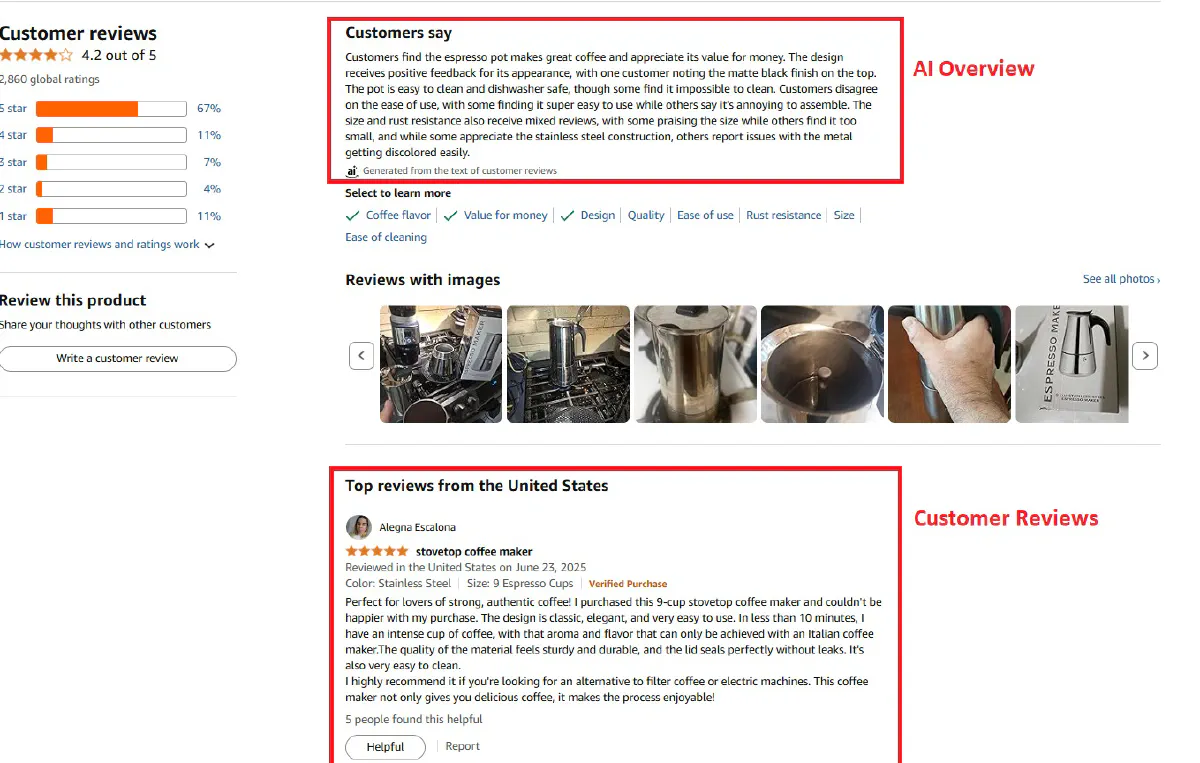

Amazon product pages often display a handful of “Featured Reviews” directly under the main product information.

These reviews usually represent a mix of positive, negative, and most helpful feedback, making them valuable for sentiment analysis, quality checks, or customer research.

These are often enough for a quick review analysis, so let's start with them:

Prerequisites

We'll first need to install the required libraries, get our Scrape.do token, and build the request that fetches the page.

1. Install Required Libraries

We’ll be using Python’s requests library for HTTP requests, BeautifulSoup for HTML parsing, re for text cleanup, and csv for saving results.

re and csv ship with Python, so only the first two need installing:

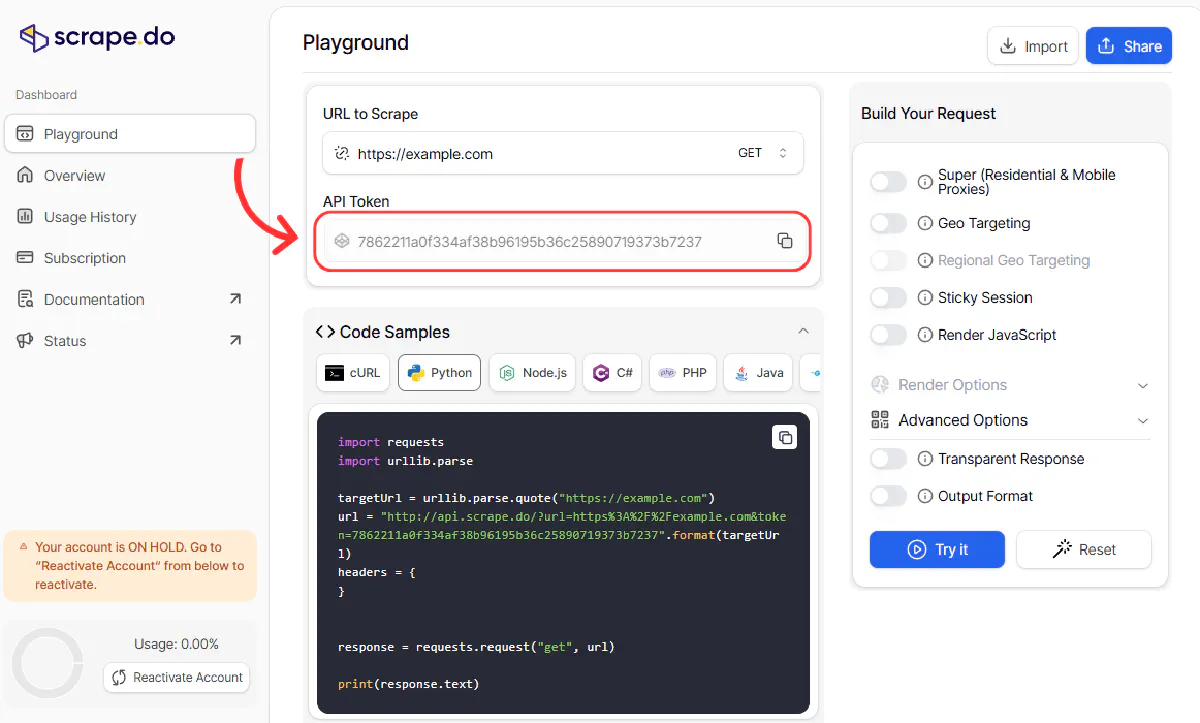

```bash
pip install requests beautifulsoup4
```

2. Get Your Scrape.do Token

- Create a Scrape.do account for FREE.

- Open the Dashboard and copy your API Token from the top of the screen.

3. Build the Request

We’ll pass our product URL to Scrape.do, along with our token and geoCode=us to ensure we get the US version of the page.

```python
import requests
import urllib.parse
from bs4 import BeautifulSoup

# Scrape.do token and target product URL
token = "<SDO-token>"
url = "https://us.amazon.com/Coffee-Stovetop-Espresso-Percolator-Stainless/dp/B06X3SSTD8"

# Build the Scrape.do API request (the target URL must be URL-encoded)
api_url = f"https://api.scrape.do/?token={token}&url={urllib.parse.quote_plus(url)}&geoCode=us"

# Fetch the page, fail loudly on HTTP errors, and parse the HTML
response = requests.get(api_url, timeout=60)
response.raise_for_status()
soup = BeautifulSoup(response.text, "html.parser")
```

Extract Review Data

Next, we extract only the fields we need from the parsed HTML.

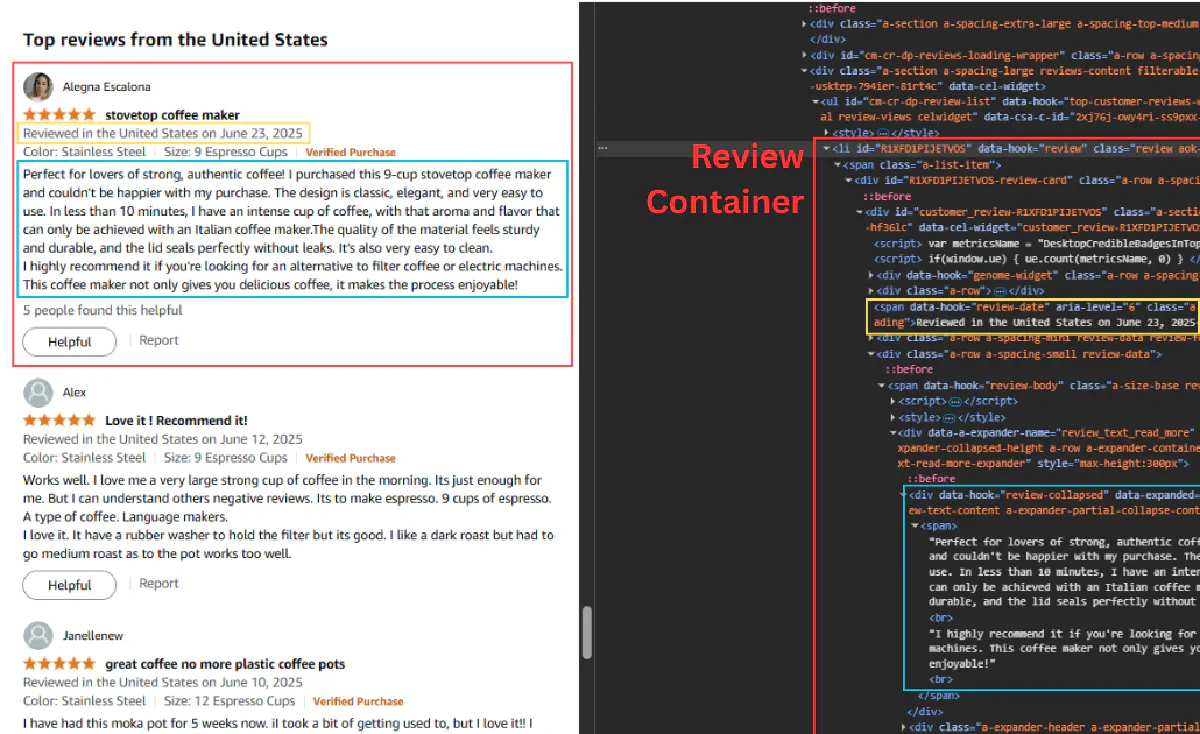

If you inspect the HTML, each review sits inside an `<li>` element with the attribute `data-hook="review"`.

Inside that container, we can grab:

- Star rating → from `<i data-hook="review-star-rating">`, or fall back to an `<i>` with the `a-icon-star` class

- Review date → from `<span data-hook="review-date">` (remove the “Reviewed in … on” prefix)

- Review content → from `<span data-hook="review-body">`

- Helpful votes → from `<span data-hook="helpful-vote-statement">` (extract just the number)
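The two text cleanups in that list are plain regular expressions, so you can test them on their own before touching live HTML. A minimal sketch: the helper names `clean_review_date` and `parse_helpful_votes` are hypothetical, and the sample strings are illustrative examples of the formats described above.

```python
import re

def clean_review_date(raw):
    """Strip the 'Reviewed in <country> on ' prefix, leaving only the date."""
    return re.sub(r"Reviewed in .* on ", "", raw).strip()

def parse_helpful_votes(raw):
    """Return the first number in a helpful-vote statement, or '0' if there is none."""
    match = re.search(r"\d[\d,]*", raw)
    # Drop thousands separators such as "1,234"
    return match.group().replace(",", "") if match else "0"

print(clean_review_date("Reviewed in the United States on March 3, 2024"))
print(parse_helpful_votes("12 people found this helpful"))
```

Amazon may spell out a single vote in words (for example "One person found this helpful"), which this sketch returns as "0"; add a special case if that distinction matters for your analysis.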

Here’s the parsing logic:

```python
import re

reviews = []

for review in soup.find_all("li", {"data-hook": "review"}):
    # Star rating: prefer the data-hook, fall back to any a-icon-star icon
    rating_elem = (
        review.find("i", {"data-hook": "review-star-rating"})
        or review.find("i", class_=re.compile(r"a-icon-star"))
    )
    rating_alt = rating_elem.find("span", class_="a-icon-alt") if rating_elem else None
    # The hidden text reads like "4.0 out of 5 stars"; keep just the number
    rating = rating_alt.get_text().split()[0] if rating_alt else "N/A"

    # Review date: strip the "Reviewed in <country> on " prefix
    date_elem = review.find("span", {"data-hook": "review-date"})
    date = re.sub(r"Reviewed in .* on ", "", date_elem.get_text()) if date_elem else "N/A"

    # Review body text
    content_elem = review.find("span", {"data-hook": "review-body"})
    content = content_elem.get_text(strip=True) if content_elem else "N/A"

    # Helpful votes: first number in e.g. "12 people found this helpful"
    helpful_elem = review.find("span", {"data-hook": "helpful-vote-statement"})
    votes = re.findall(r"\d+", helpful_elem.get_text()) if helpful_elem else []
    helpful = votes[0] if votes else "0"

    reviews.append([review.get("id", ""), rating, date, content, helpful])

print(f"Found {len(reviews)} featured reviews")
```

Our data is now ready to export, so let's do that:

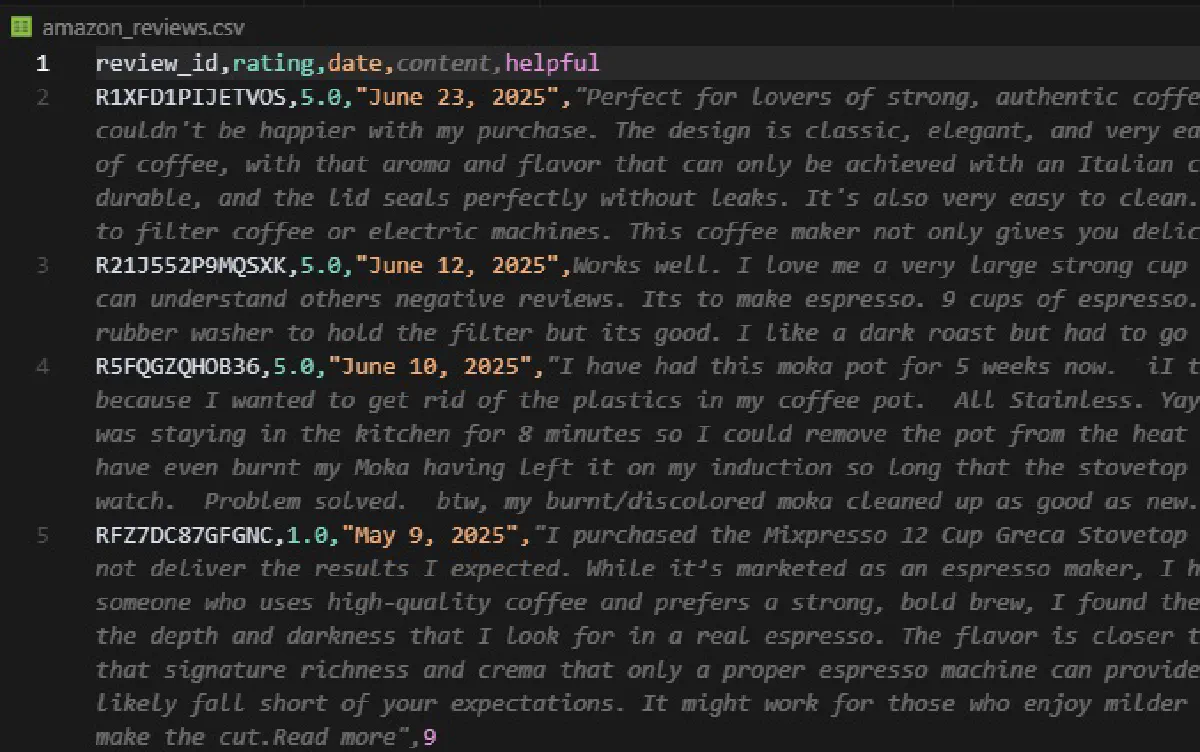

Export Reviews to CSV

We can export the `reviews` list to a CSV file for easier storage. Python’s built-in csv module makes this straightforward:

```python
import csv

# Write one header row, then one row per review
with open("amazon_reviews.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["review_id", "rating", "date", "content", "helpful"])
    writer.writerows(reviews)

print("Reviews saved to amazon_reviews.csv")
```

This will create a file named amazon_reviews.csv in your working directory with columns for review ID, rating, date, content, and helpful votes.
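To sanity-check the export, you can load the file back with the standard library's `csv.DictReader`, which keys each row by the header names. A small sketch; `load_reviews` and `most_helpful` are hypothetical helper names, not part of any library.

```python
import csv

def load_reviews(path="amazon_reviews.csv"):
    """Read the exported CSV back into a list of dicts keyed by column name."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))

def most_helpful(rows, n=3):
    """Return the n rows with the most helpful votes (stored as text in the CSV)."""
    return sorted(rows, key=lambda r: int(r["helpful"] or 0), reverse=True)[:n]
```

For example, `most_helpful(load_reviews())` returns the three reviews other shoppers voted most helpful. The `int()` conversion matters because every CSV field comes back as a string.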

This should give you decent insight into how a product is performing with consumers.

But if you insist on more...

Scrape All Reviews Behind Login

🛑 ⚠ Before we dive into the code, there’s an important warning you need to read carefully.

Scraping Amazon while logged in is not the same as scraping public product pages.

If you log into your Amazon account and then scrape data:

- You are bound by Amazon’s Terms of Service, which explicitly prohibit "the use of data mining, robots, or similar data gathering and extraction tools."

- Data behind a login wall may not be considered public data, which means scraping and re-using it can be illegal in many countries.

Doing this at scale can lead to:

- Permanent account bans (losing access to your purchases, history, and Prime membership)

- Legal action from Amazon

We do not endorse or recommend scraping logged-in data at scale.

That said, if you want to scrape review data for personal use without republishing or reusing it (for example, researching a product you bought or tracking reviews of your own listings), we’ll walk you through how to do it safely:

Collecting Necessary Cookies

To fetch Amazon’s full review history, you’ll need a handful of your own session cookies from Chrome DevTools.

These prove you’re logged in and let Amazon serve you the complete reviews data.

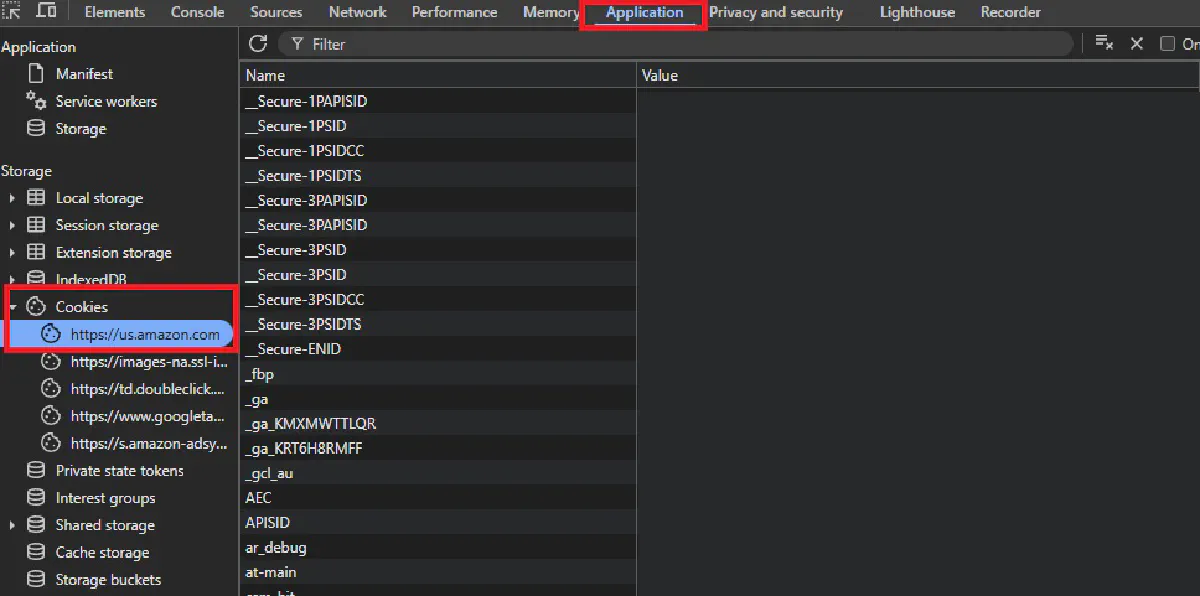

Open the reviews page for your target product while signed in, then right-click and choose Inspect. In the DevTools panel, switch to the Application tab and look under Storage → Cookies for https://us.amazon.com (or whichever Amazon domain matches your geoCode).

Here, locate the cookies named at-main, sess-at-main, session-id, session-id-time, session-token, ubid-main, and x-main.

Copy each cookie's Value field exactly (no surrounding quotes or stray whitespace), write it as name=value, and join the pairs into a single string separated by a semicolon and a space. The final string should look like:

```text
at-main=...; sess-at-main=...; session-id=...; session-id-time=...; session-token=...; ubid-main=...; x-main=...
```

Make sure the cookies come from the same regional Amazon domain you’ll scrape: a mismatch returns a login page instead of reviews. Cookies also expire, so you'll need to refresh them when requests stop returning reviews.
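If you paste the values into a dict first, a few lines of Python can assemble the string in the exact `name=value; name=value` shape and flag any cookie you forgot. This is a sketch: `build_cookie_string` and `REQUIRED_COOKIES` are hypothetical names, and the list simply mirrors the cookies named above.

```python
REQUIRED_COOKIES = [
    "at-main", "sess-at-main", "session-id", "session-id-time",
    "session-token", "ubid-main", "x-main",
]

def build_cookie_string(values):
    """Join cookie values as 'name=value; name=value', failing if any are missing."""
    missing = [name for name in REQUIRED_COOKIES if not values.get(name)]
    if missing:
        raise ValueError(f"Missing cookie values: {', '.join(missing)}")
    pairs = []
    for name in REQUIRED_COOKIES:
        # Drop stray whitespace and quotes picked up while copying from DevTools
        value = values[name].strip().strip('"')
        pairs.append(f"{name}={value}")
    return "; ".join(pairs)
```

Raising early on a missing cookie is deliberate: an incomplete string tends to come back as a login page, which is harder to diagnose than an explicit error.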

Injecting Cookies into Your Request

Once you have your cookie string, you can pass it to Scrape.do using the setCookies parameter.

This tells Amazon’s servers that your scraper is already logged in with your account, so they return the full reviews page instead of a limited public version.

Before sending, URL-encode the cookie string so it can safely travel inside the request URL. Python’s urllib.parse.quote handles this for us.

Then, build the Scrape.do API request with your token, the target reviews page URL, your geoCode, and the encoded cookies.

```python
import urllib.parse

token = "<SDO-token>"

# Your session cookies joined with "; ", then fully URL-encoded
encoded_cookies = urllib.parse.quote(
    "at-main=YOUR_AT_MAIN_VALUE; "
    "sess-at-main=YOUR_SESS_AT_MAIN_VALUE; "
    "session-id=YOUR_SESSION_ID; "
    "session-id-time=YOUR_SESSION_ID_TIME; "
    "session-token=YOUR_SESSION_TOKEN; "
    "ubid-main=YOUR_UBID_MAIN; "
    "x-main=YOUR_X_MAIN_VALUE",
    safe="",
)

# Reviews page for the same product (ASIN B06X3SSTD8)
target_url = "https://us.amazon.com/product-reviews/B06X3SSTD8/"
encoded_target = urllib.parse.quote(target_url, safe="")

api_url = (
    f"https://api.scrape.do/?token={token}"
    f"&url={encoded_target}"
    "&geoCode=us"
    f"&setCookies={encoded_cookies}"
)
```

`api_url` now contains everything needed to make an authenticated request and pull reviews that are normally hidden behind the login wall.
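Hand-assembling the query string works, but it's easy to forget to encode one value. As an alternative sketch, the standard library's `urllib.parse.urlencode` can encode every parameter in one call; passing `quote_via=urllib.parse.quote` makes spaces come out as `%20` rather than `+`. The helper name `build_scrape_do_url` is hypothetical; the parameter names are the ones used in this guide.

```python
import urllib.parse

def build_scrape_do_url(token, target_url, cookies=None, **extra):
    """Build a Scrape.do API URL, percent-encoding every parameter value."""
    params = {"token": token, "url": target_url, "geoCode": "us"}
    if cookies:
        params["setCookies"] = cookies
    # Extra flags such as render="true" are passed through unchanged
    params.update(extra)
    query = urllib.parse.urlencode(params, quote_via=urllib.parse.quote, safe="")
    return f"https://api.scrape.do/?{query}"
```

For the screenshot request in the next step, you would pass `render="true", fullScreenShot="true", returnJSON="true"` as extra keyword arguments.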

Taking a Full Page Screenshot & More

Let's now add a few extra parameters, so we can have Scrape.do render the page in a headless browser, capture a full-page screenshot, and send back the results as JSON.

This will be useful for confirming that the cookies worked and that we’re seeing the complete logged-in review list before parsing.

We enable rendering with render=true, request a full screenshot with fullScreenShot=true, and choose returnJSON=true so the HTML, screenshots, and other data come in a structured JSON response.

The screenshot comes back as a Base64-encoded string, which we can decode and save locally. With these parameters added, the full code looks like this:

```python
import base64
import urllib.parse

import requests

token = "<SDO-token>"

encoded_cookies = urllib.parse.quote(
    "at-main=YOUR_AT_MAIN_VALUE; "
    "sess-at-main=YOUR_SESS_AT_MAIN_VALUE; "
    "session-id=YOUR_SESSION_ID; "
    "session-id-time=YOUR_SESSION_ID_TIME; "
    "session-token=YOUR_SESSION_TOKEN; "
    "ubid-main=YOUR_UBID_MAIN; "
    "x-main=YOUR_X_MAIN_VALUE",
    safe="",
)

# Target an authenticated page to verify login
target_url = "https://us.amazon.com/product-reviews/B06X3SSTD8/"
encoded_target = urllib.parse.quote(target_url, safe="")

# render: headless browser; fullScreenShot: whole page; returnJSON: structured response
url = (
    f"https://api.scrape.do/?token={token}&url={encoded_target}&geoCode=us"
    f"&setCookies={encoded_cookies}&fullScreenShot=true&render=true&returnJSON=true"
)

resp = requests.get(url, timeout=60)
resp.raise_for_status()
json_map = resp.json()

# The screenshot arrives as a Base64 string; decode it to raw image bytes
image_b64 = json_map["screenShots"][0]["image"]
image_bytes = base64.b64decode(image_b64)

file_path = "amazon_screenshot.png"
with open(file_path, "wb") as file:
    file.write(image_bytes)

print(f"Full screenshot saved as {file_path}")
```

If everything works, the screenshot should show the reviews page with your own account logged in.

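To extract all reviews while logged in, drop the render, returnJSON, and fullScreenShot parameters and use **the exact parsing logic we used in the previous section**.

To loop through all pages, add a `pageNumber` query parameter to the target URL (`?pageNumber=2`, since the reviews URL has no query string yet) and keep increasing the page number until a page returns no reviews. Add the parameter before URL-encoding the target URL, so Scrape.do treats it as part of the Amazon URL rather than as one of its own parameters.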
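The loop can be sketched as below. To keep it testable, fetching and parsing are passed in as functions; `page_url`, `scrape_all_pages`, `fetch_html`, `parse_reviews`, and the `max_pages` and `delay_seconds` settings are all hypothetical names chosen for illustration.

```python
import time
import urllib.parse

def page_url(base_url, page_number):
    """Return base_url with pageNumber set, whether or not it already has a query string."""
    parts = urllib.parse.urlsplit(base_url)
    query = dict(urllib.parse.parse_qsl(parts.query))
    query["pageNumber"] = str(page_number)
    return urllib.parse.urlunsplit(parts._replace(query=urllib.parse.urlencode(query)))

def scrape_all_pages(fetch_html, parse_reviews, base_url, max_pages=100, delay_seconds=2.0):
    """Fetch pages 1, 2, 3, ... until one yields no reviews or max_pages is reached."""
    all_reviews = []
    for page in range(1, max_pages + 1):
        html = fetch_html(page_url(base_url, page))
        page_reviews = parse_reviews(html)
        if not page_reviews:
            break  # an empty page means we've run past the last one
        all_reviews.extend(page_reviews)
        time.sleep(delay_seconds)  # pause between requests to keep the load low
    return all_reviews
```

In practice, `fetch_html` would URL-encode the paged URL with `urllib.parse.quote(..., safe="")`, send it through Scrape.do with your setCookies value, and return `response.text`; `parse_reviews` would run the `soup.find_all("li", {"data-hook": "review"})` loop on that HTML and return its rows. The `max_pages` cap guards against looping forever if a page never comes back empty.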

Next Steps

Featured reviews are easy to scrape for a quick snapshot, while logged-in scraping unlocks the full review history but comes with higher risk and strict legal limits—we only suggest this for personal use.

But you can't just pick random products to scrape reviews from. You need a source of product ASINs first.

To build your product list, you can either scrape Amazon search results for a specific keyword or category, or scrape Amazon best sellers to get the top-performing products in any category. Both methods give you ASINs you can then feed into your review scraper.
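Once you have product URLs from search or best-seller pages, pulling out the ASIN and turning it into the reviews URL used earlier is a small regex job. A sketch, assuming product links contain `/dp/<ASIN>` (as the example URL in this guide does) and that ASINs are 10 uppercase letters or digits; other link shapes are not handled, and `asin_from_url` and `reviews_url` are hypothetical helper names.

```python
import re

# Assumption: product links look like .../dp/B06X3SSTD8, optionally followed by / or ?
ASIN_PATTERN = re.compile(r"/dp/([A-Z0-9]{10})(?:[/?]|$)")

def asin_from_url(product_url):
    """Return the ASIN from a /dp/ product URL, or None if it isn't found."""
    match = ASIN_PATTERN.search(product_url)
    return match.group(1) if match else None

def reviews_url(asin, domain="us.amazon.com"):
    """Build the product-reviews URL format used in the logged-in section."""
    return f"https://{domain}/product-reviews/{asin}/"
```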

If you're evaluating different tools for Amazon scraping, check out our comparison of the best Amazon scraper APIs to find the right solution for your needs.

Scrape.do handles the heavy lifting by providing proxies, geo-targeting, rendering, and session management—so you can focus on extracting the data you need.

Get 1000 free credits and start scraping →

FAQ

Can you view Amazon reviews without logging in?

No, you can't view all reviews of an Amazon product without logging in. Amazon limits the number of reviews visible to logged-out users.

However, Amazon recently increased the number of featured reviews displayed directly on the product page. You can extract these reviews to form an opinion about a product without any logins—which is exactly what our first method covers.

Is scraping Amazon reviews allowed by terms of service?

No. Amazon's Terms of Service explicitly prohibit "the use of data mining, robots, or similar data gathering and extraction tools."

That said, scraping public data like product pages and search results sits in a legal gray area. Since you never agreed to the ToS (you didn't log in), the enforceability of those terms is questionable in many jurisdictions.

But scraping reviews behind a login is different. You've actively agreed to the ToS by creating an account, and the data isn't publicly accessible. Doing this commercially or at scale can result in serious backlash—account bans, legal threats, or worse.

Our recommendation: stick to featured reviews on public product pages for any commercial use.
