How to Scrape Car Rental Prices from RentalCars.com in 2026

Full Stack Developer

RentalCars.com processes over 9 million bookings annually across 60,000 locations worldwide.

For travel comparison sites, booking platforms, or market analysts, this pricing data drives competitive advantage.

But RentalCars protects its data aggressively. The site blocks basic scraping attempts, requires complex search parameters, and serves different content formats depending on how you approach it.

Here's how to extract it reliably.

Why Scraping RentalCars.com is Complex

RentalCars operates as one of the world's largest car rental aggregators, so it handles massive traffic while protecting valuable pricing data from competitors.

They've built sophisticated defenses that make automated data extraction challenging.

Advanced Bot Detection Systems

RentalCars employs multiple layers of bot detection beyond basic rate limiting.

The platform analyzes request patterns, browser fingerprints, and session behavior to identify automated traffic.

Send requests without proper headers, cookies, or timing patterns and you'll get blocked before reaching any pricing data.

Dual Content Delivery System

Unlike simple websites, RentalCars serves content in two distinct formats:

- Clean JSON API for modern interfaces (preferred)

- HTML with embedded data for legacy support (fallback)

The JSON API returns structured data that's easy to parse, but requires precise parameter formatting and authentication handling.
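Because the same request can come back in either format, it helps to check what arrived before choosing a parser. The following is a minimal sketch, assuming the response body is already available as a string; `detect_format` is a hypothetical helper name, not part of any library.

```python
import json


def detect_format(body):
    """Return 'json', 'html', or 'unknown' for a response body string."""
    text = body.strip()
    if not text:
        return "unknown"
    try:
        json.loads(text)
        return "json"
    except json.JSONDecodeError:
        pass
    # Crude HTML check: a document or fragment starts with a tag
    if text.startswith("<"):
        return "html"
    return "unknown"


print(detect_format('{"matches": []}'))
print(detect_format("<html><body>Results</body></html>"))
```

Attempting the JSON parse first keeps the check cheap and avoids guessing from headers, which may not survive a proxy layer.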

Complex Search Parameter Requirements

RentalCars searches require multiple encoded parameters:

- Search criteria (JSON-encoded location, dates, driver age)

- Filter criteria (JSON-encoded sorting and filtering options)

- Service features (array of additional data requirements)

- Regional settings (currency and geo-location cookies)

Missing or incorrectly formatted parameters result in empty responses or errors.
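Since malformed parameters tend to fail silently, a quick local check before sending anything can save credits. This is a rough sketch: the function name `validate_search_inputs` and the specific rules (three-letter uppercase location code, `YYYY-MM-DD` dates, minimum age of 18) are assumptions for illustration, not documented RentalCars requirements.

```python
import datetime
import re


def validate_search_inputs(location_code, pickup_date, dropoff_date, driver_age):
    """Return a list of problems; an empty list means the inputs look usable."""
    problems = []
    # Assumed rule: locations are 3-letter airport codes such as 'CMN'
    if not re.fullmatch(r"[A-Z]{3}", location_code):
        problems.append("location_code should be a 3-letter code such as 'CMN'")
    try:
        pickup = datetime.date.fromisoformat(pickup_date)
        dropoff = datetime.date.fromisoformat(dropoff_date)
    except ValueError:
        problems.append("dates must use the YYYY-MM-DD format")
    else:
        if dropoff <= pickup:
            problems.append("dropoff_date must be after pickup_date")
    # Assumed rule: drivers must be adults
    if not isinstance(driver_age, int) or driver_age < 18:
        problems.append("driver_age should be an integer of at least 18")
    return problems


print(validate_search_inputs("CMN", "2025-09-13", "2025-09-20", 30))
print(validate_search_inputs("cmn", "2025-09-20", "2025-09-13", 30))
```

Returning a list of problems rather than raising on the first one makes it easier to report everything wrong with a batch of searches at once.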

We'll use Scrape.do to handle the bot detection while we focus on constructing the proper API calls and parsing the responses.

Prerequisites and Setup

We'll build a robust RentalCars scraper using Python with libraries for HTTP requests, JSON handling, and HTML parsing as backup.

Install Required Libraries

You need requests for API calls, BeautifulSoup for HTML parsing, and built-in libraries for JSON and date handling:

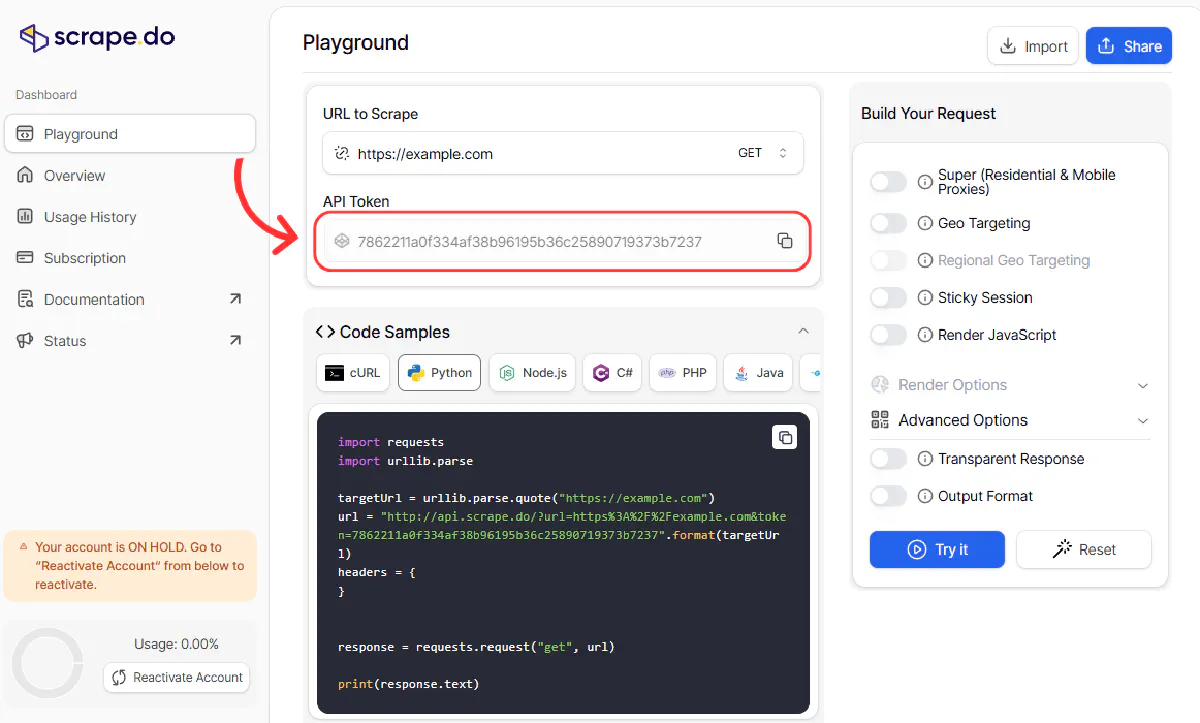

    pip install requests beautifulsoup4

Get Your Scrape.do Token

- Sign up for a free Scrape.do account - includes 1000 free credits monthly

- Copy your API token from the dashboard

This token handles bot detection, proxy rotation, and regional access automatically.
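Since the token is a credential, one option is to keep it out of the script and read it from the environment instead. A small sketch, assuming you export a variable named `SCRAPEDO_API_TOKEN`; both the variable name and the `load_token` helper are illustrative choices, not something Scrape.do requires.

```python
import os


def load_token(env_var="SCRAPEDO_API_TOKEN"):
    """Read the API token from the environment instead of hard-coding it."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return token
```

Calling `load_token()` at startup fails fast with a clear message when the variable is missing, rather than sending requests with a placeholder token.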

Basic Configuration

Set up your script with the essential configuration:

    import requests
    import urllib.parse
    import json
    import datetime
    import csv
    from bs4 import BeautifulSoup

    # Configuration
    SCRAPEDO_API_TOKEN = 'YOUR_TOKEN_HERE'
    # HTTPS keeps the token out of plain-text traffic
    API_URL_BASE = 'https://api.scrape.do/'


    def get_page_html(target_url):
        """
        Fetch content using Scrape.do with proper regional settings.
        """
        # Set currency and regional preferences
        currency_cookie = 'tj_conf="tj_pref_currency:EUR|tj_pref_lang:en|tjcor:gb|"'

        params = {
            'token': SCRAPEDO_API_TOKEN,
            'url': target_url,
            'super': 'true',
            'regionalGeoCode': 'europe',
            # urlencode() below percent-encodes every value once, so the
            # cookie is passed raw; pre-encoding it would double-encode it
            'SetCookies': currency_cookie
        }

        api_request_url = API_URL_BASE + '?' + urllib.parse.urlencode(params)

        try:
            response = requests.get(api_request_url, timeout=180)
            if response.status_code == 200:
                return response.text
            else:
                print(f"Request failed with status: {response.status_code}")
                return None
        except requests.exceptions.RequestException as e:
            print(f"Connection error: {e}")
            return None

This function handles the Scrape.do API call with proper cookie and regional settings.
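The function above returns `None` on any failure, so transient errors end the run immediately. One way to soften that is a retry wrapper with exponential backoff. This is a sketch under assumptions: `fetch_with_retries` is a hypothetical helper, and the attempt count and delays are arbitrary starting points rather than values recommended by Scrape.do.

```python
import random
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=2.0, sleep=time.sleep):
    """Call fetch(url) until it returns a non-None result or attempts run out."""
    for attempt in range(1, attempts + 1):
        result = fetch(url)
        if result is not None:
            return result
        if attempt < attempts:
            # Exponential backoff (2s, 4s, ...) with a little random jitter
            delay = base_delay * (2 ** (attempt - 1)) + random.uniform(0, 0.5)
            print(f"Attempt {attempt} failed, retrying in {delay:.1f}s")
            sleep(delay)
    return None
```

It wraps any callable that returns text or `None`, so it could be used as `fetch_with_retries(get_page_html, search_url)`. The `sleep` parameter exists only so the wait can be swapped out in tests.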

Target the JSON API for Clean Data

The most reliable approach is targeting RentalCars' JSON API directly rather than scraping HTML.

This API returns structured data that's much easier to parse and less likely to break when the website changes.

Construct Search Parameters

RentalCars API requires JSON-encoded search criteria and filter parameters:

    def build_search_url(location_code, pickup_date, dropoff_date, driver_age=30):
        """
        Build the RentalCars API search URL with proper parameters.
        """
        # Search criteria as a dictionary
        search_criteria = {
            "driversAge": driver_age,
            "pickUpLocation": location_code,
            "pickUpDateTime": f"{pickup_date}T10:00:00",
            "pickUpLocationType": "IATA",
            "dropOffLocation": location_code,  # Same as pickup for simplicity
            "dropOffLocationType": "IATA",
            "dropOffDateTime": f"{dropoff_date}T10:00:00",
            # searchMetadata is itself a JSON string nested inside the criteria
            "searchMetadata": json.dumps({
                "pickUpLocationName": f"{location_code} Airport",
                "dropOffLocationName": f"{location_code} Airport"
            })
        }

        # Filter criteria for sorting by price
        filter_criteria = {
            "sortBy": "PRICE",
            "sortAscending": True
        }

        # Encode parameters for URL
        search_json = urllib.parse.quote_plus(json.dumps(search_criteria))
        filter_json = urllib.parse.quote_plus(json.dumps(filter_criteria))

        # Construct the API endpoint URL
        api_url = (
            f"https://www.rentalcars.com/api/search-results?"
            f"searchCriteria={search_json}&"
            f"filterCriteria={filter_json}&"
            f"serviceFeatures=[\"RETURN_EXTRAS_IN_MULTI_CAR_RESPONSE\"]"
        )
        return api_url


    # Example usage (replace the dates with your own upcoming travel dates)
    location = "CMN"  # Casablanca airport code
    pickup_date = "2025-09-13"
    dropoff_date = "2025-09-20"

    search_url = build_search_url(location, pickup_date, dropoff_date)
    print("API URL:", search_url)

This creates a properly formatted API request URL with all required parameters.
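Encoded URLs are hard to read, so it can be worth decoding one back into dictionaries to confirm the parameters survived the round trip. The sketch below uses only the standard library; `decode_search_url` is a hypothetical helper, and the demo URL carries a shortened payload rather than the full criteria.

```python
import json
import urllib.parse


def decode_search_url(api_url):
    """Decode searchCriteria and filterCriteria back into dictionaries."""
    query = urllib.parse.urlsplit(api_url).query
    params = urllib.parse.parse_qs(query)
    return {
        name: json.loads(params[name][0])
        for name in ("searchCriteria", "filterCriteria")
        if name in params
    }


# Stand-in URL encoded the same way as above, with a shortened payload
criteria = urllib.parse.quote_plus(json.dumps({"pickUpLocation": "CMN", "driversAge": 30}))
filters = urllib.parse.quote_plus(json.dumps({"sortBy": "PRICE", "sortAscending": True}))
demo_url = (
    "https://www.rentalcars.com/api/search-results?"
    f"searchCriteria={criteria}&filterCriteria={filters}"
)

print(decode_search_url(demo_url))
```

If the decoded dictionaries do not match what you passed in, the encoding step is the first place to look when the API returns an empty response.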

Parse JSON Response Data

When the API returns JSON, extract the vehicle data using a structured approach:

    def extract_vehicle_data(json_data):
        """
        Extract vehicle information from RentalCars API JSON response.
        """
        vehicles = []
        matches = json_data.get('matches', [])

        if not matches:
            print("No vehicle matches found in response")
            return vehicles

        print(f"Found {len(matches)} vehicle options:")
        print("=" * 50)

        for i, match in enumerate(matches, 1):
            vehicle = match.get('vehicle', {})

            # Extract key information
            make_model = vehicle.get('makeAndModel', 'N/A')
            price_info = vehicle.get('price', {})
            amount = price_info.get('amount', 'N/A')
            currency = price_info.get('currency', '')

            # Format price
            if isinstance(amount, (int, float)):
                formatted_price = f"{amount:.2f} {currency}"
            else:
                formatted_price = f"{amount} {currency}"

            vehicle_data = {
                'Make And Model': make_model,
                'Price': formatted_price,
                'Currency': currency,
                'Raw Amount': amount
            }
            vehicles.append(vehicle_data)

            # Display progress
            print(f"{i:02d}. {make_model} - {formatted_price}")

        return vehicles


    def scrape_rental_prices_api(location, pickup_date, dropoff_date):
        """
        Main function to scrape rental prices using the API approach.
        """
        print(f"Scraping rental prices for {location}...")
        print(f"Pickup: {pickup_date}, Dropoff: {dropoff_date}")

        # Build API URL
        search_url = build_search_url(location, pickup_date, dropoff_date)

        # Fetch data through Scrape.do
        response_text = get_page_html(search_url)
        if not response_text:
            print("Failed to fetch data")
            return None

        # Try parsing as JSON
        try:
            json_data = json.loads(response_text)
            vehicles = extract_vehicle_data(json_data)
            return vehicles
        except json.JSONDecodeError:
            print("Response is not JSON - API might have returned HTML")
            return None

This approach prioritizes the clean JSON data when available.
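Once the amounts are extracted, a per-day figure is often easier to compare across rental lengths. The sketch below assumes that `amount` is the total for the whole rental, which the response itself does not confirm. The sample payload is made up and mirrors only the fields read by the parser above; `price_per_day` is a hypothetical helper.

```python
import datetime


def price_per_day(amount, pickup_date, dropoff_date):
    """Divide a total rental price by the number of rental days."""
    days = (datetime.date.fromisoformat(dropoff_date)
            - datetime.date.fromisoformat(pickup_date)).days
    if days <= 0:
        raise ValueError("dropoff_date must be after pickup_date")
    return round(amount / days, 2)


# Hypothetical payload with invented values, for illustration only
sample = {
    "matches": [
        {"vehicle": {"makeAndModel": "Fiat 500", "price": {"amount": 210.0, "currency": "EUR"}}},
        {"vehicle": {"makeAndModel": "Dacia Logan", "price": {"amount": 175.0, "currency": "EUR"}}},
    ]
}

for match in sample["matches"]:
    vehicle = match["vehicle"]
    daily = price_per_day(vehicle["price"]["amount"], "2025-09-13", "2025-09-20")
    print(f'{vehicle["makeAndModel"]}: {daily} {vehicle["price"]["currency"]} per day')
```

If the API turns out to report a daily rate instead, this step is unnecessary, so it is worth checking one listing against the website before relying on it.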

HTML Fallback for Robust Scraping

Sometimes RentalCars returns HTML instead of JSON, especially under certain conditions or for specific regions.

Having an HTML parsing fallback ensures your scraper works consistently.

Parse HTML Vehicle Listings

When you get HTML responses, extract data using CSS selectors:

    def parse_html_vehicle_data(html_content):
        """
        Parse vehicle data from HTML when JSON API is not available.
        """
        soup = BeautifulSoup(html_content, 'html.parser')
        vehicles = []

        # Target vehicle cards using data attributes
        car_listings = soup.select('[data-testid="car-card"]')
        print(f"Found {len(car_listings)} vehicle listings in HTML")

        for car in car_listings:
            try:
                # Extract using specific selectors
                price_element = car.select_one('[data-testid="price-main-price"]')
                name_element = car.select_one('[data-testid="car-name"]')
                supplier_element = car.select_one('[data-testid="supplier-logo"]')

                # Get text content
                price = price_element.text.strip() if price_element else 'N/A'
                car_name = name_element.text.strip() if name_element else 'N/A'
                supplier = supplier_element.get('alt', 'N/A') if supplier_element else 'N/A'

                vehicle_data = {
                    'Supplier': supplier,
                    'Car Name': car_name,
                    'Price': price
                }
                vehicles.append(vehicle_data)

            except (AttributeError, KeyError) as e:
                print(f"Warning: Could not parse a vehicle listing: {e}")
                continue

        return vehicles


    def scrape_with_html_fallback(location, pickup_date, dropoff_date):
        """
        Fallback method using HTML parsing when API fails.
        """
        # Construct traditional search URL (not API endpoint)
        search_url = (
            f"https://www.rentalcars.com/SearchResults.do?"
            f"locationCode={location}&"
            f"driversAge=30&"
            f"puDate={pickup_date.replace('-', '/')}&"
            f"puTime=10:00&"
            f"doDate={dropoff_date.replace('-', '/')}&"
            f"doTime=10:00"
        )

        response_text = get_page_html(search_url)
        if not response_text:
            return None

        return parse_html_vehicle_data(response_text)

This provides a backup method when the API approach doesn't work.
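Prices scraped from HTML arrive as display text, so they usually need cleaning before they can be compared with the numeric API amounts. A minimal sketch, assuming the text uses `,` as a thousands separator and `.` as the decimal point; the actual format on the page may differ by locale, and `parse_price_text` is a hypothetical helper.

```python
import re
from decimal import Decimal


def parse_price_text(text):
    """Extract a Decimal amount from text such as '€1,234.50' or 'EUR 89'."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if not match:
        return None
    # Assumes ',' is a thousands separator and '.' the decimal point
    return Decimal(match.group(0).replace(",", ""))


print(parse_price_text("€1,234.50"))
print(parse_price_text("N/A"))
```

`Decimal` is used instead of `float` so that currency values keep their exact two-decimal representation.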

Export Data to CSV

Once you've extracted vehicle data, save it in a structured format for analysis:

    def save_vehicles_to_csv(vehicles, location_code, data_type="api"):
        """
        Save vehicle data to CSV with appropriate filename.
        """
        if not vehicles:
            print("No vehicle data to save")
            return

        # Generate filename with date and data source
        today = datetime.date.today()
        filename = f"rentalcars_{data_type}_{location_code}_{today}.csv"

        # Determine fieldnames based on data structure
        if vehicles and 'Make And Model' in vehicles[0]:
            fieldnames = ['Make And Model', 'Price', 'Currency']
        else:
            fieldnames = ['Supplier', 'Car Name', 'Price']

        with open(filename, 'w', newline='', encoding='utf-8') as csvfile:
            # extrasaction='ignore' skips keys not listed in fieldnames
            # (such as 'Raw Amount'); the default would raise ValueError
            writer = csv.DictWriter(csvfile, fieldnames=fieldnames, extrasaction='ignore')
            writer.writeheader()
            writer.writerows(vehicles)

        print(f"✅ Saved {len(vehicles)} vehicles to '{filename}'")
        return filename

This creates dated CSV files with appropriate column headers.
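With the data in CSV form, a quick summary is a useful sanity check before any deeper analysis. The sketch below assumes the `Price` column holds values like `210.00 EUR`, as produced by the API parser above; `summarize_prices` is a hypothetical helper, and the demo rows are invented.

```python
import csv
import io


def summarize_prices(csv_file):
    """Return (count, cheapest, average) for numeric values in the Price column."""
    prices = []
    for row in csv.DictReader(csv_file):
        try:
            # Keep only the leading number from values like '210.00 EUR'
            prices.append(float(row["Price"].split()[0]))
        except (KeyError, ValueError, IndexError):
            continue  # skip rows without a usable price
    if not prices:
        return 0, None, None
    return len(prices), min(prices), round(sum(prices) / len(prices), 2)


# In-memory stand-in for a saved file, with invented rows
demo = io.StringIO(
    "Make And Model,Price,Currency\n"
    "Fiat 500,210.00 EUR,EUR\n"
    "Dacia Logan,175.00 EUR,EUR\n"
    "Unknown,N/A,\n"
)
print(summarize_prices(demo))
```

For a real file, pass an open handle instead, for example `open(filename, newline='', encoding='utf-8')`.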

Complete Working Script

Here's the full script that combines all approaches with proper error handling:

    import requests
    import urllib.parse
    import json
    import datetime
    import csv
    from bs4 import BeautifulSoup

    # Configuration
    SCRAPEDO_API_TOKEN = 'YOUR_TOKEN_HERE'
    # HTTPS keeps the token out of plain-text traffic
    API_URL_BASE = 'https://api.scrape.do/'


    def get_page_html(target_url):
        """Fetch content using Scrape.do with regional settings."""
        currency_cookie = 'tj_conf="tj_pref_currency:EUR|tj_pref_lang:en|tjcor:gb|"'

        params = {
            'token': SCRAPEDO_API_TOKEN,
            'url': target_url,
            'super': 'true',
            'regionalGeoCode': 'europe',
            # urlencode() below encodes each value once, so pass the cookie raw
            'SetCookies': currency_cookie
        }

        api_request_url = API_URL_BASE + '?' + urllib.parse.urlencode(params)

        try:
            response = requests.get(api_request_url, timeout=180)
            if response.status_code == 200:
                print("✅ Successfully fetched data")
                return response.text
            else:
                print(f"❌ Request failed: {response.status_code}")
                return None
        except requests.exceptions.RequestException as e:
            print(f"❌ Connection error: {e}")
            return None


    def build_api_search_url(location_code, pickup_date, dropoff_date, driver_age=30):
        """Build RentalCars API URL with proper JSON parameters."""
        search_criteria = {
            "driversAge": driver_age,
            "pickUpLocation": location_code,
            "pickUpDateTime": f"{pickup_date}T10:00:00",
            "pickUpLocationType": "IATA",
            "dropOffLocation": location_code,
            "dropOffLocationType": "IATA",
            "dropOffDateTime": f"{dropoff_date}T10:00:00",
            "searchMetadata": json.dumps({
                "pickUpLocationName": f"{location_code} Airport",
                "dropOffLocationName": f"{location_code} Airport"
            })
        }

        filter_criteria = {
            "sortBy": "PRICE",
            "sortAscending": True
        }

        search_json = urllib.parse.quote_plus(json.dumps(search_criteria))
        filter_json = urllib.parse.quote_plus(json.dumps(filter_criteria))

        return (
            f"https://www.rentalcars.com/api/search-results?"
            f"searchCriteria={search_json}&"
            f"filterCriteria={filter_json}&"
            f"serviceFeatures=[\"RETURN_EXTRAS_IN_MULTI_CAR_RESPONSE\"]"
        )


    def extract_api_vehicle_data(json_data):
        """Extract vehicle data from API JSON response."""
        vehicles = []
        matches = json_data.get('matches', [])
        print(f"📦 Found {len(matches)} vehicles from API")

        for i, match in enumerate(matches, 1):
            vehicle = match.get('vehicle', {})
            price_info = vehicle.get('price', {})

            make_model = vehicle.get('makeAndModel', 'N/A')
            amount = price_info.get('amount', 'N/A')
            currency = price_info.get('currency', '')

            if isinstance(amount, (int, float)):
                formatted_price = f"{amount:.2f}"
            else:
                formatted_price = str(amount)

            vehicles.append({
                'Make And Model': make_model,
                'Price': formatted_price,
                'Currency': currency
            })

            print(f"{i:02d}. {make_model} - {formatted_price} {currency}")

        return vehicles


    def parse_html_vehicles(html_content):
        """Parse vehicles from HTML when API returns HTML."""
        soup = BeautifulSoup(html_content, 'html.parser')
        vehicles = []

        car_listings = soup.select('[data-testid="car-card"]')
        print(f"📦 Found {len(car_listings)} vehicles from HTML")

        for car in car_listings:
            try:
                price_elem = car.select_one('[data-testid="price-main-price"]')
                name_elem = car.select_one('[data-testid="car-name"]')
                supplier_elem = car.select_one('[data-testid="supplier-logo"]')

                vehicles.append({
                    'Supplier': supplier_elem.get('alt', 'N/A') if supplier_elem else 'N/A',
                    'Car Name': name_elem.text.strip() if name_elem else 'N/A',
                    'Price': price_elem.text.strip() if price_elem else 'N/A'
                })
            except (AttributeError, KeyError):
                continue

        return vehicles


    def save_to_csv(vehicles, location_code, data_source):
        """Save vehicle data to CSV."""
        if not vehicles:
            print("No data to save")
            return

        filename = f"rentalcars_{data_source}_{location_code}_{datetime.date.today()}.csv"

        if vehicles and 'Make And Model' in vehicles[0]:
            fieldnames = ['Make And Model', 'Price', 'Currency']
        else:
            fieldnames = ['Supplier', 'Car Name', 'Price']

        with open(filename, 'w', newline='', encoding='utf-8') as f:
            writer = csv.DictWriter(f, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(vehicles)

        print(f"✅ Saved to {filename}")


    def main():
        """Main scraping function with API-first approach and HTML fallback."""
        print("🚗 RentalCars.com Scraper Starting...")

        # Configuration (replace the dates with your own upcoming travel dates)
        location = "CMN"  # Casablanca
        pickup_date = "2025-09-13"
        dropoff_date = "2025-09-20"

        print(f"Target: {location} from {pickup_date} to {dropoff_date}")

        # Try API approach first
        api_url = build_api_search_url(location, pickup_date, dropoff_date)
        response_text = get_page_html(api_url)

        if not response_text:
            print("❌ Failed to get response")
            return

        # Try parsing as JSON (API response)
        try:
            json_data = json.loads(response_text)
            print("✅ API JSON response detected")
            vehicles = extract_api_vehicle_data(json_data)
            save_to_csv(vehicles, location, "api")
            return
        except json.JSONDecodeError:
            print("⚠️ Not JSON - trying HTML parsing...")

        # Fallback to HTML parsing
        vehicles = parse_html_vehicles(response_text)
        if vehicles:
            save_to_csv(vehicles, location, "html")
        else:
            print("❌ No vehicles found in HTML either")


    if __name__ == "__main__":
        main()

Conclusion

Scraping RentalCars.com requires handling multiple content formats, complex parameter encoding, and sophisticated bot detection systems.

The API-first approach gives you clean, structured data that's easy to parse and less likely to break. The HTML fallback ensures reliability when the API behaves unexpectedly.

Scrape.do eliminates the technical barriers - bot detection, proxy management, and regional access - so you can focus on extracting the rental data you need for price monitoring, competitive analysis, or travel platform integration.

This dual approach scales from single location searches to comprehensive market analysis across hundreds of destinations.
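To go from one location to many, the single-location logic can be wrapped in a loop that tolerates individual failures. This is a sketch only: `scrape_locations` is a hypothetical helper, the one-second pause is an arbitrary default, and `scrape_one` stands for whatever single-location function you use.

```python
import time


def scrape_locations(locations, scrape_one, pause_seconds=1.0, sleep=time.sleep):
    """Run scrape_one(location) for each location and collect results by code."""
    results = {}
    for index, location in enumerate(locations):
        try:
            results[location] = scrape_one(location) or []
        except Exception as exc:  # keep going if one destination fails
            print(f"{location}: failed with {exc}")
            results[location] = []
        if index < len(locations) - 1:
            sleep(pause_seconds)  # small pause between destinations
    return results
```

For example, `scrape_one` could be `lambda code: scrape_rental_prices_api(code, pickup_date, dropoff_date)`, with the returned dictionary mapping each location code to its list of vehicles.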
