17 Key Python Libraries for Web Scraping (2025 list)

Full Stack Developer

The vast majority of web scraping is done in Python.

The reason is simple: Python has a massive community and a rich ecosystem of libraries for all sorts of use cases.

In this post, we’ll look at the most important of these libraries, grouped by category.

Let's get started:

HTTP Clients

These tools will handle the low-level work of making HTTP requests to websites.

They are perfect for fetching HTML pages or API data when you don’t need a full browser engine.

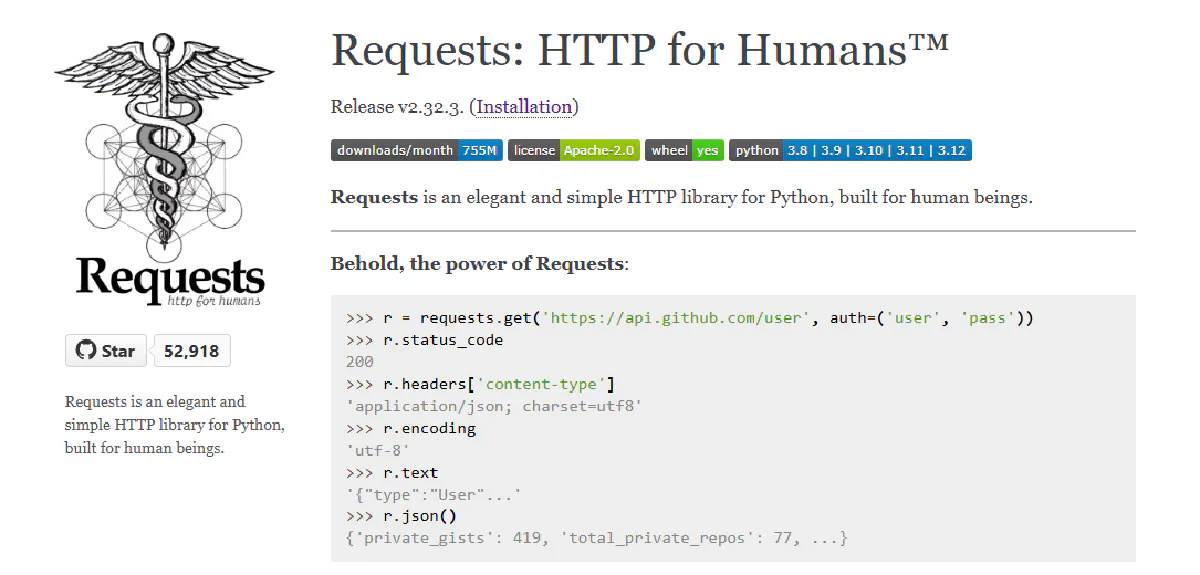

1. Requests

- GitHub Stars: ~52.9K (one of the most popular Python packages ever)

- Highlight: Simple and intuitive API for sending HTTP requests

- Best for: Quick, reliable retrieval of static web pages and APIs

- Official Docs: Requests Documentation

Requests is the go-to Python HTTP library that abstracts away the complexities of sending HTTP/1.1 requests.

It’s incredibly user-friendly; for example, fetching a page is as easy as requests.get(url).

As a result, Requests has become a staple in web scraping and is depended on by over 1 million repositories, pulling in tens of millions of downloads per week.

This library is best used for static sites and REST APIs where data is returned directly in HTML or JSON format.

It supports features like persistent sessions (with cookies), file uploads, SSL verification, and JSON response parsing out of the box. Each request is straightforward: you get the content, status, headers in one call, making it ideal for scripts that need to fetch pages and parse them without any client-side rendering.

However, Requests cannot execute JavaScript or simulate a browser since it’s purely an HTTP client. This means it will struggle with dynamic content that requires a real browser to render.

Requests is also synchronous, so each call blocks until the response arrives. You can run it in a thread pool, but it has no native asyncio support, so for high concurrency you might consider alternatives such as HTTPX or AIOHTTP.
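To make the session, status, and header handling described above concrete, here is a minimal sketch. The helper names build_session and fetch_page, the User-Agent string, and the example.com URLs are illustrative assumptions, not anything prescribed by Requests.

```python
import requests


def build_session(user_agent="my-scraper/0.1 (hypothetical)"):
    """Create a Session that reuses connections and keeps cookies between calls."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


def fetch_page(session, url, params=None, timeout=10):
    """Fetch a page and return (status_code, content_type, body_text)."""
    response = session.get(url, params=params, timeout=timeout)
    # Raise on 4xx/5xx so an error page is never parsed as real content.
    response.raise_for_status()
    return response.status_code, response.headers.get("Content-Type"), response.text
```

Calling fetch_page(build_session(), "https://example.com") performs a real request. For JSON APIs, response.json() would take the place of response.text.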

2. HTTPX

- GitHub Stars: ~14.1K

- Highlight: Supports async and HTTP/2, while maintaining a Requests-like API

- Best for: High-performance scraping with concurrency and newer HTTP features

- Official Docs: HTTPX Documentation

HTTPX is a next-generation HTTP client designed to be as comfortable as Requests, but with extra powers. It provides both a synchronous API (mirroring Requests) and an asynchronous API for integration with asyncio. This dual nature means you can gradually adopt async scraping to fetch multiple pages in parallel without blocking. HTTPX also supports HTTP/2 (an optional extra that you enable with http2=True on the client) and proxy configuration, both of which can be crucial for scraping modern websites.

In practice, HTTPX shines for scenarios where you need concurrency or HTTP/2. For example, you can fire off dozens of requests concurrently in an async event loop, dramatically improving throughput when scraping large numbers of pages or API endpoints. It also inherits many conveniences from Requests (like sessions, SSL verification, and cookie handling), so the learning curve is minimal for existing Requests users.

The main downside is that HTTPX is newer and slightly less mature than Requests. While it’s very stable, you might encounter the occasional quirk with emerging features like HTTP/2. Also, using the async capabilities requires familiarity with asyncio, which adds complexity if you’re coming from a synchronous mindset. That said, for most use cases HTTPX is as easy to use as Requests, and the payoff in performance is well worth it when scraping at scale.

3. AIOHTTP

- GitHub Stars: ~15K (robust async HTTP client/server framework)

- Highlight: Fully asynchronous HTTP client with WebSocket support

- Best for: Advanced users who need to scrape with asyncio and stream data

- Official Docs: AIOHTTP Documentation

AIOHTTP is an asynchronous HTTP framework that includes a client for making requests as well as a web server (not needed for scraping). As a client, AIOHTTP lets you leverage Python’s asyncio to perform a large number of requests concurrently. It’s particularly useful if you want fine-grained control over the request/response cycle – for example, streaming response data, handling websockets, or controlling connection pooling and timeouts in detail.

This library is best suited for highly concurrent scraping tasks. You can create an aiohttp ClientSession and gather many GET requests at once, which is useful when scraping paginated data or calling many API endpoints. It’s also one of the few scraping libraries that supports WebSockets out of the box (useful if you need to scrape data from sites that push updates via WebSocket).

The trade-off with AIOHTTP is complexity. Being a low-level framework, it requires you to manage the event loop or use it within an async function. Beginners might find it less straightforward than Requests/HTTPX. Additionally, because it’s so feature-rich, the documentation can be heavy – you often only need a subset of its capabilities. For most standard scraping scenarios, HTTPX might be simpler, but AIOHTTP remains a powerful option for those who need its flexibility or are already working in an async environment.
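The ClientSession-plus-gather pattern mentioned above might look like the following sketch. The function names and the 30-second total timeout are assumptions chosen for illustration.

```python
import asyncio

import aiohttp


async def fetch_text(session, url):
    """GET one URL and return its body as text."""
    async with session.get(url) as response:
        response.raise_for_status()
        return await response.text()


async def fetch_many(urls, timeout_seconds=30):
    """Fetch several URLs concurrently over one shared ClientSession."""
    timeout = aiohttp.ClientTimeout(total=timeout_seconds)
    async with aiohttp.ClientSession(timeout=timeout) as session:
        # gather() returns the bodies in the same order as the input URLs.
        return await asyncio.gather(*(fetch_text(session, url) for url in urls))
```

You would call it with asyncio.run(fetch_many([...])). Sharing a single session matters here because the session owns the connection pool.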

4. urllib3

- GitHub Stars: ~3.8K (core HTTP library powering many higher-level clients)

- Highlight: Low-level HTTP client with strong reliability and performance

- Best for: Situations requiring fine control or as a foundation for custom tools

- Official Docs: urllib3 Documentation

urllib3 is the underlying HTTP library that many Python tools (like Requests) use under the hood. You typically won’t use urllib3 for everyday scraping tasks because libraries like Requests wrap it in a more user-friendly API. However, urllib3 offers a solid, lower-level interface to HTTP and can be directly useful if you need extra control over connections or to avoid the abstractions that higher-level clients impose.

Key features of urllib3 include connection pooling, pluggable SSL configurations, proxy support, and retries with backoff. It’s extremely reliable: many of the “hard parts” of HTTP (chunked transfers, redirects, keep-alive) are handled gracefully. For example, Requests relies on urllib3 internally for robust HTTP behavior, whereas Scrapy ships its own Twisted-based downloader.

The downside is that using urllib3 directly means writing more boilerplate (it’s not as simple as requests.get() for basic use). You have to create and configure a PoolManager, call its request() method, decode the response bytes yourself, and so on. This is only worth doing in edge cases, for instance if you’re writing your own scraping framework or you need to intercept low-level HTTP events. In most cases, you’ll stick with the higher-level clients, but it’s good to know that urllib3 is the rock-solid engine under the hood of much of Python’s web scraping ecosystem.
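For a sense of what using urllib3 directly involves, here is a small sketch that wires up connection pooling, retries with backoff, and timeouts. The specific retry counts, status codes, and timeout values are arbitrary assumptions, as are the helper names.

```python
import urllib3
from urllib3.util import Retry, Timeout


def build_pool():
    """Create a PoolManager with retry and timeout policies applied to every request."""
    retries = Retry(
        total=3,
        backoff_factor=0.5,  # wait progressively longer between attempts
        status_forcelist=[429, 500, 502, 503, 504],
    )
    timeout = Timeout(connect=5.0, read=10.0)
    return urllib3.PoolManager(retries=retries, timeout=timeout)


def fetch_text(pool, url):
    """Return (status, body_text); urllib3 hands back raw bytes, so decode them here."""
    response = pool.request("GET", url)
    return response.status, response.data.decode("utf-8", errors="replace")
```

Compared with Requests, the visible differences are the explicit pool object and the manual decoding step.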

Parsing and Data Extraction

Once you’ve fetched HTML content, these libraries help parse the raw text into a structured format (like a DOM) so you can navigate and extract the data you need.

5. Beautiful Soup (bs4)

- Popularity: Millions of downloads; de facto standard for HTML parsing in Python

- Highlight: Very simple API for navigating and searching HTML/XML parse trees

- Best for: Beginners or quick projects to extract data from static HTML

- Official Docs: Beautiful Soup Documentation

Beautiful Soup is a Python library for pulling data out of HTML and XML files. It works with a parser (like Python’s built-in parser, or lxml’s parser) underneath, but provides Pythonic idioms to search and navigate the parse tree. With Beautiful Soup, you can find elements by tag name, attribute, or CSS selector, and easily extract text or attributes. XPath is not supported, even when lxml is the underlying parser; for XPath queries, use lxml directly. It’s very forgiving of bad HTML, which is great because real-world web pages are often messy.

This library is ideal for projects of any size when starting out, thanks to its gentle learning curve. With just a few lines, you can parse a downloaded page and do things like soup.find('div', {'class': 'price'}) to get an element. Beautiful Soup excels at handling poorly formed HTML, and it supports different parsing engines (you can choose one for speed or strictness). It’s especially handy for one-off scripts or smaller scale crawls where ease of use matters more than raw performance.

On the flip side, Beautiful Soup is not the fastest parser. For large documents or extremely high volumes of pages, it’s known to be slower compared to lower-level parsing libraries. It also doesn’t fetch pages itself – it relies on an HTTP client (like Requests) to get the content first. In terms of capabilities, it’s limited to parsing HTML/XML; it won’t execute JavaScript or handle dynamic content. In summary, Beautiful Soup is a fantastic tool to have in your arsenal, especially for quick parsing tasks, but you might swap it out for faster alternatives if you hit performance bottlenecks.
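Here is a short sketch of the find-and-select style described above, run against an inline HTML string rather than a live page. The markup, class names, and the extract_products helper are made up for the example; the second product block is deliberately left unclosed to show the tolerant parsing.

```python
from bs4 import BeautifulSoup

HTML = """
<html><body>
  <div class="product"><h2>Widget</h2><div class="price">$9.99</div></div>
  <div class="product"><h2>Gadget</h2><div class="price">$19.50</div>
</body></html>
"""


def extract_products(html):
    """Return a list of {"name", "price"} dicts, one per product block."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser, no extra install
    products = []
    for product in soup.select("div.product"):  # CSS selector lookup
        name = product.find("h2").get_text(strip=True)
        price = product.find("div", {"class": "price"}).get_text(strip=True)
        products.append({"name": name, "price": price})
    return products
```

In a real scraper, the html argument would be the response.text returned by your HTTP client.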

6. lxml

- GitHub Stars: ~2.8K (long-standing library for XML/HTML processing)

- Highlight: C-based parser that is very fast and supports XPath & CSS selectors

- Best for: Performance-intensive parsing, or when you need XML support and advanced querying

- Official Docs: lxml Documentation

lxml is a high-performance HTML and XML parsing library that provides a Pythonic API on top of libxml2 and libxslt (C libraries). It can parse HTML extremely quickly and create an element tree that you can navigate. lxml supports both XPath and CSS selectors for finding elements, which makes it very powerful for extracting data. Many other tools (like Scrapy’s selectors or Beautiful Soup’s lxml parser option) use lxml under the hood for speed and compliance.

The strengths of lxml show in large-scale or speed-critical projects. If you need to parse thousands of pages as fast as possible, lxml’s C-backed performance is a major advantage. It also handles XML parsing (including validation, XSLT, etc.), so it’s great if you’re scraping data that comes as XML or need to mix HTML parsing with XML processing. The library is quite feature-rich – for example, you can use .xpath() or .cssselect() on an lxml element to perform very precise queries, enabling complex data extraction from HTML trees.

One thing to note is that lxml, being lower-level, is less beginner-friendly than Beautiful Soup. You have to be comfortable with the concept of an element tree and sometimes deal with bytes vs strings for encodings. Also, installing lxml can be a hurdle on some systems, since it needs a C compiler when no pre-built wheel is available. In terms of limitations, lxml doesn’t run JavaScript (it only parses the markup it is given), and very occasionally it can be strict about HTML correctness (though it has an html5parser for dealing with messy HTML). Overall, lxml is a powerhouse for parsing, the engine behind a lot of other scraping tools, and is recommended when performance and advanced HTML queries are needed.
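A minimal sketch of XPath extraction with lxml follows. The markup and the extract_links helper are hypothetical. The example sticks to .xpath() because .cssselect() additionally requires the separate cssselect package.

```python
from lxml import html

PAGE = b"""
<html><body>
  <ul id="articles">
    <li><a href="/post/1">First post</a></li>
    <li><a href="/post/2">Second post</a></li>
  </ul>
</body></html>
"""


def extract_links(page_bytes):
    """Return (link_text, href) pairs for every article link."""
    tree = html.fromstring(page_bytes)  # accepts bytes or str
    anchors = tree.xpath('//ul[@id="articles"]/li/a')
    return [(a.text_content().strip(), a.get("href")) for a in anchors]
```

Passing bytes (for example response.content from Requests) lets lxml pick up the encoding declared in the document, if there is one.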

7. Selectolax

- GitHub Stars: ~1.3K (newer project focusing on speed)

- Highlight: Blazing fast HTML5 parser using C libraries (Modest & Lexbor) under the hood

- Best for: Scenarios where parsing speed is critical (large pages or very many pages)

- Official Docs: Selectolax ReadTheDocs

Selectolax is an ultra-fast HTML parser that has been turning heads for its performance. Built on the Modest and Lexbor parsing engines (written in C), it often outperforms older libraries like Beautiful Soup and lxml on large or complex pages. Selectolax provides a convenient API with CSS selectors to navigate the HTML, much like you would with Beautiful Soup’s select() or lxml’s cssselect(). This means you get speed without having to write low-level parsing code.

This library is best used when efficiency and speed are paramount. If you need to scrape in near real-time or process massive datasets of HTML, Selectolax can offer significant advantages. Benchmarks have shown it can parse HTML several times faster than Beautiful Soup in many cases. Despite its focus on speed, it remains fairly easy to use: you create an HTMLParser tree and then query it for elements or text content.

Being a newer library, Selectolax’s downsides include that it’s not as battle-tested as the veterans. It may not support every obscure HTML quirk or edge-case JavaScript-generated content (it strictly parses HTML). In fact, some advanced CSS selectors or malformed HTML might not be handled perfectly yet. Additionally, because it leverages C libraries, you’ll need a compatible environment (most of the time this is fine via pip wheels). In summary, if parsing speed is your bottleneck, Selectolax is a compelling option – just keep an eye on its development if you run into an HTML snippet it can’t handle, as the project is actively improving.

Headless Browsers

When a site heavily uses JavaScript or requires simulating user interactions, these tools provide a real (or headless) browser environment so you can scrape the rendered HTML after scripts run.

8. Selenium

- GitHub Stars: ~32K (for the Selenium project overall)

- Highlight: Automates actual web browsers (Chrome, Firefox, etc.) via WebDriver

- Best for: Scraping complex, JavaScript-driven sites or scenarios requiring clicks, form submissions, etc.

- Official Docs: Selenium for Python

Selenium is a browser automation framework that allows you to programmatically control a web browser. With Selenium’s Python bindings, you can launch a browser (Chrome, Firefox, etc.), navigate to pages, and interact with the page just as a human would – clicking buttons, filling forms, scrolling, and more. For web scraping, this means you can get fully rendered pages including content that’s loaded via JavaScript. Selenium can operate in visible mode for debugging, or headless mode for performance (headless Chrome/Firefox runs without a GUI).

Use Selenium when you need to scrape highly dynamic websites – for example, pages that load data on scroll, or sites behind login forms and complex user interactions. It’s also useful if you need to scrape a site that aggressively uses anti-scraping JavaScript; since Selenium is literally controlling a real browser, it can slip past some front-end detection (though not all anti-bot measures). Another advantage is its support for multiple browsers and even different programming languages (if you ever need to port your scraping to another ecosystem).

The major downsides of Selenium are performance and overhead. Controlling a full browser is much heavier than sending HTTP requests. It consumes more memory and CPU, and launching browsers can be slow. If you have to scrape thousands of pages, doing it via Selenium will be far slower than using HTTP clients, so it’s recommended only when necessary. Additionally, setting up Selenium can be a bit involved – you often need to manage WebDriver executables (or use tools like Selenium Manager), and handling timeouts or waiting for elements requires careful coding to avoid errors. Finally, some anti-bot systems still recognize automation (e.g., via specific browser tells), so you might need to combine Selenium with stealth plugins or proxies for best results. In short, Selenium is powerful and versatile, but you pay for that power with complexity and resource usage.

9. Playwright

- GitHub Stars: ~13K (official Microsoft-backed browser automation library)

- Highlight: Modern browser automation with multi-browser support and auto-waiting features

- Best for: Scraping modern web apps with fewer headaches, since Playwright handles waits and supports both headless and headed modes

- Official Docs: Playwright for Python

Playwright is a newer entrant to browser automation, originally developed by Microsoft. It provides an API to control Chromium, Firefox, and WebKit browsers with one consistent library, and it has excellent Python support. For scraping, Playwright offers the ability to interact with a page’s JavaScript environment easily – you can evaluate scripts in the page context, intercept network requests, and more. It also has smart waiting built-in, which means it can automatically wait for elements to appear or network calls to finish (reducing the need for manual sleep calls).

The strengths of Playwright appear in scraping Single Page Applications (SPAs) or any site where content loads dynamically. Because it was designed with testing in mind, it excels at waiting for the page to be ready. For example, you can navigate to a page and use page.text_content("selector") to get content, and Playwright will ensure that selector is present before returning. It also supports headless mode by default and even allows control of multiple contexts/pages simultaneously, which can be used to parallelize scraping within one process.

One limitation of Playwright is that it’s a bit heavy to install: after installing the package, you also run playwright install to download the browser engines. Also, like Selenium, it’s running real browsers under the hood, so it will always be slower than a pure HTTP approach. Another consideration is that Playwright is younger than Selenium, so while it’s quickly growing, the community is not as large yet (though it’s very active). In practice, many scrapers find Playwright to be more convenient and reliable than Selenium for modern sites. Its Python API comes in two flavors, a synchronous one and an asyncio-based one, so you can pick whichever matches the rest of your code. Overall, Playwright is a top choice in 2026 for scraping dynamic sites, offering a fresh take on browser automation that often results in less flaky scripts compared to Selenium.

10. Requests-HTML

- GitHub Stars: 10.5K (was popular, now archived)

- Highlight: All-in-one solution combining Requests (for HTTP), BeautifulSoup (for parsing), and a headless browser for JavaScript

- Best for: Simple projects that need a bit of JS rendering without the complexity of full Selenium/Playwright

- Official Docs: Requests-HTML Documentation (archived)

Requests-HTML is an interesting hybrid library created by the author of Requests. It aims to make web scraping as simple as calling HTMLSession().get(url), but with the ability to execute JavaScript. Under the hood, it uses Requests for fetching pages, Beautiful Soup for parsing, and Pyppeteer (a headless Chrome driver) for JavaScript rendering when needed. With Requests-HTML, you can do things like response.html.render() to run JS on a page, then use .find() with CSS selectors to extract elements, all in one workflow.

This tool is great for small-scale scrapers or quick scraping scripts where you occasionally need JS rendering but don’t want to dive into full browser automation frameworks. For example, if 90% of your target pages are static and 10% need a script executed, Requests-HTML lets you stay in one library: you can parse the static pages quickly, and for the dynamic ones, just call .render() and it will spin up a headless browser to get the updated HTML. It also has nice convenience methods (.find() for CSS selectors, .xpath() for XPath, and .search() for template-style text matching) that make data extraction pretty straightforward.

However, Requests-HTML is no longer actively maintained – the repository has been archived. This means that its JavaScript rendering (powered by Pyppeteer) may not keep up with the latest Chrome changes. In some environments it can be tricky to get the rendering to work (it needs an event loop for Pyppeteer). Essentially, while the idea is great, you might encounter issues with modern sites if the underlying headless browser is outdated. Another limitation is performance: rendering with Pyppeteer is heavy, so you wouldn’t want to use this for large-scale crawling. In summary, Requests-HTML can be a convenient tool for one-off tasks or learning purposes, but be cautious with it in 2026 as it may have compatibility issues moving forward.

Anti-Bot Bypass and CAPTCHA Handling

Modern websites often deploy anti-scraping measures like CAPTCHAs, IP blocking, and bot detection. The following tools and services help bypass those defenses so your scraper can keep working.

11. Scrape.do

- GitHub Stars: N/A (commercial API service)

- Highlight: All-in-one scraping API with built-in anti-bot bypass and rotating proxies

- Best for: Scraping highly protected sites (Cloudflare, Akamai, etc.) without managing proxies or headless browsers yourself

- Official Docs: Scrape.do Documentation

Scrape.do is a web scraping API service that handles the messy parts of scraping for you. Instead of using a traditional library, you send your request to Scrape.do’s API endpoint, and it returns the HTML (or JSON) of the target page with all anti-bot hurdles already cleared. Under the hood, Scrape.do manages a network of over 110 million rotating proxies and headless browsers to mimic real user behavior. It can defeat common defenses like complex CAPTCHAs, Cloudflare “under attack” mode, and bot blockers like DataDome or Akamai – all without you having to write special handling code.

The major benefit of Scrape.do is simplicity and success rate. If you’re dealing with a site that detects or blocks your own scrapers, you can offload that to Scrape.do. For example, you can scrape a target URL by calling http://api.scrape.do?key=APIKEY&url=TARGET_URL and you’ll get the raw page data as if it were fetched by a real browser with the right IP and fingerprint. This makes it ideal for projects where setting up proxy pools or browser automation is too time-consuming or unreliable. Scrape.do also offers CAPTCHA solving and session management behind the scenes, meaning it will keep cookies or solve puzzles as needed to get through the front door.

Because Scrape.do is a paid API service, the primary downside is cost. Heavy usage will incur fees (though they do offer a free tier with 1000 credits to get started). Also, using an API means you’re adding an external dependency – you have to trust Scrape.do with the target URLs and data. In some cases (very strict compliance requirements), that might not be acceptable. Lastly, while using an API is easy, it’s less flexible than coding a solution yourself; you might not be able to handle exotic interactions on a page the way you could with a custom Selenium script. That said, for most anti-bot and CAPTCHA scenarios, Scrape.do offers a plug-and-play solution that can save you a ton of time, letting you focus on data extraction rather than the cat-and-mouse game of bypassing defenses.
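One practical detail when calling an API of this shape is that the target URL has to be percent-encoded, otherwise its own query string gets mixed up with the API’s parameters. The sketch below builds the request URL using only the standard library. The endpoint and the key and url parameter names are copied from the URL quoted in this article and should be confirmed against the Scrape.do documentation; the helper name is hypothetical.

```python
from urllib.parse import urlencode

# Endpoint as quoted in this article; verify it against the official docs.
API_BASE = "http://api.scrape.do"


def build_api_url(api_key, target_url):
    """Return the API request URL with the target URL safely percent-encoded."""
    query = urlencode({"key": api_key, "url": target_url})
    return f"{API_BASE}?{query}"
```

The resulting string can then be passed to any HTTP client, for example requests.get(build_api_url(...)).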

12. Cloudscraper

- GitHub Stars: ~4K (active community maintaining it)

- Highlight: Bypasses Cloudflare “Under Attack” mode and similar anti-bot challenges using a Python approach

- Best for: Getting past Cloudflare and JavaScript-based blocks when using Requests or other simple HTTP clients

- Official Docs: Cloudscraper GitHub (README)

Cloudscraper is a Python module that specializes in defeating anti-bot pages, especially from Cloudflare. If you’ve ever gotten those “Please wait 5 seconds” or JavaScript challenge pages, Cloudscraper can simulate the necessary browser actions to solve them. It acts as a near drop-in replacement for a Requests session: you create a scraper with cloudscraper.create_scraper() and call scraper.get() instead of requests.get(), and it will automatically handle the challenge by evaluating the JavaScript or token required. This allows your scraper to proceed and get the real page content.

The tool works best for Cloudflare-protected sites or others using similar JS challenges. It’s updated as Cloudflare changes its tactics, and has moderate community support (over 100K weekly downloads, indicating many use it). Using Cloudscraper means you don’t have to resort to a full browser for these cases; it keeps things lightweight by attempting the challenge solution in Python. It also supports integration with CAPTCHA solving services if a challenge escalates to an actual CAPTCHA.

However, Cloudscraper isn’t a silver bullet for all anti-bot systems. It primarily targets known patterns like Cloudflare IUAM. More advanced behavioral detections or other providers (PerimeterX, etc.) might still block you. Also, whenever Cloudflare updates their challenge (which can happen without notice), Cloudscraper may temporarily break until the maintainers adjust – there could be downtime where scraping those sites fails. Another limitation is that for very sophisticated anti-bot scripts, a headless browser might still be needed (Cloudscraper won’t run a full browser environment). In summary, Cloudscraper is a handy first line of defense to programmatically bypass common anti-scraping shields on the web. It keeps your scraper code simple, but be prepared to upgrade or switch tactics if the target throws something it can’t handle.

13. Undetected-Chromedriver

- GitHub Stars: ~11K (popular for Selenium stealth)

- Highlight: A patched version of ChromeDriver that avoids automation detection

- Best for: Stealth scraping with Selenium – when you need a real browser but want to reduce chances of being flagged as a bot

- Official Docs: undetected-chromedriver PyPI

Undetected-Chromedriver (often abbreviated as uc) is essentially a custom Selenium ChromeDriver that’s been modified to remove or mask the typical fingerprints that websites look for to detect bots. Normally, when you use Selenium, websites can detect it through attributes like the infamous navigator.webdriver flag or specific quirks in headless mode. Undetected-Chromedriver continuously adapts to these and tries to present as a genuine Chrome browser. You use it just like a regular Selenium WebDriver – in fact, it’s a drop-in replacement for webdriver.Chrome().

This tool is ideal for scraping targets that aggressively detect and block Selenium. By using undetected_chromedriver, you can often navigate pages that would otherwise show “Access denied” or CAPTCHA walls to standard Selenium scripts. It automatically downloads the correct version of ChromeDriver internally, and can even update to newer Chrome versions on the fly, which makes maintenance easier. Many people scraping ticketing sites, sneaker sites, or other highly guarded web services leverage this project to stay under the radar.

The downside is that it’s a bit of an arms race: as detection techniques evolve, undetected-chromedriver must also update. The project is quite active, but there might be short periods where a new anti-bot measure breaks the stealth until a fix comes. Also, using this doesn’t mean you can abandon good practices – you should still use random delays, multiple proxies/IPs, and other tricks if scraping a site intensively. Additionally, headless mode might still be less reliable than headful (though recent versions claim even headless is undetectable). In terms of usage, it’s a specific solution for Selenium; if you’re not already using Selenium, this library alone won’t help (you’d use something like Scrape.do or Cloudscraper instead). But for those who need Selenium’s power and are facing bot roadblocks, Undetected-Chromedriver is a lifesaver to make your automated browser look as human as possible.

14. 2Captcha (API and Python SDK)

- GitHub Stars: N/A (proprietary service)

- Highlight: Cloud service that solves CAPTCHAs for you (via real humans or AI), with easy Python integration

- Best for: Bypassing CAPTCHA challenges (reCAPTCHA, hCaptcha, image puzzles) that your scraper encounters

- Official Docs: 2Captcha API Documentation (with Python examples)

2Captcha isn’t a typical library but rather an online CAPTCHA-solving service that provides a Python SDK for convenience. When your scraper encounters a CAPTCHA – be it a simple image CAPTCHA, Google reCAPTCHA, hCaptcha, or others – you can use 2Captcha to solve it. The way it works: you send the CAPTCHA (or the site key for something like reCAPTCHA) to 2Captcha via their API, and after a short time, they return the solution (for image/text CAPTCHAs this is the text, for reCAPTCHA it’s a token code to submit). The heavy lifting is done by a combination of human solvers and AI, depending on the type and complexity.

For scraping, 2Captcha is essential when fully automated approaches hit a wall at a CAPTCHA. For example, if a site shows a reCAPTCHA after several page requests, you can integrate the 2Captcha Python client to submit that challenge and get past it. Many scrapers use this in tandem with Selenium or requests-based scrapers: detect a CAPTCHA form, call 2Captcha, and then feed the answer back into the page to continue scraping. The Python library (twocaptcha or 2captcha-python) makes this relatively straightforward by abstracting the HTTP calls to the API.

The obvious drawback is that 2Captcha is a paid service per CAPTCHA. Costs are usually low (fractions of a cent for easy CAPTCHAs to a couple of cents for harder ones), but if you trigger a lot of CAPTCHAs, it can add up. There’s also an inherent delay – solving a CAPTCHA can take anywhere from a few seconds to over 30 seconds depending on difficulty and solver availability, so it will slow down your scraping flow at that point. Lastly, sending data to a third-party might raise security considerations (though usually you’re sending non-sensitive puzzle data). Despite these issues, when you’re stuck with a “Please prove you’re not a robot” roadblock, a service like 2Captcha is often the only practical solution. It integrates well with Python scrapers, allowing you to automate what is literally a human verification step.

Other Useful Libraries

This category covers full-stack scraping frameworks and handy utilities for scheduling, throttling, or exporting data – the glue and engines for larger scraping projects.

15. Scrapy

- GitHub Stars: ~55K (the most-starred web scraping framework)

- Highlight: A full-fledged scraping framework with built-in crawling, parsing, pipeline, and throttling mechanisms

- Best for: Large-scale projects or complex crawls that require speed, structure, and out-of-the-box tooling

- Official Docs: Scrapy Documentation

Scrapy is a batteries-included web crawling framework that takes care of the entire scraping pipeline. With Scrapy, you define “spiders” – Python classes that specify how to crawl a website (e.g., start URLs, how to follow links, and how to parse content). Scrapy handles the networking (it has an asynchronous engine under the hood for high performance), scheduling of requests, parsing of responses, and even the storage/export of scraped data via pipelines. It’s built on Twisted (an async networking library), which means it can be extremely fast and handle many requests in parallel.

The framework is best used when you have a bigger project with lots of pages or sites to scrape, or if you need robust features like auto-throttling (to respect site load), caching, or persistent data storage. Scrapy projects are structured with separation of concerns: you have spiders for crawling, item pipelines for cleaning or saving data, and middlewares for things like rotating proxies or user-agents. Scrapy doesn’t depend on Requests or BeautifulSoup – it has its own downloader and uses Parsel (lxml-based) for parsing HTML with CSS or XPath selectors. Many companies and researchers use Scrapy for its proven performance and the fact that it’s been battle-tested on countless scraping jobs.

The main hurdles with Scrapy are its learning curve and project overhead. Because it’s a framework, you have to embrace its way of doing things (for example, running a crawl via the Scrapy command line or CrawlerProcess, and yielding requests/items from your spider methods). Beginners might find it complex to set up initially. Additionally, Scrapy does not natively execute JavaScript, so dynamic sites need integration with something like Splash or Playwright via middleware. It’s also overkill for quick one-off scrapes, where the amount of code and structure might not be worth it for a small script. If you invest the time to learn it, Scrapy can manage huge scraping operations efficiently, scheduling requests, handling retries, and yielding data in a streamlined way. It’s the framework of choice in Python for scalable web scraping, provided you don’t mind the upfront complexity.

16. MechanicalSoup

- GitHub Stars: ~4.8K (niche but solid user base)

- Highlight: Automates browser-like interactions (form submission, link navigation) without a real browser

- Best for: Logging into websites or crawling through flows of pages using a lightweight approach

- Official Docs: MechanicalSoup Documentation

MechanicalSoup is a clever library that combines Requests and Beautiful Soup to mimic some browser interactions. It was inspired by an old library called Mechanize. With MechanicalSoup, you can do things like navigate pages and submit forms as if you had a simple browser, but it’s all done with HTTP requests under the hood – no JavaScript or rendering is involved. For instance, you can use it to log into a site: it will automatically fill in the form fields and maintain a session (cookies) for you. It automatically follows redirects and links when instructed, making it convenient to handle multi-page sequences.

This tool is great for use cases like logging into websites, scraping pages behind forms, or any situation where you need to maintain a session state and traverse links. It’s much lighter than using Selenium for those tasks because it doesn’t run a browser; it just parses the HTML and figures out what requests to send next. MechanicalSoup will, for example, parse an HTML form into a Python object, let you fill in values, and then submit it – handling all the cookie and state management along the way. It’s especially handy for sites that don’t heavily rely on JavaScript but do have a lot of forms or need sessions.

The limitation of MechanicalSoup is that it does not execute JavaScript. If a site requires JS (like a SPA or a heavily dynamic UI), MechanicalSoup won’t help retrieve that content. Another consideration is that for very large crawls, you’re essentially writing a custom crawler with MechanicalSoup; it’s not as scalable or structured as Scrapy. You may need to handle your own parallelism or rate limiting. Also, it’s somewhat of a niche tool – fewer users means you might not find as many community examples for complex scenarios (though it’s well-documented). In summary, MechanicalSoup is a perfect fit for logging in and navigating through standard web forms/pages in a lightweight manner. It fills the gap between low-level Requests and high-level browser automation, as long as the target site doesn’t demand a full JS engine.

17. APScheduler

- GitHub Stars: ~6.7K (general-purpose scheduling library)

- Highlight: Allows you to schedule Python functions (e.g., your scraping jobs) to run periodically or at specific times

- Best for: Running scrapers on a schedule (daily, hourly, etc.) within your application or maintaining a scraping routine

- Official Docs: APScheduler Documentation

APScheduler (Advanced Python Scheduler) is not a scraping tool per se – it’s a task scheduling library – but it becomes very useful in the context of web scraping when you need to run scrapers at intervals or manage timed jobs. With APScheduler, you can programmatically schedule jobs to run, using cron-like syntax, interval schedules, or specific dates. For example, you could schedule a job to scrape a website every day at 9 AM, or fetch updates every 10 minutes, all from within your Python application.

In a scraping project, APScheduler is best used for automation of recurring scrapes. If you’re not using an external cron service or something like Airflow, APScheduler lets your Python program handle scheduling internally. It supports different “executors” (like threads or process pools) to run jobs in the background, and it can even persist jobs so they survive application restarts (using databases like SQLite, PostgreSQL, etc., as a store). This means you can build a long-running scraping service that knows when to run each spider or task – essentially a custom scraper cron system.

One should note that APScheduler is a library you integrate – it’s not a standalone service. So you’d typically incorporate it into a script or a server that is always on. For pure scraping scripts, if you just need a daily run, sometimes a simple cron job on your server might do; but if you want more control (like dynamically scheduling jobs or running inside an app), APScheduler is ideal. It’s not web-scraping specific, so you have to ensure your scraping code is thread-safe or can run in whatever execution mode you choose. Also, APScheduler will add some complexity – you need to manage the scheduler’s life cycle (start it, keep it running). But overall, it’s a reliable way to handle timed scraping tasks in Python. It saves you from writing custom loops with time.sleep() calls or manually configuring cron, and gives you a programmatic interface to control when and how your scraping jobs fire.

Conclusion

The Python ecosystem in 2025 offers a rich toolbox for web scraping.

With the above libraries at your disposal, you’re equipped to handle everything from basic data extraction to the most challenging scraping projects.
