Category:Proxy

Quick Guide to Using Proxies with Python Requests

Full Stack Developer

Proxies are the backbone of web scraping and crawling.

Without them, you're stuck with your real IP address, making you an easy target for rate limits, geo-blocks, and anti-bot systems that can shut down your entire scraping operation in minutes.

But here's the thing: most developers treat proxies as an afterthought, then wonder why their scrapers fail after a few hundred requests.

In this guide, we'll show you exactly how to integrate proxies with Python requests, handle rotation, bypass geo-restrictions, and avoid the common pitfalls that break scrapers at scale.

Any IPs we use in this article are basically duds, I replaced the IPs I found through just typing "free proxies" on Google.

Adding Proxies to Your Python HTML Requests

The most basic integration of a proxy with Python requests is straightforward, but understanding proxy IPs and ports will save you hours of debugging later.

A proxy acts as an intermediary between your scraper and the target website. Instead of sending requests directly from your IP, you route them through a proxy server that forwards your request and returns the response.

Every proxy has two key components you need to know:

- IP Address: The proxy server's location (e.g.,

198.37.121.89) - Port: The specific port the proxy listens on (e.g.,

8080)

Here's the most basic way to integrate a proxy with a Python request:

import requests

# Define your proxy configuration

proxy = {

'http': 'http://198.37.121.89:8080',

'https': 'http://198.37.121.89:8080'

}

# Send request through the proxy to check your IP

response = requests.get('https://httpbin.org/ip', proxies=proxy)

print(response.json())When you run this code, instead of seeing your real IP address, you'll get the proxy's IP:

{

"origin": "198.37.121.89"

}The proxies parameter accepts a dictionary with http and https keys. Both point to the same proxy server in this example, but you can specify different proxies for HTTP and HTTPS traffic if your setup requires it.

Proxy Use Cases: Why Use Them?

In the world of scraping, a proxy might come in handy in various situations where your default setup isn't enough.

- Anonymity: Using your real IP can cause websites to know exactly who you are and where you're connecting from. Proxies hide your real IP address from target websites, making it harder for them to track your scraping activity or connect multiple requests back to the same source.

- Bypassing Rate Limits: Most websites limit how many requests you can make per minute from a single IP address. Proxies let you distribute your requests across multiple IP addresses to avoid hitting rate limits that would otherwise block your scraper after a few hundred requests.

- Bypassing Geo-Blocks: Some content on the internet will be restricted based on your geographic location, blocking access to region-specific data. Proxies let you appear to be browsing from different countries, accessing region-specific content, regional pricing, localized discounts, or ads that aren't available in your actual location.

- Bypassing Anti-Bots: WAFs and anti-bot systems often block poor quality IPs or prompt CAPTCHA challenges to datacenter addresses. Good quality IPs like residential or mobile ISP proxies will not be subject to these restrictions because they appear as legitimate users.

Bypassing Geo-Restrictions with Proxies in Python Requests

This is the same as the basic proxy setup we showed above, with one key difference: you need to find an IP from the country or region you want to access content for.

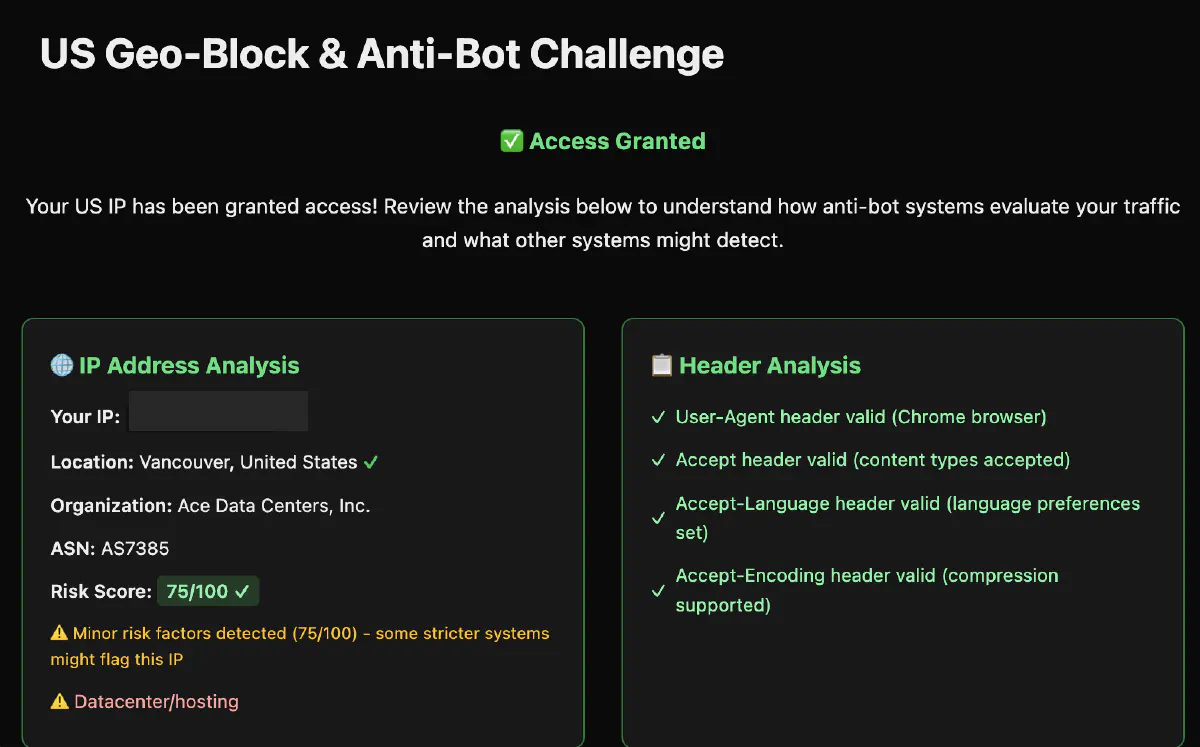

We'll test this by sending a request to https://scrapingtest.com/geo-block-anti-bot-challenge, which is a basic geo-restrictor that looks for US IPs.

The challenge page also looks for these headers: valid user agent, accept, accept-language, and accept-encoding, so we'll add them to the request as well.

Here's how to set up a request with a US proxy and the required headers:

import requests

# US proxy configuration

proxy = {

'http': 'http://198.37.121.89:8080',

'https': 'http://198.37.121.89:8080'

}

# Headers to mimic a real US browser

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Language': 'en-US,en;q=0.5',

'Accept-Encoding': 'gzip, deflate'

}

# Send request to geo-blocked page

response = requests.get(

'https://scrapingtest.com/geo-block-anti-bot-challenge',

proxies=proxy,

headers=headers

)

print(response.text)Here's what the output will look like when we succeed:

This request will successfully bypass the geo-block because it appears to come from a US IP address with proper browser headers, giving you access to the restricted content.

Rotating Proxies in Python Requests

In web scraping, a single request is very rare. Usually, you send hundreds of requests in a matter of minutes.

Let's send 10 requests back-to-back to the challenge page and see what happens:

import requests

import time

# Single proxy (will get blocked)

proxy = {

'http': 'http://198.37.121.89:8080',

'https': 'http://198.37.121.89:8080'

}

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en-US,en;q=0.5',

'Accept-Encoding': 'gzip, deflate'

}

for i in range(10):

response = requests.get(

'https://scrapingtest.com/geo-block-anti-bot-challenge',

proxies=proxy,

headers=headers

)

print(f"Request {i+1}: {response.status_code}")

time.sleep(1)Here's what the output looks like:

Request 1: 200

Request 2: 200

Request 3: 200

Request 4: 429

Request 5: 429

Request 6: 429

Request 7: 429

Request 8: 429

Request 9: 429

Request 10: 429You'll see that after the 3rd request, you start getting 429 Rate limit exceeded. Try again later. responses. This is exactly why we need to add more proxies to our pool so that we rotate them to avoid rate limiting.

Building on top of the previous code, we'll implement rotation of proxies to the mix, then we'll send 10 requests:

import requests

import random

import time

# Pool of proxies for rotation

proxy_pool = [

{'http': 'http://198.37.121.89:8080', 'https': 'http://198.37.121.89:8080'},

{'http': 'http://203.45.67.12:3128', 'https': 'http://203.45.67.12:3128'},

{'http': 'http://185.162.231.45:8080', 'https': 'http://185.162.231.45:8080'},

{'http': 'http://192.168.1.100:8080', 'https': 'http://192.168.1.100:8080'},

{'http': 'http://45.32.101.24:8080', 'https': 'http://45.32.101.24:8080'}

]

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en-US,en;q=0.5',

'Accept-Encoding': 'gzip, deflate'

}

for i in range(10):

# Randomly select a proxy from the pool

proxy = random.choice(proxy_pool)

try:

print(f"Sending request through {proxy['http'].split('//')[1]}:")

response = requests.get(

'https://scrapingtest.com/geo-block-anti-bot-challenge',

proxies=proxy,

headers=headers,

timeout=10

)

print(f"Status Code: {response.status_code}")

except Exception as e:

print(f"Failed: {e}")

time.sleep(1)And here's what you will get in the terminal:

Sending request through 198.37.121.89:8080:

Status Code: 200

Sending request through 45.32.101.24:8080:

Status Code: 200

Sending request through 203.45.67.12:3128:

Status Code: 200

Sending request through 192.168.1.100:8080:

Failed: HTTPConnectionPool(host='192.168.1.100', port=8080): Max retries exceeded

Sending request through 185.162.231.45:8080:

Status Code: 200

Sending request through 198.37.121.89:8080:

Status Code: 200

Sending request through 192.168.1.100:8080:

Failed: HTTPConnectionPool(host='192.168.1.100', port=8080): Max retries exceeded

Sending request through 45.32.101.24:8080:

Status Code: 200

Sending request through 203.45.67.12:3128:

Status Code: 200

Sending request through 185.162.231.45:8080:

Status Code: 200You'll see that one specific IP failed: which is the most natural thing in web scraping. I intentionally kept it to show that 100% success rate is far from reality when doing things at scale.

Bypassing Anti-Bot with Proxies

The challenge page we've been testing is very basic. In the real world, websites use sophisticated systems dedicated to detecting anti-bots through IP quality, user behavior patterns, and advanced fingerprinting.

Let's test our same proxy rotation script against a more advanced anti-bot system:

import requests

import random

import time

# Same proxy pool as before

proxy_pool = [

{'http': 'http://198.37.121.89:8080', 'https': 'http://198.37.121.89:8080'},

{'http': 'http://203.45.67.12:3128', 'https': 'http://203.45.67.12:3128'},

{'http': 'http://185.162.231.45:8080', 'https': 'http://185.162.231.45:8080'},

{'http': 'http://45.32.101.24:8080', 'https': 'http://45.32.101.24:8080'}

]

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en-US,en;q=0.5',

'Accept-Encoding': 'gzip, deflate'

}

# Test against Cloudflare challenge

for i in range(10):

proxy = random.choice(proxy_pool)

try:

response = requests.get(

'https://scrapingtest.com/cloudflare-challenge',

proxies=proxy,

headers=headers,

timeout=10

)

print(f"Request {i+1}: {response.status_code}")

except Exception as e:

print(f"Request {i+1}: Failed - {e}")

time.sleep(1)This will fail with 403 Forbidden all 10 times:

Request 1: 403

Request 2: 403

Request 3: 403

Request 4: 403

Request 5: 403

Request 6: 403

Request 7: 403

Request 8: 403

Request 9: 403

Request 10: 403Simple proxies and headers won't bypass any serious WAF out there.

For production scraping, you need dedicated anti-bot bypass tools like Scrape.do:

import requests

# Scrape.do handles everything automatically

TOKEN = "<your-token>"

target_url = "https://scrapingtest.com/cloudflare-challenge"

for i in range(10):

api_url = f"http://api.scrape.do/?token={TOKEN}&url={target_url}&render=true&super=true"

response = requests.get(api_url)

print(f"Request {i+1}: {response.status_code}")

time.sleep(1)Each request gets 200 OK:

Request 1: 200

Request 2: 200

Request 3: 200

Request 4: 200

Request 5: 200

Request 6: 200

Request 7: 200

Request 8: 200

Request 9: 200

Request 10: 200This works because Scrape.do handles proxy rotation, header spoofing, and anti-bot bypass automatically.

Conclusion

Proxies are a very important component of web scraping, and there are hundreds of providers with pools that have millions of datacenter, residential, and mobile IPs.

But managing proxy rotation, handling failures, and bypassing modern anti-bot systems requires significant infrastructure and expertise that most developers don't have time to build and maintain.

Solutions like Scrape.do take the headache of web scraping away, bypassing geo-blocks and anti-bots easily so you can focus on extracting the data you need instead of fighting technical obstacles.

Full Stack Developer