
How to Scrape Car Rental Data from DiscoverCars.com in 2026

R&D Engineer

DiscoverCars processes thousands of car rental searches daily across hundreds of locations worldwide.

For travel agencies, price comparison platforms, or market research firms, this data is essential competitive intelligence.

But DiscoverCars doesn't make it easy. The platform sits behind Cloudflare protection, requires specific location parameters, and sometimes returns its results embedded in HTML rather than as clean JSON.

We'll show you exactly how to extract it.

Why Scraping DiscoverCars is Challenging

DiscoverCars operates as a car rental aggregator, which means it has to protect its data from competitors while still serving a large volume of legitimate searches.

They've implemented several layers of protection that make automated access difficult.

Cloudflare WAF Protection

DiscoverCars uses Cloudflare's Web Application Firewall to analyze incoming requests.

Every request gets inspected for bot-like patterns: TLS fingerprints, header inconsistencies, and request timing.

Send a basic Python requests call and you'll hit a challenge page instead of search results.
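It helps to recognize a challenge page when you get one, so you don't mistake it for a parsing bug. The function below is a rough heuristic sketch, not Cloudflare's documented detection logic. It assumes a challenge shows up in one of two ways: as a `cf-mitigated: challenge` response header, or as a 403/503 status whose body contains the "Just a moment..." interstitial text. `looks_like_cloudflare_challenge` is a hypothetical helper name.

```python
def looks_like_cloudflare_challenge(status_code, headers, body):
    """Heuristic: does this response look like a Cloudflare challenge page?

    Assumptions (not an official spec): a challenge may carry a
    'cf-mitigated: challenge' header, or come back as 403/503 with the
    "Just a moment..." interstitial text in the body.
    """
    # HTTP header names are case-insensitive, so normalize them first
    lowered = {name.lower(): value for name, value in headers.items()}
    if lowered.get("cf-mitigated", "").lower() == "challenge":
        return True
    if status_code in (403, 503) and "just a moment" in body.lower():
        return True
    return False


# Usage with made-up responses (no network call involved)
blocked = looks_like_cloudflare_challenge(
    403, {"Server": "cloudflare"}, "<title>Just a moment...</title>"
)
ok = looks_like_cloudflare_challenge(
    200, {"Content-Type": "application/json"}, '{"success": true}'
)
print(blocked, ok)  # True False
```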

Complex API Flow with Session Management

Unlike simple product pages, DiscoverCars requires a multi-step process:

- Location lookup via autocomplete API

- Search creation that returns a unique search ID and security token

- Results fetching using the generated search URL

Each step builds on the previous one, and missing any parameter breaks the entire flow.
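The dependency between the three steps can be sketched as a small pipeline. In this hypothetical version, each step is passed in as a function, so the data hand-off is visible without any network calls:

1. The autocomplete step supplies the IDs.
2. The search-creation step turns those IDs into a `guid`/`sq` pair.
3. Only then can the results URL be requested.

The stub functions and their return values are invented for illustration. The field names `countryID`, `cityID`, `placeID`, `guid`, and `sq`, and the results URL pattern, come from the code later in this guide.

```python
def run_search_flow(lookup, create, fetch, term, pickup_from, pickup_to):
    """Chain the three steps; each one needs the previous step's output."""
    locations = lookup(term)
    if not locations:
        raise ValueError(f"No locations found for {term!r}")
    place = locations[0]  # assumption: the first match is the one we want

    created = create(place["countryID"], place["cityID"], place["placeID"],
                     pickup_from, pickup_to)
    if not created or "guid" not in created or "sq" not in created:
        raise RuntimeError("Search creation failed; no guid/sq to continue with")

    url = (f"https://www.discovercars.com/api/v2/search/{created['guid']}"
           f"?sq={created['sq']}&searchVersion=2")
    return fetch(url)


# Offline stubs standing in for the real HTTP calls (values are made up)
def fake_lookup(term):
    return [{"countryID": 54, "cityID": 350, "placeID": 1850}]


def fake_create(country_id, city_id, place_id, pickup_from, pickup_to):
    return {"guid": "abc123", "sq": "token456"}


def fake_fetch(url):
    return url  # echo the URL so it can be inspected


result_url = run_search_flow(fake_lookup, fake_create, fake_fetch,
                             "CMN", "2025-09-26 11:00", "2025-09-30 11:00")
print(result_url)
```

If any step comes back empty, the flow stops there instead of sending a request with missing parameters.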

Dynamic JavaScript Content

Search results aren't always delivered as clean JSON.

Sometimes the JSON payload arrives wrapped in an HTML response. It then has to be located and pulled out of the surrounding markup before it can be parsed.
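One common shape of "JSON inside HTML" is a raw JSON body wrapped in a `<pre>` element, which is how browsers display JSON. Whether DiscoverCars responses arrive exactly this way is an assumption. The sketch below only illustrates the extraction idea:

1. Pull out the `<pre>` contents.
2. Undo HTML entity escaping.
3. Parse the result as JSON.

`unwrap_pre_json` is a hypothetical helper name.

```python
import html
import json
import re


def unwrap_pre_json(page):
    """Return the JSON value inside the first <pre> element, or None."""
    match = re.search(r"<pre[^>]*>(.*?)</pre>", page, re.DOTALL | re.IGNORECASE)
    if not match:
        return None
    # Entities such as &quot; or &amp; must be decoded before JSON parsing
    text = html.unescape(match.group(1)).strip()
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


sample = ('<html><body><pre>{&quot;success&quot;: true, '
          '&quot;data&quot;: {&quot;offers&quot;: []}}</pre></body></html>')
print(unwrap_pre_json(sample))  # {'success': True, 'data': {'offers': []}}
```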

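We'll handle these obstacles with Scrape.do, which takes care of Cloudflare challenges and proxy rotation for us. Our own code drives the multi-step search flow and extracts JSON from HTML when needed. Scrape.do can also render JavaScript through its render=true parameter, but the requests in this guide don't use it.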

Prerequisites

We'll use Python with a few essential libraries to build our DiscoverCars scraper.

Install Required Libraries

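The only third-party library you need is requests, for HTTP calls. The other modules used here are part of Python's standard library: urllib.parse (URL encoding), json (parsing responses), re (pattern matching), and csv (export).

```bash
pip install requests
```

Get Your Scrape.do Token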

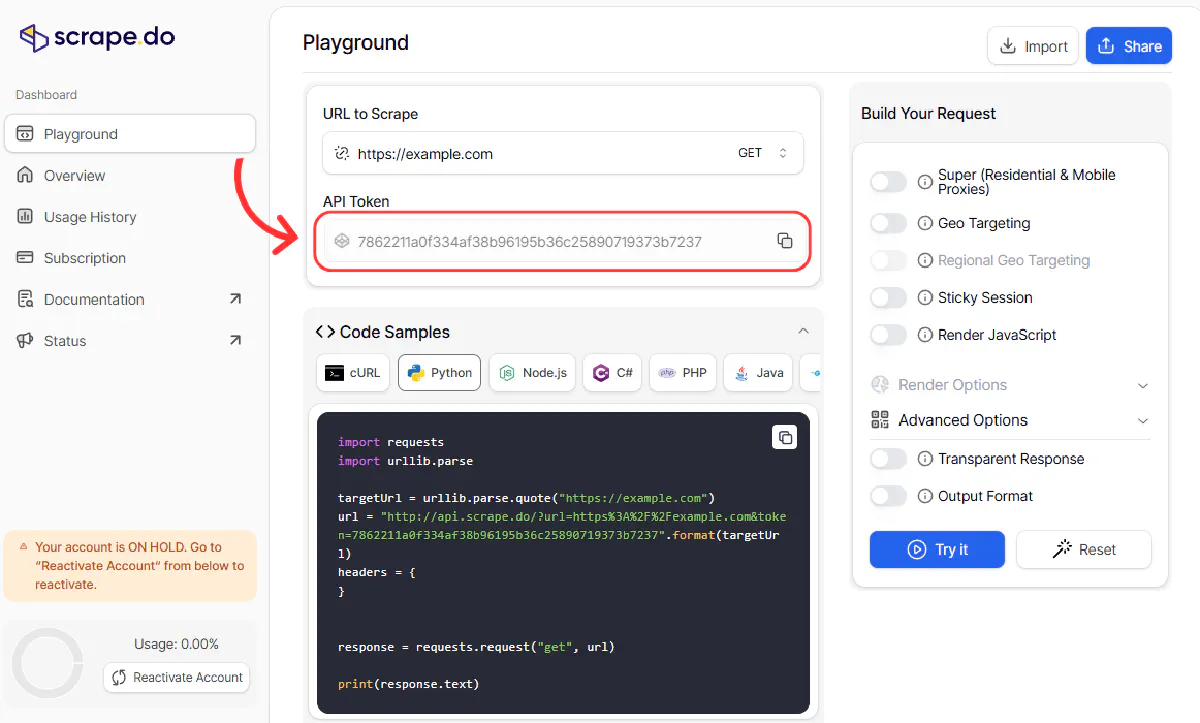

- Create a free Scrape.do account (it includes 1000 free credits per month)

- Copy your API token from the dashboard

This token routes your requests through Scrape.do's infrastructure, which handles Cloudflare challenges and proxy rotation for you.
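Every call in this guide wraps the target URL in a Scrape.do API URL. Because the target URL travels as a query parameter, it must be percent-encoded so its own `?`, `&`, and `=` aren't mistaken for Scrape.do's parameters.

The sketch below builds that URL with `urllib.parse.urlencode` rather than string formatting. Passing `params=` to `requests.get` performs the same kind of encoding for you. The `token`, `url`, and `super` parameters are the ones this guide uses; `build_api_url` is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

SCRAPE_DO_ENDPOINT = "https://api.scrape.do/"


def build_api_url(target_url, token, **options):
    """Wrap a target URL in a Scrape.do API URL with proper percent-encoding."""
    params = {"token": token, "url": target_url}
    # Extra Scrape.do options, e.g. super="true", are passed through as-is
    params.update(options)
    return SCRAPE_DO_ENDPOINT + "?" + urlencode(params)


target = "https://www.discovercars.com/api/v2/search/abc?sq=xyz&searchVersion=2"
api_url = build_api_url(target, "YOUR_TOKEN_HERE", super="true")
print(api_url)

# Decoding the query string gives back the original target URL intact
decoded = parse_qs(urlsplit(api_url).query)
print(decoded["url"][0] == target)  # True
```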

Search for Available Locations

Before creating any rental searches, you need to find the specific location IDs that DiscoverCars uses internally.

The platform provides an autocomplete API that maps search terms to location data including country IDs, city IDs, and place IDs.

Understanding Location Parameters

DiscoverCars requires three key identifiers for any search:

- Country ID: Numeric identifier for the country (countryID in the autocomplete response)

- City ID: Numeric identifier for the city (cityID)

- Location ID: Specific pickup location such as an airport or city center (placeID)

Let's build a function to search for these using a location name:

```python
import requests
import urllib.parse


def get_cities(search_term, token):
    """
    Search for location data using the DiscoverCars autocomplete API.
    Returns location details including the required IDs.
    """
    # Encode the search term itself (spaces, non-ASCII characters)
    term = urllib.parse.quote(search_term, safe="")
    target_url = f"https://www.discovercars.com/en/search/autocomplete/{term}"
    # Encode the full target URL so it can be passed as Scrape.do's url parameter
    encoded_url = urllib.parse.quote(target_url)
    api_url = f"https://api.scrape.do/?token={token}&url={encoded_url}&super=true"

    response = requests.get(api_url)

    try:
        locations = response.json()
    except ValueError as e:
        print(f"Error parsing location data: {e}")
        return None

    if isinstance(locations, list):
        for location in locations:
            print("--------------------------------")
            print("Location:", location.get("location"))
            print("Place:", location.get("place"))
            print("City:", location.get("city"))
            print("Country:", location.get("country"))
            print("Country ID:", location.get("countryID"))
            print("City ID:", location.get("cityID"))
            print("Place ID:", location.get("placeID"))
        print("--------------------------------")

    return locations


# Example usage
token = "YOUR_TOKEN_HERE"
locations = get_cities("CMN", token)  # CMN is the IATA code for Casablanca's airport
```

This will output detailed information for each matching location, showing you the exact IDs needed for the next step.
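To feed an autocomplete result into the next step, you only need three of its fields. The mapping below is a sketch that assumes `placeID` is the value `create_search` expects as `pick_up_location_id`. The sample entry is made-up data shaped like the keys `get_cities` prints, and `location_to_search_ids` is a hypothetical helper.

```python
def location_to_search_ids(location):
    """Map one autocomplete entry to the ID arguments create_search() takes.

    Assumption: placeID is the pickup location ID used by search creation.
    """
    try:
        return {
            "pick_up_country_id": int(location["countryID"]),
            "pick_up_city_id": int(location["cityID"]),
            "pick_up_location_id": int(location["placeID"]),
        }
    except (KeyError, TypeError, ValueError) as exc:
        raise ValueError(f"Autocomplete entry is missing a usable ID: {exc}") from exc


# Made-up entry using the same keys get_cities() prints
entry = {"location": "Example location", "countryID": "54",
         "cityID": "350", "placeID": "1850"}
print(location_to_search_ids(entry))
```

The returned dict can be unpacked straight into the next call, e.g. `create_search(token=token, pickup_from=..., pickup_to=..., **location_to_search_ids(entry))`.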

Create a Car Rental Search

Once you have the location IDs, you can create a rental search on DiscoverCars.

This step sends a POST request to their search creation endpoint with pickup/dropoff details and returns a unique search identifier.
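It can help to see what that POST actually carries. When you pass a dict as `data=` to `requests.post`, requests encodes it as an `application/x-www-form-urlencoded` body using the standard library's `urllib.parse.urlencode`. You can therefore reproduce the body locally. The trimmed form below uses only a few of the fields from the full request, with the example values used later in this guide.

```python
from urllib.parse import parse_qs, urlencode

# A trimmed subset of the create-search form fields
form_data = {
    "pick_up_country_id": "54",
    "pick_up_city_id": "350",
    "pick_up_location_id": "1850",
    "pickup_from": "2025-09-26 11:00",
    "pickup_to": "2025-09-30 11:00",
    "driver_age": "35",
}

body = urlencode(form_data)
# Spaces become '+' and ':' becomes %3A, e.g. pickup_from=2025-09-26+11%3A00
print(body)

# Decoding the body recovers the original values
print(parse_qs(body)["pickup_from"])  # ['2025-09-26 11:00']
```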

Build the Search Creation Request

The search creation requires specific form data including location IDs, dates, and driver information:

```python
def create_search(token, pick_up_country_id, pick_up_city_id, pick_up_location_id,
                  pickup_from, pickup_to, pick_time="11:00", drop_time="11:00",
                  driver_age=35, residence_country="GB"):
    """
    Create a car rental search on DiscoverCars.
    Returns a dict with the search URL and GUID, or None on failure.
    """
    target_url = "https://www.discovercars.com/en/search/create-search"
    encoded_url = urllib.parse.quote(target_url)

    # Prepare form data with all required parameters
    form_data = {
        'is_drop_off': '0',
        'pick_up_country_id': str(pick_up_country_id),
        'pick_up_city_id': str(pick_up_city_id),
        'pick_up_location_id': str(pick_up_location_id),
        'drop_off_country_id': str(pick_up_country_id),  # Same as pickup
        'drop_off_city_id': str(pick_up_city_id),  # Same as pickup
        'drop_off_location_id': str(pick_up_location_id),  # Same as pickup
        'pickup_id': str(pick_up_location_id),
        'dropoff_id': str(pick_up_location_id),
        'pickup_from': pickup_from,  # Pickup date and time, e.g. "2025-09-26 11:00"
        'pickup_to': pickup_to,  # Drop-off date and time, e.g. "2025-09-30 11:00"
        'pick_time': pick_time,
        'drop_time': drop_time,
        'partner_id': '0',
        'exclude_locations': '0',
        'luxOnly': '0',
        'driver_age': str(driver_age),
        'residence_country': residence_country,
        'abtest': '',
        'token': '',
        'recent_search': '0'
    }

    headers = {
        'accept': 'application/json, text/javascript, */*; q=0.01',
        'content-type': 'application/x-www-form-urlencoded; charset=UTF-8'
    }

    api_url = f"https://api.scrape.do/?url={encoded_url}&token={token}&super=true"

    try:
        response = requests.post(api_url, data=form_data, headers=headers)
        result = response.json()

        if result and result.get('success'):
            search_id = result['data']['guid']
            search_sq = result['data']['sq']

            # Construct the search results URL
            search_url = f"https://www.discovercars.com/api/v2/search/{search_id}?sq={search_sq}&searchVersion=2"

            return {
                'success': True,
                'search_url': search_url,
                'search_id': search_id
            }

        print("Search creation failed")
        return None

    except Exception as e:
        print(f"Error creating search: {e}")
        return None
```

Example Search Creation

Here's how to create a search for a specific location and date range:

```python
token = "YOUR_TOKEN_HERE"

# Using location data from the previous step (example for Casablanca)
# Adjust the dates so the pickup is in the future when you run this
search_result = create_search(
    token=token,
    pick_up_country_id=54,  # Morocco
    pick_up_city_id=350,  # Casablanca
    pick_up_location_id=1850,  # Specific location
    pickup_from="2025-09-26 11:00",
    pickup_to="2025-09-30 11:00"
)

if search_result and search_result.get('success'):
    print("Search created successfully!")
    print("Search URL:", search_result['search_url'])
else:
    print("Failed to create search")
```

The response includes a search_url that we'll use in the next step to fetch actual vehicle results.
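The example hard-codes its dates, and a search whose pickup date has already passed is unlikely to return offers. A small helper can generate the `pickup_from`/`pickup_to` strings in the `YYYY-MM-DD HH:MM` format shown in the form-data comments. `rental_window` is a hypothetical convenience function, not part of any API. Its 11:00 default mirrors the `pick_time`/`drop_time` defaults of `create_search`.

```python
from datetime import date, datetime, time, timedelta


def rental_window(start_day, days, at="11:00", today=None):
    """Build (pickup_from, pickup_to) strings for a rental lasting `days` days.

    Format follows the form-data comments: "YYYY-MM-DD HH:MM".
    """
    if today is None:
        today = date.today()
    if start_day < today:
        raise ValueError("Pickup date is in the past")
    if days < 1:
        raise ValueError("Rental must last at least one day")

    hour, minute = map(int, at.split(":"))
    pickup = datetime.combine(start_day, time(hour, minute))
    dropoff = pickup + timedelta(days=days)
    fmt = "%Y-%m-%d %H:%M"
    return pickup.strftime(fmt), dropoff.strftime(fmt)


# Reproduces this guide's example dates (today is pinned so the check is stable)
print(rental_window(date(2025, 9, 26), 4, today=date(2025, 9, 1)))
# ('2025-09-26 11:00', '2025-09-30 11:00')

# Typical use: a 4-day rental starting two weeks from today
pickup_from, pickup_to = rental_window(date.today() + timedelta(days=14), 4)
```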

Fetch and Parse Vehicle Results

With a valid search URL, you can now retrieve the actual car rental offers.

DiscoverCars returns vehicle data in JSON format, but sometimes embeds it within HTML responses that require careful extraction.
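One way to decide which extraction path to take is to inspect the response before parsing it. This sketch assumes the `Content-Type` header and the first non-whitespace character of the body are reliable enough hints. `detect_payload_kind` is a hypothetical helper. An `"unknown"` result is your cue to log the body and inspect it by hand.

```python
def detect_payload_kind(content_type, body):
    """Guess whether a response body is raw JSON or HTML-wrapped content."""
    # Drop parameters such as "; charset=utf-8" from the media type
    media_type = (content_type or "").split(";", 1)[0].strip().lower()
    start = body.lstrip()[:1]

    if media_type == "application/json" or start in ("{", "["):
        return "json"
    if media_type == "text/html" or start == "<":
        return "html"
    return "unknown"


print(detect_payload_kind("application/json; charset=utf-8", '{"success": true}'))  # json
print(detect_payload_kind("text/html", "<html><pre>{}</pre></html>"))  # html
print(detect_payload_kind("text/plain", "Access denied"))  # unknown
```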

Handle Different Response Formats

The results endpoint can return data in multiple formats depending on how DiscoverCars processes your request:

```python
import json
import re


def has_offers(payload):
    """True if payload looks like {'success': ..., 'data': {'offers': [...]}}."""
    return (isinstance(payload, dict) and bool(payload.get('success'))
            and isinstance(payload.get('data'), dict)
            and 'offers' in payload['data'])


def fetch_search_results(search_url, token):
    """
    Fetch search results from a DiscoverCars search URL.
    Handles both JSON and HTML-embedded responses.
    """
    encoded_url = urllib.parse.quote(search_url)
    api_url = f"https://api.scrape.do/?url={encoded_url}&token={token}&super=true"

    try:
        response = requests.get(api_url)
    except requests.RequestException as e:
        print(f"Error fetching search results: {e}")
        return None

    # Try parsing as direct JSON first
    try:
        response_data = response.json()
    except ValueError:
        # Response might be HTML with embedded JSON
        return extract_json_from_html(response.text)

    if has_offers(response_data):
        return parse_vehicle_offers(response_data['data']['offers'])

    print("Response contained no vehicle offers")
    return None


def parse_vehicle_offers(offers, show=10):
    """
    Parse vehicle offers into structured data.
    Returns every offer but prints only the first `show` of them.
    """
    vehicles = []

    print(f"Found {len(offers)} vehicles:")
    print("=" * 50)

    for i, offer in enumerate(offers, 1):
        vehicle = offer.get('vehicle') or {}
        price = offer.get('price') or {}

        car_name = vehicle.get('carName', 'N/A')
        sipp_group = vehicle.get('sippGroup', 'N/A')  # Car category (SIPP group)
        formatted_price = price.get('formatted', 'N/A')

        vehicle_data = {
            'name': car_name,
            'category': sipp_group,
            'price': formatted_price,
            'supplier': (offer.get('supplier') or {}).get('name', 'N/A'),
            'rating': offer.get('rating', 'N/A')
        }
        vehicles.append(vehicle_data)

        if i <= show:  # Limit console output, but keep collecting every offer
            print(f"{i}. {car_name}")
            print(f"   Category: {sipp_group}")
            print(f"   Price: {formatted_price}")
            print(f"   Supplier: {vehicle_data['supplier']}")
            print("-" * 30)

    return vehicles


def extract_json_from_html(html_content):
    """
    Extract the results JSON when it is embedded in an HTML response.
    """
    decoder = json.JSONDecoder()

    # Try each '{' as a possible start of the payload; raw_decode stops at the
    # end of the first complete JSON value instead of grabbing the whole page
    for match in re.finditer(r'\{', html_content):
        try:
            candidate, _ = decoder.raw_decode(html_content, match.start())
        except json.JSONDecodeError:
            continue
        if has_offers(candidate):
            return parse_vehicle_offers(candidate['data']['offers'])

    print("No valid vehicle data found in response")
    return None
```

Complete Example with Results Fetching

Here's how to tie everything together:

```python
token = "YOUR_TOKEN_HERE"

# Create search
search_result = create_search(
    token=token,
    pick_up_country_id=54,
    pick_up_city_id=350,
    pick_up_location_id=1850,
    pickup_from="2025-09-26 11:00",
    pickup_to="2025-09-30 11:00"
)

if search_result and search_result.get('success'):
    # Fetch results
    vehicles = fetch_search_results(search_result['search_url'], token)

    if vehicles:
        print(f"\nSuccessfully extracted {len(vehicles)} vehicle offers")
    else:
        print("No vehicles found")
else:
    print("Search creation failed")
```

This will display available vehicles with their names, categories, prices, and suppliers.
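The `price` field collected here is the display string from `price.formatted`, so comparing offers numerically means parsing it first. The exact format of that string isn't shown in this guide. The sketch below assumes a currency symbol or code followed by a number with comma thousands separators and a dot decimal point (for example `€1,234.56`); other locales would need a different rule. `price_to_float` and `cheapest` are hypothetical helpers, and the sample rows are made up.

```python
import re


def price_to_float(formatted):
    """Parse a display price such as '€1,234.56' into 1234.56.

    Assumes comma thousands separators and a dot decimal point.
    Returns None when no number can be found (e.g. 'N/A').
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", formatted or "")
    if not match:
        return None
    return float(match.group(0).replace(",", ""))


def cheapest(vehicles):
    """Return the vehicle dict with the lowest parseable price, or None."""
    priced = [v for v in vehicles if price_to_float(v.get("price")) is not None]
    return min(priced, key=lambda v: price_to_float(v["price"]), default=None)


# Made-up rows in the same shape parse_vehicle_offers() returns
sample = [
    {"name": "Car A", "price": "€1,234.56"},
    {"name": "Car B", "price": "€98.10"},
    {"name": "Car C", "price": "N/A"},
]
print(cheapest(sample)["name"])  # Car B
```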

Complete Working Script

Here's the full script that combines all the steps above into a working DiscoverCars scraper:

```python
import csv
import json
import re
import urllib.parse

import requests


def get_cities(search_term, token):
    """Search for location data using the DiscoverCars autocomplete API."""
    # Encode the term first, then the whole target URL for Scrape.do
    term = urllib.parse.quote(search_term, safe="")
    target_url = f"https://www.discovercars.com/en/search/autocomplete/{term}"
    encoded_url = urllib.parse.quote(target_url)
    api_url = f"https://api.scrape.do/?token={token}&url={encoded_url}&super=true"

    response = requests.get(api_url)
    try:
        return response.json()
    except ValueError as e:
        print(f"Error parsing location data: {e}")
        return None


def create_search(token, pick_up_country_id, pick_up_city_id, pick_up_location_id,
                  pickup_from, pickup_to, pick_time="11:00", drop_time="11:00",
                  driver_age=35, residence_country="GB"):
    """Create a car rental search on DiscoverCars; returns None on failure."""
    target_url = "https://www.discovercars.com/en/search/create-search"
    encoded_url = urllib.parse.quote(target_url)

    form_data = {
        'is_drop_off': '0',
        'pick_up_country_id': str(pick_up_country_id),
        'pick_up_city_id': str(pick_up_city_id),
        'pick_up_location_id': str(pick_up_location_id),
        'drop_off_country_id': str(pick_up_country_id),
        'drop_off_city_id': str(pick_up_city_id),
        'drop_off_location_id': str(pick_up_location_id),
        'pickup_id': str(pick_up_location_id),
        'dropoff_id': str(pick_up_location_id),
        'pickup_from': pickup_from,
        'pickup_to': pickup_to,
        'pick_time': pick_time,
        'drop_time': drop_time,
        'partner_id': '0',
        'exclude_locations': '0',
        'luxOnly': '0',
        'driver_age': str(driver_age),
        'residence_country': residence_country,
        'abtest': '',
        'token': '',
        'recent_search': '0'
    }

    headers = {
        'accept': 'application/json, text/javascript, */*; q=0.01',
        'content-type': 'application/x-www-form-urlencoded; charset=UTF-8'
    }

    api_url = f"https://api.scrape.do/?url={encoded_url}&token={token}&super=true"

    try:
        response = requests.post(api_url, data=form_data, headers=headers)
        result = response.json()

        if result and result.get('success'):
            search_id = result['data']['guid']
            search_sq = result['data']['sq']
            search_url = f"https://www.discovercars.com/api/v2/search/{search_id}?sq={search_sq}&searchVersion=2"
            return {
                'success': True,
                'search_url': search_url,
                'search_id': search_id
            }

        print("Search creation failed")
    except Exception as e:
        print(f"Error creating search: {e}")

    return None


def has_offers(payload):
    """True if payload looks like {'success': ..., 'data': {'offers': [...]}}."""
    return (isinstance(payload, dict) and bool(payload.get('success'))
            and isinstance(payload.get('data'), dict)
            and 'offers' in payload['data'])


def fetch_search_results(search_url, token):
    """Fetch and parse vehicle results from a search URL."""
    encoded_url = urllib.parse.quote(search_url)
    api_url = f"https://api.scrape.do/?url={encoded_url}&token={token}&super=true"

    try:
        response = requests.get(api_url)
    except requests.RequestException as e:
        print(f"Error fetching results: {e}")
        return None

    # Try parsing as JSON first
    try:
        response_data = response.json()
    except ValueError:
        # Fall back to extracting JSON from HTML
        return extract_json_from_html(response.text)

    if has_offers(response_data):
        return parse_vehicle_offers(response_data['data']['offers'])

    print("Response contained no vehicle offers")
    return None


def parse_vehicle_offers(offers):
    """Parse vehicle offers into structured data."""
    vehicles = []

    print(f"Found {len(offers)} vehicles:")
    print("=" * 50)

    for i, offer in enumerate(offers, 1):
        vehicle = offer.get('vehicle') or {}
        price = offer.get('price') or {}

        vehicle_data = {
            'name': vehicle.get('carName', 'N/A'),
            'category': vehicle.get('sippGroup', 'N/A'),
            'price': price.get('formatted', 'N/A'),
            'supplier': (offer.get('supplier') or {}).get('name', 'N/A'),
            'rating': offer.get('rating', 'N/A')
        }
        vehicles.append(vehicle_data)

        print(f"{i}. {vehicle_data['name']}")
        print(f"   Category: {vehicle_data['category']}")
        print(f"   Price: {vehicle_data['price']}")
        print(f"   Supplier: {vehicle_data['supplier']}")
        print("-" * 30)

    return vehicles


def extract_json_from_html(html_content):
    """Extract the results JSON from an HTML response when needed."""
    decoder = json.JSONDecoder()

    # Try each '{' as a possible start of the payload; raw_decode stops at
    # the end of the first complete JSON value
    for match in re.finditer(r'\{', html_content):
        try:
            candidate, _ = decoder.raw_decode(html_content, match.start())
        except json.JSONDecodeError:
            continue
        if has_offers(candidate):
            return parse_vehicle_offers(candidate['data']['offers'])

    print("No valid vehicle data found in response")
    return None


def save_to_csv(vehicles, filename="discovercars_results.csv"):
    """Save vehicle data to a CSV file."""
    if not vehicles:
        print("No vehicles to save")
        return

    with open(filename, 'w', newline='', encoding='utf-8') as f:
        writer = csv.DictWriter(f, fieldnames=['name', 'category', 'price', 'supplier', 'rating'])
        writer.writeheader()
        writer.writerows(vehicles)

    print(f"Saved {len(vehicles)} vehicles to {filename}")


def main():
    token = "YOUR_TOKEN_HERE"  # Replace with your Scrape.do token

    # Example search for Casablanca, Morocco (use future dates when you run it)
    print("Creating search for Casablanca...")
    search_result = create_search(
        token=token,
        pick_up_country_id=54,  # Morocco
        pick_up_city_id=350,  # Casablanca
        pick_up_location_id=1850,  # Specific location
        pickup_from="2025-09-26 11:00",
        pickup_to="2025-09-30 11:00"
    )

    if search_result and search_result.get('success'):
        print("Search created successfully!")
        print("Fetching vehicle results...")

        vehicles = fetch_search_results(search_result['search_url'], token)

        if vehicles:
            save_to_csv(vehicles)
            print(f"\nExtracted {len(vehicles)} vehicle offers successfully")
        else:
            print("No vehicles found")
    else:
        print("Failed to create search")


if __name__ == "__main__":
    main()
```

Conclusion

Scraping car rental data from DiscoverCars means dealing with Cloudflare protection, a multi-step API flow, and results that sometimes arrive wrapped in HTML.

But with the right approach, you can reliably extract vehicle offers, pricing, and supplier information for any location and date range.

Scrape.do handles the heavy lifting (Cloudflare challenges and proxy rotation), so you can focus on extracting the data you need for your travel platform, price comparison service, or market research.

You might be building a competitor analysis tool or integrating rental data into your application. Either way, the same three steps apply to one search or many: loop over locations and date ranges, and add retries and rate limiting as your request volume grows.
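At higher volumes some requests will inevitably fail, so batch jobs usually wrap each call in a retry loop. The helper below is a generic sketch of retries with exponential backoff, not a Scrape.do feature. The attempt count, the delays, and the choice to treat both exceptions and a `None` result as failures are assumptions. That last choice matches how the functions in this guide signal errors. `with_retries` is a hypothetical name.

```python
import time


def with_retries(func, *args, attempts=3, base_delay=1.0, sleep=time.sleep, **kwargs):
    """Call func(*args, **kwargs), retrying on exceptions or a None result.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts.
    """
    for attempt in range(1, attempts + 1):
        try:
            result = func(*args, **kwargs)
            if result is not None:
                return result
        except Exception as exc:  # broad on purpose: network errors vary
            print(f"Attempt {attempt} failed: {exc}")
        if attempt < attempts:
            sleep(base_delay * 2 ** (attempt - 1))
    return None


# Offline demo: fails twice, then succeeds; sleep just records the delays
calls = []


def flaky():
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("temporary failure")
    return ["offer"]


delays = []
result = with_retries(flaky, attempts=3, base_delay=1.0, sleep=delays.append)
print(result, delays)  # ['offer'] [1.0, 2.0]
```

In a real batch, a call such as `with_retries(fetch_search_results, search_url, token)` would retry a fetch that returned `None`.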
