What Is Content Scraping and Why Is It Necessary?

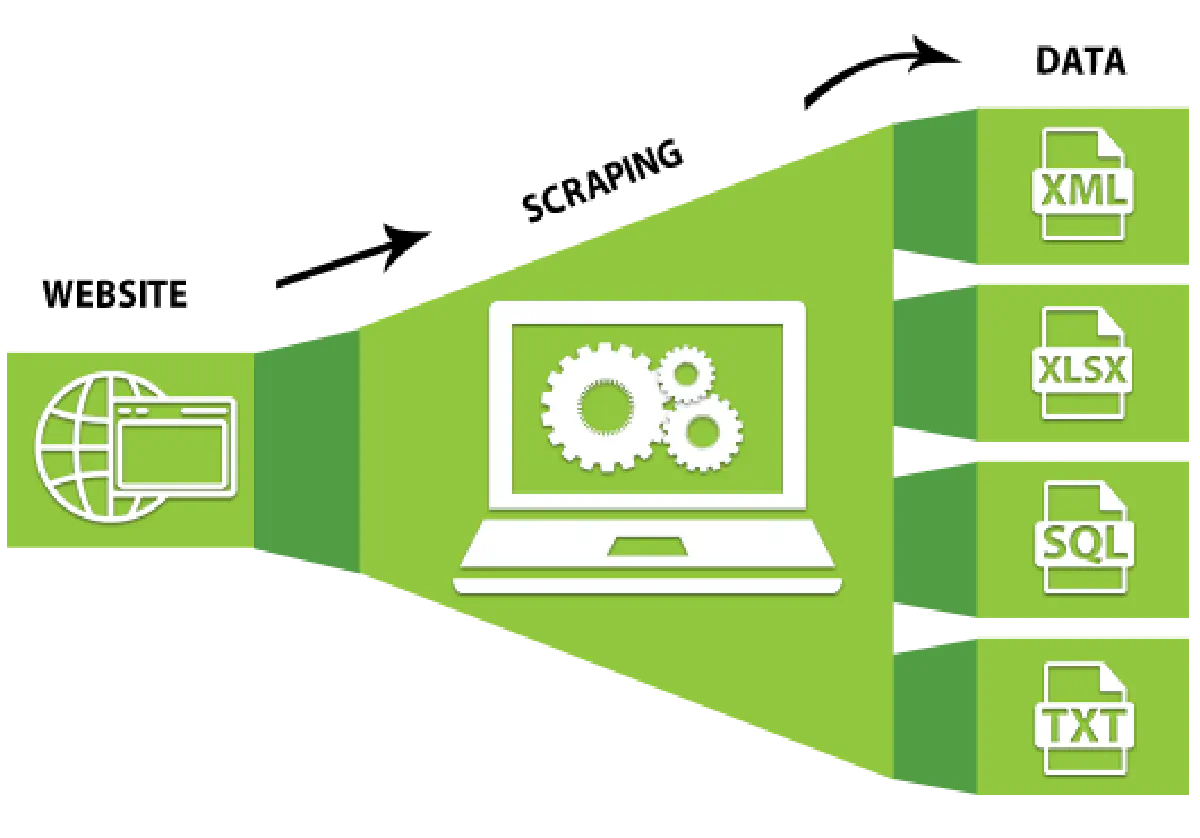

Content scraping is often confused with web scraping and data scraping because all three are carried out in the same way. What sets content scraping apart is its goal: taking unique, original content from other websites and publishing it elsewhere. Rather than extracting specific data points, this method copies the content in its entirety. Because it is done without the permission of the original source or author, it typically infringes copyright and is often illegal. Many people are uncomfortable with the technique, and the website that republishes the content can suffer in search engine optimization, yet content scraping is still widely used.

Scrape.do is an online web scraping service provider and content extraction company serving businesses of all sizes. If you have any web data extraction needs, our team of web scraping experts can help. You can use our service to extract data from any website and turn it into a CSV file. We guarantee high-quality services that meet our customers' demands. Contact us now; it will be a good start for your business.

What is Content Scraping?

Content scraping is when a bot downloads most or all of the content on a website, regardless of the website owner's wishes and copyrights. Content scraping uses data scraping methods, but it is not the same as data scraping: instead of extracting particular data, it copies the content itself. Because the process is almost always carried out by bots, the entire content of a website can be downloaded within a few seconds.

One of the main reasons content scraping bots collect content is to repost it and improve the SEO of the attacker's website. As a result, copyrights are violated, original content is stolen, and the content can be reused for purely malicious purposes. In some cases, forms may also need to be filled out and submitted to reach the content.

Why is Content Scraping Necessary?

E-commerce sites that want to keep up with their competitors and follow trends can collect competitors' price data by scraping their websites. Content scrapers can also help them evaluate the data they collect. Some websites also take other sites' content to publish on their own websites and applications for free. Typical uses of content scrapers include the following:

- A website owner can use a content scraper to increase the number of pages on their website by reposting the scraped content there.

- Website owners can use content scrapers to keep their sites up-to-date: by republishing scraped content, they can make the website appear to be updated regularly.

- Website owners can also collect content and translate it into another language. Once translated, the content appears completely original and can be republished.

What Type of Content Do Content Scraping Bots Target?

Bots can scrape any kind of data published openly on the Internet, including text, images, HTML code, and CSS code, and pass it to their owners, who can then use it as they wish. Text obtained by content scraping bots can be republished on another website to steal the original site's ranking on search engine results pages and to deceive internet users. The following are typical targets of content scraping bots:

- Blogs with original, opinion-driven articles

- Comprehensive product reviews on e-commerce sites

- Newly published news articles

- Technical research articles

- New classified listings on job portals, real estate sites, and similar platforms

- Research publications containing financial information

- Product catalogs on e-commerce sites

- Seller information and selling prices for a particular product on a particular website

Can Content Scraping Bots Be Abused?

Some of the people who use content scraping bots may be cybercriminals. They can copy a website's HTML and CSS code to clone a company's legitimate website, creating fake sites that appear to belong to that company. The aim is to make the fake site look real so that users trust it. The criminals can then carry out phishing attacks, stealing the personal information of people who shop on the fake site.

7 Safe Scraping Techniques You Can Use to Scrape Content

Many tools and services for content scraping are readily available online. To become proficient at content scraping, you need real expertise in data scraping techniques; alternatively, you can hire a freelancer or an expert team. If you want to use automated web scraping tools and services, options include proxy services such as Lime Proxies, cURL, Wget, HTTrack, Import.io, and Node.js. Scrapers also use headless browsers such as PhantomJS, SlimerJS, and CasperJS.

- COPY-PASTING: Manual scraping means copying a website's content and pasting it into another file. This process is time-consuming and repetitive, so it is suitable only for small-scale projects. On the other hand, website defenses are geared toward automated scraping rather than manual scraping, so manual copying is very effective at getting past them. Despite this advantage, automated scraping is much faster and cheaper, so manual scraping is generally not preferred.

- HTML PARSING: HTML parsing, often performed with JavaScript, targets linear or nested HTML pages. It is a fast and robust method used for text extraction, screen scraping, and resource extraction.

- DOM PARSING: The DOM, short for Document Object Model, defines the structure, style, and content of HTML and XML documents. DOM parsers give you a detailed view of a web page's structure and let you retrieve the nodes that contain the information you need; to select those nodes when scraping, you can use a query language such as XPath. To parse a DOM that is generated dynamically, you can embed a full web browser such as Firefox.

- VERTICAL AGGREGATION: Vertical aggregation platforms are typically used only by companies with large-scale computing power to target specific industries (verticals), and some companies run them in the cloud. Normally, people have to build bots for particular verticals and scrape the web with them. Vertical aggregation platforms, by contrast, carry out scraping and monitoring on their own, without human intervention. The quality of the bots depends on the sector they target and the purpose they are built for.

- XPATH: XML Path Language (XPath) is a query language for XML documents. Because XML documents have a tree-like structure, XPath lets you navigate them easily and select nodes based on various parameters. As mentioned above, XPath can be combined with DOM parsing to scrape a website's pages.

- GOOGLE SHEETS: Google Sheets is one of the most popular tools among web scrapers. To scrape specific data from a web page, use the IMPORTXML(url, xpath_query) function. This method is suitable only when you need specific data or patterns from a single website. You can also use Google Sheets to check whether your own website is protected against scraping.

- TEXT PATTERN MATCHING: This method uses regular expressions to find matching text, as the UNIX grep command does, and is typically combined with popular programming languages such as Perl or Python.
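Three of the techniques above (HTML parsing, DOM parsing with XPath, and text pattern matching) can be sketched with Python's standard library. This is a minimal illustration under stated assumptions: SAMPLE_PAGE is a hypothetical, deliberately well-formed page rather than a fetched one, and the function names are made up for the example. html.parser streams through the tags, xml.etree.ElementTree builds a tree and queries it with the subset of XPath it supports, and re matches a text pattern much as grep would. Real pages are rarely well-formed XML, so a production scraper would typically use a more tolerant parser such as lxml.

```python
import re
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

# Hypothetical sample page, kept well-formed so ElementTree can parse it.
SAMPLE_PAGE = """<html><body>
<div class="product"><h2>Blue Mug</h2><span class="price">$12.50</span></div>
<div class="product"><h2>Red Mug</h2><span class="price">$9.99</span></div>
<p>Shipping from $4.00</p>
</body></html>"""


class HeadingCollector(HTMLParser):
    """HTML parsing: stream through the tags and collect the text of every <h2>."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False
        self.headings = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2 and data.strip():
            self.headings.append(data.strip())


def parse_headings(page):
    collector = HeadingCollector()
    collector.feed(page)
    collector.close()
    return collector.headings


def xpath_prices(page):
    """DOM parsing + XPath: build a tree, then select nodes with a path query."""
    root = ET.fromstring(page)
    # ElementTree supports a limited XPath subset, including [@attr='value'].
    return [span.text for span in root.findall(".//span[@class='price']")]


def grep_prices(page):
    """Text pattern matching: a regular expression, as grep would use."""
    return re.findall(r"\$\d+\.\d{2}", page)


if __name__ == "__main__":
    print(parse_headings(SAMPLE_PAGE))
    print(xpath_prices(SAMPLE_PAGE))
    print(grep_prices(SAMPLE_PAGE))
```

The contrast is the point of the design: the XPath query returns only the two prices inside span elements of class "price", while the regular expression also picks up the shipping price in the paragraph, because pattern matching ignores document structure. The same XPath expression, //span[@class='price'], is the kind of query you would pass as the second argument to IMPORTXML in Google Sheets.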

How to Easily Execute Content Scraping: Tips for Content Scraping Projects

To carry out content scraping smoothly, check whether an API exists, be kind to the website, vary your crawl pattern instead of repeating the same one, use CAPTCHA solving services, and scrape during off-peak hours.

1. You should check if an API exists

An API (application programming interface) hides the complexity of a website from data consumers, so you can obtain the data you need with a simple program. If a website offers an API, sending a query to it is enough to get the data, which you can then use for your own purposes.
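As a minimal sketch of querying an API instead of parsing pages, the example below builds a query URL and reads a JSON response with Python's standard library. Everything about the API itself is a hypothetical assumption: the endpoint API_BASE, the q and page parameters, and a response shaped like {"results": [{"title": ..., "url": ...}]}. A real API documents its own endpoints, parameters, authentication, and usage terms.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Hypothetical endpoint, for illustration only.
API_BASE = "https://api.example.com/v1/articles"


def build_query_url(base, **params):
    """Append URL-encoded query parameters to the API base URL."""
    return f"{base}?{urlencode(params)}"


def parse_articles(payload):
    """Extract (title, url) pairs from the assumed JSON response shape."""
    data = json.loads(payload)
    return [(item["title"], item["url"]) for item in data.get("results", [])]


def fetch_articles(query, page=1):
    """Send one search query to the (hypothetical) API and parse the reply."""
    url = build_query_url(API_BASE, q=query, page=page)
    request = Request(url, headers={"Accept": "application/json"})
    with urlopen(request, timeout=10) as response:
        return parse_articles(response.read().decode("utf-8"))
```

Keeping URL building and response parsing separate from the network call makes each piece easy to check on its own; fetch_articles("content scraping") would only work against an API that actually matches these assumptions.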

2. You should be kind to the website

Every request you make forces the target website to spend server resources on a response. The volume and frequency of your requests therefore affect its servers: the more requests you send, and the more often you send them, the more strain you put on the server and the more the experience of the site's real users suffers. To be kind to the website, try the following:

- If you can, scrape during off-peak hours, when server load is lower than at peak times.

- Limit the number of requests you send to a website.

- Spread your requests across multiple IP addresses.

- Add a delay between consecutive requests so the website's server is not overloaded.
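The request limit and the delay between consecutive requests can be combined in a small throttle. This is a minimal sketch, not a Scrape.do implementation: the PoliteThrottle class, its 2-second default delay, and its 100-request budget are hypothetical choices you would tune per site. The clock and sleep functions are injectable so the timing logic can be tested without actually waiting.

```python
import time


class PoliteThrottle:
    """Enforce a minimum gap between requests and a hard cap on total requests."""

    def __init__(self, min_delay=2.0, max_requests=100,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay        # seconds between consecutive requests
        self.max_requests = max_requests  # total request budget for this site
        self._clock = clock
        self._sleep = sleep
        self._last_request = None
        self.count = 0

    def wait(self):
        """Block until it is polite to send the next request."""
        if self.count >= self.max_requests:
            raise RuntimeError("request budget exhausted; stop scraping this site")
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            if elapsed < self.min_delay:
                # Sleep only for the part of the delay that has not yet passed.
                self._sleep(self.min_delay - elapsed)
        self._last_request = self._clock()
        self.count += 1
```

In a crawl loop you would call throttle.wait() immediately before each request; once the budget is used up, the RuntimeError stops the crawl instead of silently continuing to load the server.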

3. You should vary your crawl pattern instead of repeating the same one

Both human users and bots consume data from websites, but they differ in inherent ways. Humans are slow and their behavior is unpredictable, while bots are fast and behave predictably. Anti-scraping technologies rely on this difference to block web scraping tools. To avoid them, you can add random, human-like actions to your scrapers.
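One simple way to make a crawler less mechanical is to randomize both the order in which pages are visited and the pause between visits. The sketch below assumes a base delay of 2 seconds plus up to 1.5 seconds of random extra time; these numbers and the function names are hypothetical. Passing in a seeded random.Random makes the behavior reproducible for testing.

```python
import random


def jittered_delays(n, base=2.0, jitter=1.5, rng=None):
    """Return n delays in seconds, each base plus a random extra in [0, jitter]."""
    if rng is None:
        rng = random.Random()
    return [base + rng.uniform(0, jitter) for _ in range(n)]


def shuffled_crawl_order(urls, rng=None):
    """Return a shuffled copy of urls so pages are not visited in sequence."""
    if rng is None:
        rng = random.Random()
    order = list(urls)  # copy, so the caller's list is left untouched
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    pages = [f"https://example.com/page/{i}" for i in range(1, 6)]  # hypothetical
    rng = random.Random()
    for url, delay in zip(shuffled_crawl_order(pages, rng),
                          jittered_delays(len(pages), rng=rng)):
        # A real crawler would fetch url here, then time.sleep(delay).
        print(f"fetch {url}, then wait {delay:.2f}s")
```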

4. You should take advantage of CAPTCHA solving services

CAPTCHAs help websites determine whether a visitor is a real person or a bot, and companies use them to avoid being scraped. Human users can solve these puzzles easily, while bots generally cannot. If you run an advanced scraping process, especially one you provide as a service, CAPTCHA solving services can help you deal with CAPTCHA challenges.

5. You should scrape data during off-peak hours

Scraping during peak hours, when the website gets the most visits, puts an extremely heavy load on the target server and degrades the experience of the real users visiting the site. To avoid this, schedule your scraping jobs to run during off-peak hours.
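Before starting a job, a scheduler can check whether the target site is currently in its off-peak window and, if not, how long to wait. This is a minimal sketch assuming an off-peak window of 01:00 to 05:00 in the site's local time and a fixed UTC offset for that audience; both are hypothetical values you would replace with what the site's traffic actually looks like. Windows that wrap past midnight (for example 22:00 to 04:00) are handled too.

```python
from datetime import datetime, time, timedelta, timezone

# Assumed off-peak window in the target site's local time.
OFF_PEAK_START = time(1, 0)
OFF_PEAK_END = time(5, 0)


def in_off_peak(now, start=OFF_PEAK_START, end=OFF_PEAK_END):
    """True if the wall-clock time of now falls inside [start, end)."""
    t = now.time()
    if start <= end:
        return start <= t < end
    # The window wraps past midnight, e.g. 22:00-04:00.
    return t >= start or t < end


def seconds_until_off_peak(now, start=OFF_PEAK_START, end=OFF_PEAK_END):
    """Seconds to wait until the next off-peak window opens (0 if already inside)."""
    if in_off_peak(now, start, end):
        return 0.0
    opens = now.replace(hour=start.hour, minute=start.minute,
                        second=start.second, microsecond=0)
    if opens <= now:
        opens += timedelta(days=1)  # today's window has passed; use tomorrow's
    return (opens - now).total_seconds()


if __name__ == "__main__":
    site_tz = timezone(timedelta(hours=3))  # hypothetical UTC offset of the site
    now = datetime.now(site_tz)
    print(f"Start scraping in {seconds_until_off_peak(now):.0f} seconds")
```

A job runner could sleep for the returned number of seconds and then start the crawl, ideally combined with the request throttling described earlier.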

If you need a web scraping service, Scrape.do is the right choice for you. We offer high-quality services at competitive prices, with affordable plans. We can extract data from any website and turn it into a CSV file that you can easily import into your database or spreadsheet software. Our team is available to help whenever you need it. Contact us now!

Interested in content scraping? Our article on how journalists use it may also help you.