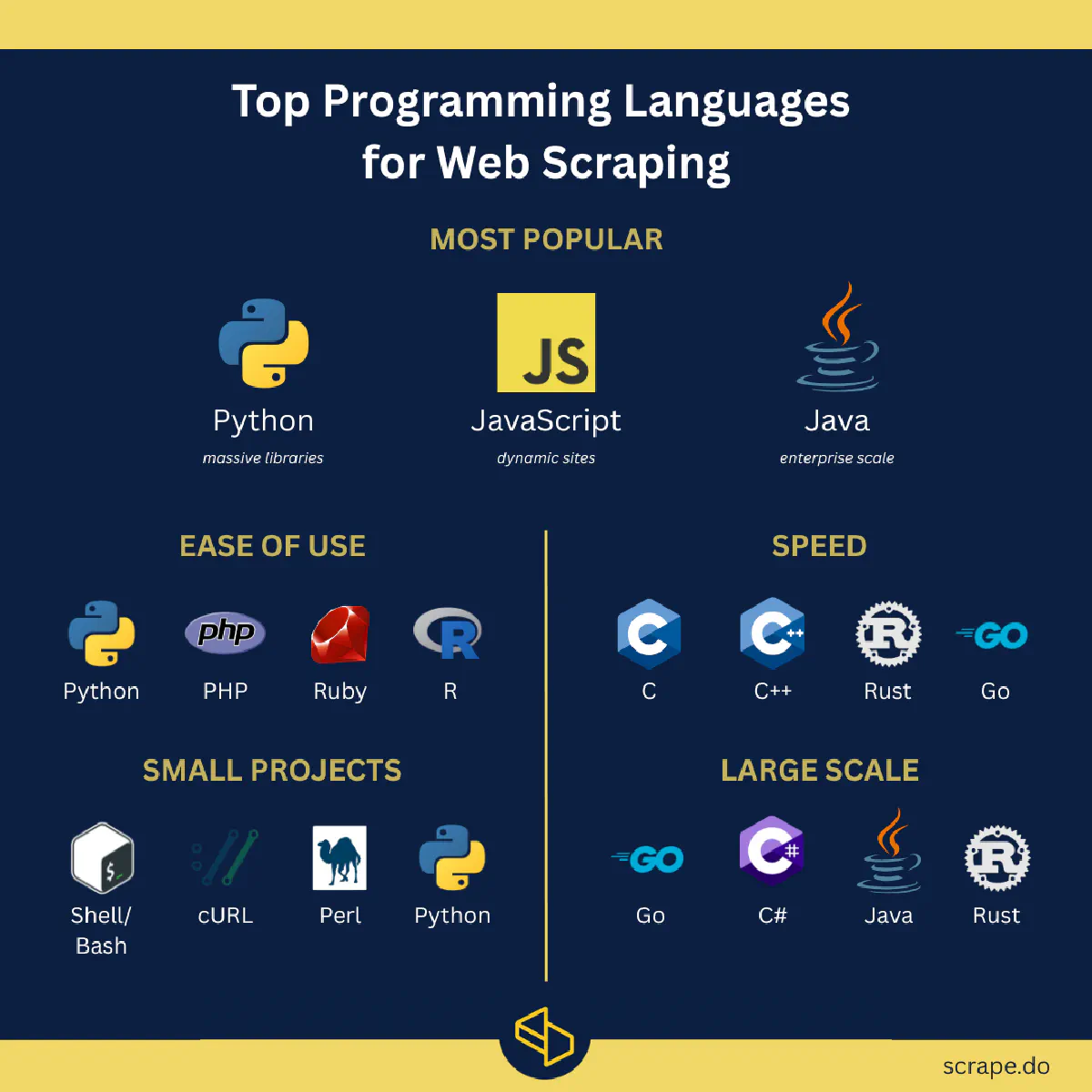

Ranking The 15 Best Languages for Web Scraping

Founder @ Scrape.do

Any language that can send an HTTP request can be used for web scraping.

But should it?

In this guide, we’re ranking the 15 most effective languages and tools for web scraping in 2026 based on performance, ecosystem, and real-world use cases.

Whether you’re crawling millions of pages or building quick one-off scripts, this breakdown will help you choose the right tool for the job.

1. Python

- Best for: Beginners, researchers, data teams building scalable pipelines

- Rich library ecosystem (Scrapy, Requests, BeautifulSoup, aiohttp, Playwright)

- Easy to prototype and scale with multiprocessing or asyncio

Python is the most popular language for web scraping, and for good reason.

Its simple syntax and huge ecosystem make it the first choice for everyone from students writing their first scraper to teams building distributed crawlers.

Its biggest strength is flexibility: you can build quick one-off scripts or large scraping pipelines with queues, retry logic, and export layers.
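To make the retry logic mentioned above a little more concrete, here is a minimal sketch that wraps any fetch function in exponential backoff. The names `fetch_with_retries`, `fetch`, and `base_delay` are hypothetical and not taken from any particular library; the sketch assumes `fetch` raises an exception when a request fails.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff on any exception.

    `fetch` is any callable that returns a response body or raises on failure
    (hypothetical; it could be a thin wrapper around requests.get).
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except Exception:
            # Out of attempts: let the last error propagate to the caller.
            if attempt == attempts - 1:
                raise
            # Wait base_delay, then 2x, then 4x, ... before the next try.
            sleep(base_delay * (2 ** attempt))
```

In a real pipeline you would probably catch only network-related exceptions and add some random jitter to the delay; both are left out here to keep the sketch short.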

While it isn’t the fastest language at the CPU level, it handles network-bound scraping extremely well, especially when paired with asynchronous libraries or horizontal scaling.

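The main downside of web scraping in Python is performance under heavy CPU load. In the standard CPython interpreter, the global interpreter lock (GIL) lets only one thread run Python bytecode at a time, so unless you use multiprocessing for CPU-bound work or async patterns for network-bound work, large-scale tasks can get bottlenecked.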
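As a rough sketch of what "async patterns" can look like for network-bound work, the snippet below caps the number of in-flight requests with a semaphore. `fetch_all` and `max_concurrency` are hypothetical names, and `fetch` is assumed to be any async callable you supply (for example, a coroutine built on an aiohttp session).

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrency=10):
    """Run fetch(url) for every URL, with at most max_concurrency in flight.

    `fetch` is any async callable (hypothetical; in practice it might wrap
    an aiohttp session's GET call).
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        # The semaphore caps how many requests run at the same time.
        async with semaphore:
            return await fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))
```

You would run it with something like `asyncio.run(fetch_all(urls, my_fetch))`, where `my_fetch` is your own coroutine.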

It also requires extra effort to handle JavaScript-heavy sites unless you integrate with headless browsers.

That said, Python has the richest scraping ecosystem:

- Scrapy for frameworks,

- aiohttp for async requests,

- Playwright or Selenium for headless automation,

- and BeautifulSoup or lxml for HTML parsing.
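To illustrate the HTML parsing step, here is a small sketch that collects every link on a page. It deliberately uses the standard library's `html.parser` instead of BeautifulSoup or lxml so it runs without extra installs; `LinkCollector` and `extract_links` are hypothetical names chosen for this example.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Return all href values found in <a> tags, in document order."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links
```

With BeautifulSoup or lxml the same job is usually a one-line selector query, which is a large part of why those libraries are popular.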

Here’s what a basic GET request looks like in Python:

import requests

url = "https://example.com"
response = requests.get(url)
print(response.text)

2. JavaScript / Node.js

- Best for: Browser-based scraping, single-page apps, full-stack JavaScript workflows

- First-class browser automation with Puppeteer and Playwright

- Great concurrency with non-blocking, event-driven architecture

If the browser is your playground, JavaScript is your best friend.

Node.js web scraping is especially powerful for scraping dynamic sites and single-page applications, where you need to render content, click elements, or wait for data to load.

Its event-driven model makes it ideal for running hundreds or even thousands of requests in parallel without blocking the thread; perfect for real-time dashboards, notifications, or streaming crawlers.

But scraping in JavaScript comes with its own set of trade-offs.

HTML parsing in Node isn’t as elegant as Python unless you’re using libraries like Cheerio, and managing memory under heavy load can be trickier. If you’re scraping large volumes without proper throttling, you’ll hit backpressure fast.
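Throttling itself is language-agnostic. The sketch below shows the idea in Python, like the first example in this guide, rather than in Node: it enforces a minimum gap between consecutive requests. `Throttle` and `min_interval` are hypothetical names, not part of any library.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous wait() call, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Calling `throttle.wait()` right before each request keeps a simple sequential scraper under a fixed request rate; concurrent scrapers usually combine this with a cap on in-flight requests.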

Still, Node’s integration with Puppeteer and Playwright gives you full control over headless Chrome sessions, letting you bypass JavaScript rendering challenges with ease.

Popular tools in the JavaScript ecosystem are:

- axios or node-fetch for HTTP requests

- Cheerio for parsing static HTML

- Puppeteer or Playwright for headless browser automation

- fast-xml-parser for working with feeds or legacy formats

Here’s what a basic GET request looks like in Node.js:

const axios = require("axios");

const url = "https://example.com";

axios.get(url).then((response) => {
  console.log(response.data);
});

3. Go (Golang)

- Best for: Distributed crawlers, performance-heavy backends, proxy-aware scraping APIs

- Super lightweight with excellent concurrency via goroutines

- Easy to compile and deploy as a single binary

Web scraping with Go is fast, efficient, and built for scale.

Its concurrency model (powered by goroutines and channels) makes it ideal for building high-volume crawlers or custom scraping services that need to hit millions of pages per day.

Since Go compiles into a single binary with no runtime dependencies, it’s a favorite for teams building internal tools, containerized scraping jobs, or microservices that need to be deployed globally.

The biggest drawback? Its scraping ecosystem is still catching up.

While libraries like Colly and GoQuery get the job done, they don’t offer the same flexibility or out-of-the-box features as, say, Python’s.

And Go isn’t great for scraping JavaScript-heavy sites unless you pair it with a remote browser or third-party rendering service.

Most popular Go tools for scraping are:

- Colly for crawling and HTTP requests

- GoQuery for jQuery-like DOM parsing

- Native concurrency for massive throughput

Here’s a basic GET request in Go:

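package main

import (
    "fmt"
    "io"
    "log"
    "net/http"
)

func main() {
    // Send the GET request and stop on network errors.
    resp, err := http.Get("https://example.com")
    if err != nil {
        log.Fatal(err)
    }
    defer resp.Body.Close()

    // io.ReadAll replaces the deprecated ioutil.ReadAll.
    body, err := io.ReadAll(resp.Body)
    if err != nil {
        log.Fatal(err)
    }
    fmt.Println(string(body))
}

4. Java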

- Best for: Enterprise scraping tools, long-running services, heavy multi-threaded jobs

- Strong performance with good garbage collection and JVM tuning

- Mature ecosystem: Jsoup, HtmlUnit, and Selenium for full-stack scraping

Java may not be trendy, but it’s rock solid.

It shines in enterprise environments where teams need stability, scalability, and long-term maintainability, especially when scraping is part of a bigger ETL or analytics pipeline.

Java’s multi-threading support and performance tuning options (via the JVM) make it a strong contender for large-scale scrapers that need to run reliably for months.

The downside?

Java is verbose.

You’ll write more code for the same task compared to Python or JavaScript. And while Jsoup is excellent for static HTML parsing, handling dynamic content means bringing in HtmlUnit, Selenium, or integrating with external tools.

Key libraries for Java web scraping are:

- Jsoup for HTML parsing

- HtmlUnit for headless browsing

- Selenium for browser automation

Here’s what a basic GET request looks like in Java:

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;

public class Main {
    public static void main(String[] args) throws Exception {
        Document doc = Jsoup.connect("https://example.com").get();
        System.out.println(doc.html());
    }
}

5. C# (.NET)

- Best for: Microsoft-heavy environments, desktop apps, scheduled scraping services

- Modern scraping stack with HtmlAgilityPack, AngleSharp, and Playwright for .NET

- High performance with async/await and seamless Windows integration

If your stack runs on Windows, web scraping with C# is one of the smoothest options available.

Its native async capabilities and strong HTTP client support make it perfect for automated scraping services, especially in corporate environments where Excel exports, scheduled jobs, or desktop tooling are involved.

Performance-wise, it’s comparable to Java. And with .NET Core, it runs just as well on Linux servers, opening it up to cloud-based deployments too.

However, the ecosystem is smaller than Python’s, and you’ll need to wire together a few different libraries.

But once set up, C# offers a solid foundation for scraping with modern features and reliable concurrency.

Popular libraries and tools in the C# scraping stack:

- HttpClient for async HTTP requests

- HtmlAgilityPack and AngleSharp for DOM parsing

- Playwright for .NET for headless browser scraping

Here’s how to make a basic GET request in C#:

using System;
using System.Net.Http;
using System.Threading.Tasks;

class Program {
    static async Task Main() {
        HttpClient client = new HttpClient();
        string html = await client.GetStringAsync("https://example.com");
        Console.WriteLine(html);
    }
}

6. PHP

- Best for: WordPress plugins, scheduled tasks on shared hosts, scraping inside web apps

- No-friction deployment on most servers with cURL, Guzzle, and Symfony DomCrawler

- Lightweight and great for periodic scraping jobs

PHP isn’t the first language you think of for scraping—but it’s already on the server.

For web apps and CMS platforms (especially WordPress or Laravel), PHP is often the easiest way to add scraping functionality without spinning up extra infrastructure.

You can run scraping scripts as scheduled jobs, artisan commands, or background processes inside your app.

The ecosystem is simple but capable: Guzzle for HTTP requests, Symfony DomCrawler for parsing, and ReactPHP or Swoole if you want to go async.

But let’s be honest.

PHP isn’t built for long-running jobs or complex headless scraping. If you’re doing high-volume, proxy-rotated scraping, you’ll quickly hit limits.

Still, for embedded features and server-side automations, PHP web scraping is often the most convenient solution already in place.

Top libraries and tools are:

- Guzzle for HTTP requests

- Symfony DomCrawler for HTML parsing

- Swoole or ReactPHP for async capabilities

Here’s a basic GET request using file_get_contents:

<?php
$html = file_get_contents("https://example.com");
echo $html;

Or using Guzzle:

<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;

$client = new Client();
$response = $client->get('https://example.com');
echo $response->getBody();

7. Ruby

- Best for: Rails developers, background jobs, quick integrations into existing apps

- Readable syntax and intuitive libraries like Nokogiri, Mechanize, and Ferrum

- Works well for small-to-medium data tasks or automation scripts

Ruby makes scraping feel like writing poetry.

Its clean syntax and developer-friendly libraries make it a joy to work with, especially for people already in the Rails ecosystem.

Whether you're scheduling jobs with Sidekiq or pulling data into your app via background workers, Ruby makes it fast to build and easy to maintain.

Ruby web scraping is a solid pick when it's part of a broader workflow, like importing events into a calendar app or syncing content across systems.

The trade-off is speed. Ruby is slower than Go or Java, and not ideal for CPU-heavy scraping jobs. For large-scale operations, performance and memory usage will be a limitation.

Still, for lean jobs or team tools that need clean data, Ruby’s developer experience is hard to beat.

Most popular tools are:

- Nokogiri for HTML/XML parsing

- Mechanize for automating browser-like behavior

- Ferrum for headless Chrome (no Selenium or WebDriver needed, though Chrome or Chromium must be installed)

Here’s what a basic GET request looks like in Ruby:

require 'net/http'
require 'uri'

uri = URI.parse("https://example.com")
response = Net::HTTP.get(uri)
puts response

8. Rust

- Best for: Long-running CLI tools, data pipelines, resource-constrained environments

- Memory-safe and high-performance with async runtimes like Tokio

- Tooling includes reqwest, scraper, and fantoccini for headless control

Rust is fast and dependable.

When you’re building a scraping tool that needs to run for months without leaks or crashes, web scraping with Rust shines.

Its strict type system, ownership model, and async support give you the power to write resilient, high-performance bots that behave like native tools.

And thanks to libraries like scraper (which mimics jQuery-like selectors), writing scrapers in Rust is more ergonomic than it ever was.

The main friction point is the learning curve and development speed. You’ll write more code, and setup isn’t as plug-and-play as Python or Node.

Top scraping libraries in Rust:

- reqwest for HTTP

- scraper for HTML parsing

- fantoccini for headless browser automation via WebDriver

Here’s a simple GET request in Rust:

use reqwest;

#[tokio::main]
async fn main() -> Result<(), Box<dyn std::error::Error>> {
    let body = reqwest::get("https://example.com").await?.text().await?;
    println!("{}", body);
    Ok(())
}

9. R

- Best for: Academics, analysts, data scientists who want scraping + analysis in one workflow

- Tight integration with packages like rvest, httr, and xml2

- Perfect for turning scraped data into graphs, reports, or dashboards

R is the language of analysis, and scraping is just the first step.

For data professionals already working in R, scraping with rvest or httr feels seamless. You can go from collecting data to plotting it with ggplot2 in the same script, with no context switching.

R isn’t built for large-scale crawling or complex scraping systems, but it handles modest, structured extraction tasks with elegance.

It can be used for simpler tasks like fetching COVID stats, aggregating government reports, or scraping structured datasets for modeling.

R web scraping lacks async support and isn’t great for working across thousands of URLs or pages. But in the right context, it’s incredibly productive.

Most used scraping tools and libraries in R are:

- rvest for scraping and DOM traversal

- httr for making requests

- xml2 for parsing HTML/XML documents

Here’s a basic GET request with rvest:

library(rvest)

url <- "https://example.com"
page <- read_html(url)
print(page)

10. C++

- Best for: Embedded tools, scrapers on edge devices, low-level performance-critical scraping

- Maximum control with libraries like libcurl, Gumbo, or Boost.Beast

- Unmatched speed, but higher development and maintenance costs

C++ gives you total control over memory, networking, and threading.

It’s the go-to for building custom scrapers that run on limited-resource devices or need to integrate with other native systems.

But this control comes at a cost.

Manual memory management, longer dev time, and fewer scraping-specific libraries are the biggest downsides. HTML parsing requires external tools like Gumbo or Boost, and anything beyond simple requests takes serious setup.

C++ web scraping is a rare case at the application layer, but when you need a tiny, fast, and purpose-built scraper, there’s nothing faster.

Top tools in the C++ scraping stack are:

- libcurl for HTTP

- Gumbo-parser for HTML

- Boost.Beast for modern C++ networking and HTTP support

Here’s a minimal GET request using libcurl:

#include <curl/curl.h>
#include <iostream>
#include <string>

// Called by libcurl for each chunk of the response body.
size_t write_callback(void* contents, size_t size, size_t nmemb, void* userp) {
    std::cout << std::string(static_cast<char*>(contents), size * nmemb);
    return size * nmemb;
}

int main() {
    CURL* curl = curl_easy_init();
    if (curl) {
        curl_easy_setopt(curl, CURLOPT_URL, "https://example.com");
        curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, write_callback);
        curl_easy_perform(curl);
        curl_easy_cleanup(curl);
    }
    return 0;
}

11. C

- Best for: Low-level network tools, custom proxy layers, DNS sniffers, or packet inspection

- Fastest execution and minimal footprint with libraries like libcurl

- Ideal for supporting scraping workflows, not leading them

C isn’t used to scrape websites; it’s used to build the tools that scraping depends on.

Its raw performance and tiny memory usage make it perfect for writing custom HTTP clients, proxy balancers, or even scraping sensors on embedded systems.

It’s also often used in systems that monitor or analyze traffic at the network layer.

That said, writing scrapers in C directly is *risky and time-consuming*. You’ll be managing memory manually, parsing HTML without guardrails, and debugging socket-level failures with minimal tooling.

But for the right use case, like writing a DNS-aware scraper that runs on a router or implementing an IP rotation proxy, C web scraping has no equal.

Typical libraries used are:

- libcurl for HTTP requests

- libxml2 or hand-rolled string parsing for content

- OS-level tools for file and socket handling

Here’s a basic GET request using libcurl:

#include <curl/curl.h>
#include <stdio.h>

size_t write_callback(void* contents, size_t size, size_t nmemb, void* userp) {
    fwrite(contents, size, nmemb, stdout);
    return size * nmemb;
}

int main() {
    CURL *curl = curl_easy_init();
    if (curl) {
        curl_easy_setopt(curl, CURLOPT_URL, "https://example.com");
        curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, write_callback);
        curl_easy_perform(curl);
        curl_easy_cleanup(curl);
    }
    return 0;
}

12. Perl

- Best for: Legacy scripts, heavy text processing, sysadmin-style scraping tasks

- Unmatched regex power and scripting convenience

- Still shines in one-off parsing jobs or internal pipelines

If you’re dealing with thousands of saved HTML files, messy server output, or custom feed formats, Perl can chew through it like nothing else.

It’s still used in many legacy systems, and many teams have internal tools that rely on it for quick parsing, cleanup, and extraction.

Where Perl really excels is when HTML parsing is secondary, and text processing is the main task. Its regex engine and string manipulation tools are still best-in-class.

But for modern, large-scale web scraping?

It’s mostly been replaced by Python, Node, and Go.

Still, with modules like Mojo::UserAgent and HTML::TreeBuilder, Perl web scraping can absolutely hold its own for internal tools or one-off jobs.

Top Perl tools are:

- LWP::UserAgent or Mojo::UserAgent for HTTP

- HTML::TreeBuilder or HTML::Parser for DOM traversal

- Perl’s native regex engine for post-processing

A basic GET request in Perl looks like this:

use LWP::Simple;

my $html = get("https://example.com");
print $html;

Or, using Mojo::UserAgent:

use Mojo::UserAgent;

my $ua = Mojo::UserAgent->new;
my $res = $ua->get("https://example.com")->result;
print $res->body if $res->is_success;

13. cURL (CLI Tool)

- Best for: Simple API calls, cron jobs, shell scripts, or quick debugging

- No setup needed, comes pre-installed on most systems

- Blazing fast, but limited to fetching raw content only

*If all you need is to send a request and save the response, cURL is undefeated.*

It's not a programming language, but it’s often the first scraping tool developers reach for, especially for API endpoints, HTML snapshots, or heartbeat checks.

It works everywhere, requires no setup, and handles HTTP headers, cookies, and even file uploads with ease.

But that’s where it ends.

You can’t parse HTML, click buttons, or do anything dynamic. You’d need to pipe the output to other tools (like grep, awk, or jq) to make it useful beyond raw capture.
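As one illustration of the piping idea, the sketch below is a tiny Python filter that reads HTML from standard input and pulls out the page title. It is written in Python only to match the first example in this guide; `extract_title` and `main` are hypothetical names, and the regex is a shortcut that assumes a simple, well-formed `<title>` tag.

```python
import re
import sys


def extract_title(html):
    """Return the text of the first <title> element, or None if absent."""
    match = re.search(r"<title[^>]*>(.*?)</title>", html, re.IGNORECASE | re.DOTALL)
    return match.group(1).strip() if match else None


def main(stream=sys.stdin):
    # Read the whole page from standard input, e.g. piped from curl.
    print(extract_title(stream.read()))
```

Saved to a file (say, a hypothetical `extract_title.py`) with a final `main()` call added at the bottom, it could sit at the end of a pipeline such as `curl -s https://example.com | python extract_title.py`.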

cURL web scraping often serves as the first step in a shell-based workflow or a quick test before deploying larger jobs.

Typical use cases:

- Bash scripts for daily scraping

- API uptime checks

- Downloading files or HTML for later parsing

Here’s a basic request with cURL:

curl https://example.com

Set a user agent and save the response to a file:

curl -A "Mozilla/5.0" -o page.html https://example.com

14. Shell / Bash (wget, grep, awk)

- Best for: Server-side tasks, cron jobs, scraping CLI-style HTML content

- Zero dependencies on Linux servers, great for automation

- Limited to static pages, so no JavaScript, no browser context

Shell scraping is the bare-metal version of data extraction.

For sysadmins and DevOps engineers, scraping with wget, grep, and awk is often enough.

If you just need to pull a status page, check uptime, or extract a value from static HTML, there’s no need for a full scraping framework.

It’s not elegant, and it definitely doesn’t scale, but Bash excels at simple, repeatable scraping tasks that run quietly in the background.

It’s also useful for chaining tools: scrape with wget, filter with grep, format with awk, and alert with mail all in a single script.

Major limitations to web scraping with Shell / Bash are:

❌ no JS execution,

❌ weak parsing control,

❌ and painful debugging.

But for monitoring or simple log-style scraping, Bash is still incredibly practical.

Most-used tools are:

- wget or curl for fetching

- grep, awk, sed for parsing

- cron for scheduling

As mentioned, Shell / Bash is great for tasks like uptime checks, so here's a command that fetches a status page and looks for an outage message:

wget -qO- https://status.example.com | grep "service outage"

And if the phrase is found, we trigger an alert:

if wget -qO- https://status.example.com | grep -q "service outage"; then
    echo "Outage detected!" | mail -s "Alert" [email protected]
fi

15. Dart

- Best for: Flutter developers, mobile/web apps that integrate scraping logic

- Modern async model with solid HTTP and HTML libraries

- Compiles to native code or JavaScript, flexible across platforms

Dart isn't a scraping-first language, but it fits beautifully in cross-platform apps.

If you're already using Flutter, Dart is a natural choice for scraping tasks baked into your app, like fetching news headlines, syncing product data or building an internal search engine that pulls live data from the web.

Its async/await syntax makes network operations clean and readable, and libraries like http, html, and puppeteer_dart cover most scraping needs.

On the other hand, Dart isn’t built for heavy scraping at scale.

Dart’s isolates are heavier than Go’s goroutines, and fewer tools exist for headless automation or large scraping pipelines.

But for lightweight scraping inside mobile or web apps? It's more than capable.

Here are the popular scraping tools in Dart:

- http for requests

- html for parsing DOM

- puppeteer_dart for headless Chrome control

Here’s a basic GET request in Dart:

import 'package:http/http.dart' as http;

void main() async {
  final response = await http.get(Uri.parse('https://example.com'));
  print(response.body);
}

Conclusion

Every language on this list can scrape the web, but each one has its strengths, limitations, and best-fit scenarios.

- Need to scrape fast and scale horizontally? Use Go or Node.js for high-throughput crawlers with efficient concurrency.

- Need deep parsing and data analysis? Python or R gives you the ecosystem and flexibility to go from raw HTML to clean insights.

- Working inside a mobile or desktop app? Dart or C# integrates scraping directly into your existing stack.

- Scraping from cron jobs or shell scripts? cURL, Bash, or PHP keeps things lean and server-friendly.

That said, one thing never changes: you’ll eventually face anti-bot defenses, rate limits, TLS fingerprinting, or CAPTCHA walls.

That’s where Scrape.do steps in.

We work with any programming language and help you bypass:

- Complex headers and TLS fingerprinting

- IP bans and geo-restrictions

- JavaScript rendering and CAPTCHA

- WAFs like Cloudflare, Akamai, and more

Build in whatever language you love. We’ll make sure your requests go through.
