4 Ways Journalists Can Use Web Scraping | Scrape.do

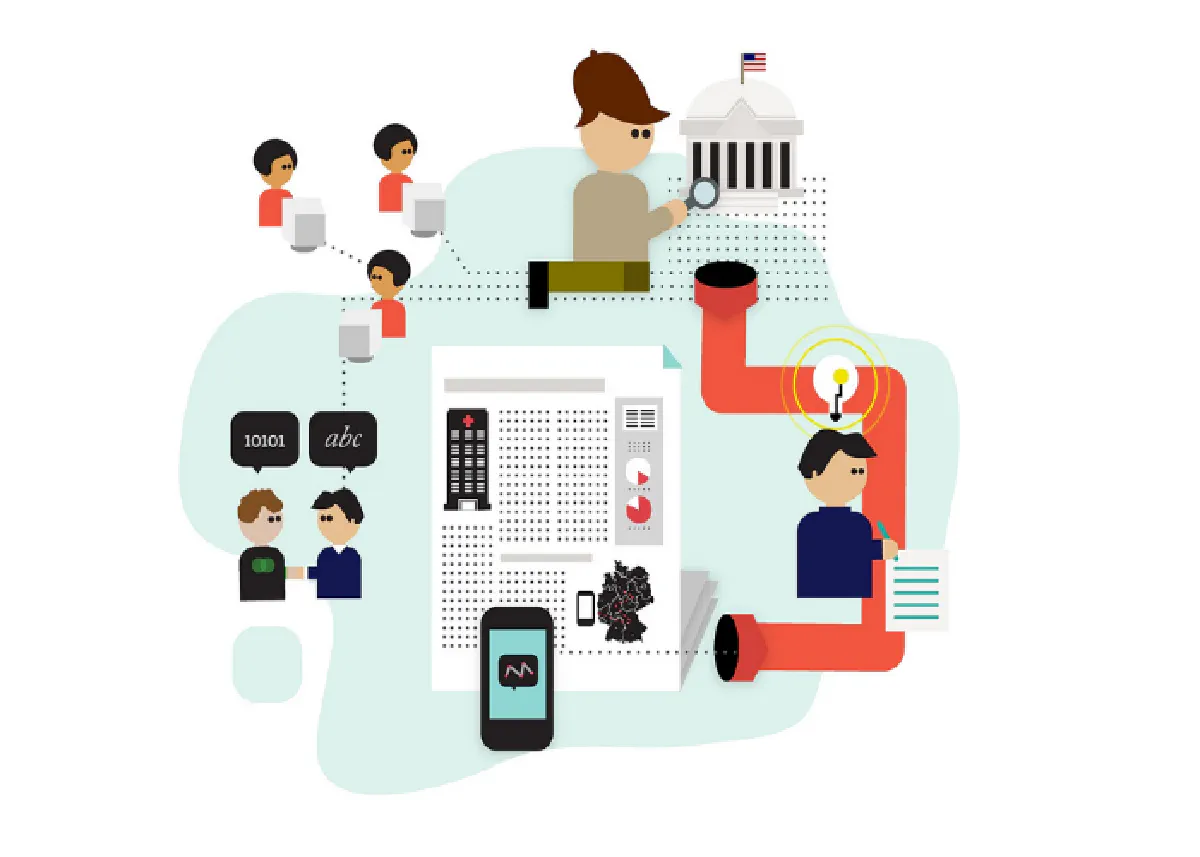

For a journalist, few things matter more than getting accurate, up-to-date information. There are many ways to get it, but not all of them are equally reliable. One of the most popular methods in today’s digital world is web scraping. Used correctly, web scraping lets you gather the information you need quickly, efficiently, and at low cost. In this article, we will talk about 4 ways journalists can use web scraping to improve their content.

The Scrape.do team is here for you! We guarantee the satisfaction of our valued users with our expert staff, and we strive to provide 24/7 service with an average response time of 10 minutes. For detailed information, you can review our website and contact our expert staff through our communication channels. Now, let’s get started!

What is Web Scraping?

Web scraping is one of the most indispensable data collection methods in today’s digital world. It means extracting data from one or more websites automatically, and legally, using various programs, software, or browser extensions. The purpose of scraping may vary; common examples are price monitoring, sentiment analysis, and market research. Once scraped, the data is extracted and structured according to that purpose.

A Concept You Need to Know: Data Journalism

Before we get to the 4 methods, let’s talk about “data journalism”, an important concept to know. Data journalism involves interpreting the information found in large datasets. It is therefore a discipline that requires journalists to understand what the data means in real life and to convey that to their readers. Data journalists must be able to compile and make sense of large amounts of data, describe it with graphs, tables, pictures, and words, and report their findings.

In the past, data journalists would spend weeks, maybe even months, compiling statistics and information one by one from disparate sources. Today, they can do this automatically in a few hours, maybe even minutes, using technologies such as web scraping.

Method One: Scraping Datasets

The reliability of the data they collect is of the utmost importance for journalists. As we mentioned, collecting data manually can be time-consuming and costly. In such cases, web scraping lets you collect the same data much faster and more cost-effectively. You can use web scraping in several ways to collect datasets. One of them is scraping data from government websites, which often host datasets too large to sort through manually.

Another way is to scrape scientific articles from academic journals, which are especially useful for stories on specialized fields. As we keep emphasizing, the most important thing for a journalist is accurate, up-to-date information, and academic articles are great sources of it.
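To make the government-dataset case a little more concrete, here is a minimal sketch of turning an HTML table into rows you can work with. It uses only Python's standard library. The sample page, the column names, and the helper names `TableParser` and `table_to_records` are our own assumptions for illustration; a real page would first have to be downloaded and will usually be messier.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every table cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows, each a list of cell strings
        self._row = None    # row currently being read, or None
        self._cell = None   # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def table_to_records(html):
    """Use the first row as field names and return the rest as dicts."""
    parser = TableParser()
    parser.feed(html)
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


# Hypothetical sample standing in for a downloaded statistics page.
SAMPLE_HTML = """
<table>
  <tr><th>Region</th><th>Year</th><th>Unemployment rate</th></tr>
  <tr><td>North</td><td>2022</td><td>5.1</td></tr>
  <tr><td>South</td><td>2022</td><td>7.4</td></tr>
</table>
"""

records = table_to_records(SAMPLE_HTML)
for record in records:
    print(record)
```

Each record is a plain dictionary keyed by the table's header row, so it can be written to a spreadsheet or a database without further parsing.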

Method Two: Scraping Press Releases

Press releases are one of the great sources of information for journalists. They contain a lot of data about the story a journalist is researching, from sources and contact information to the background of that story. With web scraping, going through them takes far less time than you might think, because press releases are sorted automatically rather than manually. This automatic sorting should not be taken lightly: it saves an incredible amount of time.

One way to use web scraping on press releases is a web scraper that aggregates all the press releases from a website and saves them into some kind of database. If you have enough technical knowledge, you can build such a scraper yourself; if you do not, you can either use an existing web scraper or get support from a programmer who can build one for you. If you have some technical knowledge but not enough for such a scraper, or if you would rather not build one, there is another way: set up a scraper that collects data from press releases based on specified keywords. This method is ideal if you are researching a particular subject.
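As a rough illustration of the "aggregate and save to a database" idea, the sketch below pulls press-release links out of a page and stores them in SQLite, skipping duplicates. Everything specific here is an assumption made for the example: the `example.com` address, the `class="press-release"` marker on the links, and the helper names `LinkParser` and `save_releases`. A real newsroom page will need its own selection rule.

```python
import sqlite3
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Collect (url, title) pairs for links marked as press releases."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None   # absolute URL of the link being read, or None
        self._text = []     # text fragments of that link

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # Assumption: press-release links carry class="press-release".
        if tag == "a" and attrs.get("class") == "press-release" and attrs.get("href"):
            self._href = urljoin(self.base_url, attrs["href"])
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def save_releases(conn, releases):
    """Store releases; the URL is the key, so repeats are ignored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS releases (url TEXT PRIMARY KEY, title TEXT)"
    )
    conn.executemany(
        "INSERT OR IGNORE INTO releases (url, title) VALUES (?, ?)", releases
    )
    conn.commit()


# Hypothetical sample standing in for a downloaded newsroom page.
SAMPLE_HTML = """
<ul>
  <li><a class="press-release" href="/press/1">Company opens new plant</a></li>
  <li><a class="press-release" href="/press/2">Quarterly results announced</a></li>
  <li><a class="press-release" href="/press/1">Company opens new plant</a></li>
  <li><a href="/about">About us</a></li>
</ul>
"""

parser = LinkParser("https://example.com/newsroom")
parser.feed(SAMPLE_HTML)

conn = sqlite3.connect(":memory:")  # use a file path to keep the data
save_releases(conn, parser.links)
stored = conn.execute("SELECT url, title FROM releases ORDER BY url").fetchall()
print(stored)
```

Keying the table on the URL means the scraper can be run again later without piling up copies of releases it has already seen.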

Method Three: News Sites as Great Sources of Information

News sites on the web are another enormous source of information and data for journalists. They usually publish news on many topics around the clock. You can quickly access breaking news, background information, and details about the subject you are researching, and collect the data you need from such sites. Just as with press release websites, sorting through news sites with web scraping is automatic and saves more time than you might think.

One way to scrape data for your research from news sites is to create a scraper, or install an existing one, that collects all the headlines on a website and records them in some kind of database, as we mentioned earlier. Another way is a scraper that collects data based on keywords. This second method will be more useful if you are researching news about a certain subject in a certain field.
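The keyword-based approach can be sketched in a few lines. The example below assumes the headlines have already been collected into a list; the sample headlines, the keywords, and the function names `matches_keywords` and `filter_headlines` are made up for illustration. It matches whole words only and ignores case, which is one reasonable choice among several.

```python
import re


def matches_keywords(text, keywords):
    """Return True if any keyword appears in text as a whole word, ignoring case."""
    return any(
        re.search(r"\b" + re.escape(keyword) + r"\b", text, flags=re.IGNORECASE)
        for keyword in keywords
    )


def filter_headlines(headlines, keywords):
    """Keep only the headlines that mention at least one keyword."""
    return [h for h in headlines if matches_keywords(h, keywords)]


# Hypothetical headlines standing in for scraped data.
headlines = [
    "City council approves new housing budget",
    "Local team wins championship",
    "Housing prices rise for third month",
    "Budgeting tips for students",
]

relevant = filter_headlines(headlines, ["housing", "budget"])
for headline in relevant:
    print(headline)
```

Because of the whole-word rule, "Budgeting tips for students" is not matched by the keyword "budget". If you want such variants included, list them as extra keywords or loosen the pattern.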

The Last and Hardest Method: Creating Your Own Data

Sometimes things are not that easy for journalists. Under some conditions, they may not be able to find the data they need on the internet, and they may need to create their own. One of the ways you can generate your own data as a journalist is, of course, again web scraping. It will not be as simple as the other three methods we have covered, but it is a method that will most likely help your work.

One way for journalists to generate their own data is to scrape product websites. This is especially helpful if your research needs data from certain sectors. Another, and perhaps the most effective, way is to scrape social media websites. Social media sites are where you can scrape a great deal of data, from material for sentiment analysis to current news, which makes them the most convenient source and also the most difficult one. People tend to be more comfortable on social media than they normally are, and they openly express their opinions and views. This inevitably makes social media sites a very comprehensive data source.

After legally scraping the data you need from the web, there is another very important thing to do: structure the data you have collected. Unstructured data doesn’t mean much on its own. As defined above, data journalism does not present the dug-up data directly to its readers; it extracts, structures, interprets, and conveys that data. In other words, it does not present raw data, because raw data is meaningless to readers.
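Here is a small sketch of what "structuring" can look like in practice: raw scraped strings are turned into typed records, which are then summarized. The field names, the sample rows, and the helpers `PriceRecord`, `parse_record`, and `average_price_by_product` are assumptions for this example, and the price parsing assumes US-style numbers such as `$1,250.00`.

```python
from dataclasses import dataclass
from datetime import date, datetime
from statistics import mean


@dataclass
class PriceRecord:
    product: str
    price: float
    seen_on: date


def parse_record(raw):
    """Convert one raw scraped row (all strings) into a typed record."""
    # Assumption: prices look like "$1,250.00" (US-style formatting).
    price_text = raw["price"].replace("$", "").replace(",", "").strip()
    return PriceRecord(
        product=raw["product"].strip(),
        price=float(price_text),
        seen_on=datetime.strptime(raw["date"].strip(), "%Y-%m-%d").date(),
    )


def average_price_by_product(records):
    """Group records by product and average their prices."""
    grouped = {}
    for record in records:
        grouped.setdefault(record.product, []).append(record.price)
    return {product: round(mean(prices), 2) for product, prices in grouped.items()}


# Hypothetical raw rows, as they might come out of a scraper.
raw_rows = [
    {"product": " Bread ", "price": "$2.50", "date": "2023-01-01"},
    {"product": "Bread", "price": "$3.00", "date": "2023-02-01"},
    {"product": "Milk", "price": "$1.20", "date": "2023-01-01"},
]

records = [parse_record(row) for row in raw_rows]
averages = average_price_by_product(records)
print(averages)
```

Once the values are real numbers and dates instead of strings, interpreting them (averages, trends over time, charts) becomes straightforward.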

Scrape.do has long experience in the sector, and its expert team is waiting to serve you, our valued users! Moreover, thanks to the geo-targeting feature, you can target any country you need before you even start browsing the web, so you will be where you want to be. For detailed information, you can review our website, and for answers to your questions about our services, you can contact our expert staff through our communication channels.