Getting Started

Scrape.do provides unblocked access to public web data at scale by:

- Avoiding all anti-bot, WAF, and CAPTCHA through custom bypass solutions.

- Rotating 110M+ datacenter, residential, and mobile proxies in 150 countries.

- Seamlessly rendering and interacting web pages with its managed headless browser.

After signing up for Scrape.do and generating your API key, test your setup with a basic request:

curl --location --request GET 'https://api.scrape.do/?token=YOUR_TOKEN&url=https://httpbin.co/anything'import requests

import urllib.parse

token = "YOUR_TOKEN"

targetUrl = urllib.parse.quote("https://httpbin.co/anything")

url = "http://api.scrape.do/?token={}&url={}".format(token, targetUrl)

response = requests.request("GET", url)

print(response.text)const axios = require('axios');

const token = "YOUR_TOKEN";

const targetUrl = encodeURIComponent("https://httpbin.co/anything");

const config = {

'method': 'GET',

'url': `https://api.scrape.do/?token=${token}&url=${targetUrl}`,

'headers': {}

};

axios(config)

.then(function (response) {

console.log(response.data);

})

.catch(function (error) {

console.log(error);

});package main

import (

"fmt"

"io/ioutil"

"net/http"

"net/url"

)

func main() {

token := "YOUR_TOKEN"

encoded_url := url.QueryEscape("https://httpbin.co/anything")

url := fmt.Sprintf("https://api.scrape.do/?token=%s&url=%s", token, encoded_url)

method := "GET"

client := &http.Client{}

req, err := http.NewRequest(method, url, nil)

if err != nil {

fmt.Println(err)

return

}

res, err := client.Do(req)

if err != nil {

fmt.Println(err)

return

}

defer res.Body.Close()

body, err := ioutil.ReadAll(res.Body)

if err != nil {

fmt.Println(err)

return

}

fmt.Println(string(body))

}require "uri"

require "net/http"

require 'cgi'

str = CGI.escape "https://httpbin.co/anything"

url = URI("https://api.scrape.do/?url=" + str + "&token=YOUR_TOKEN")

https = Net::HTTP.new(url.host, url.port)

https.use_ssl = true

request = Net::HTTP::Get.new(url)

response = https.request(request)

puts response.read_bodyOkHttpClient client = new OkHttpClient().newBuilder()

.build();

MediaType mediaType = MediaType.parse("text/plain");

RequestBody body = RequestBody.create(mediaType, "");

String encoded_url = URLEncoder.encode("https://httpbin.co/anything", "UTF-8");

Request request = new Request.Builder()

.url("https://api.scrape.do/?token=YOUR_TOKEN&url=" + encoded_url +"")

.method("GET", body)

.build();

Response response = client.newCall(request).execute();string token = "YOUR_TOKEN";

string url = WebUtility.UrlEncode("https://httpbin.co/anything");

var client = new HttpClient();

var requestURL = $"https://api.scrape.do/?token={token}&url={url}";

var request = new HttpRequestMessage(HttpMethod.Get, requestURL);

var response = client.SendAsync(request).Result;

var content = response.Content.ReadAsStringAsync().Result;

Console.WriteLine(content);<?php

$curl = curl_init();

curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);

curl_setopt($curl, CURLOPT_HEADER, false);

$data = [

"url" => "https://httpbin.co/anything",

"token" => "YOUR_TOKEN",

];

curl_setopt($curl, CURLOPT_CUSTOMREQUEST, 'GET');

curl_setopt($curl, CURLOPT_URL, "https://api.scrape.do/?".http_build_query($data));

curl_setopt($curl, CURLOPT_HTTPHEADER, array(

"Accept: */*",

));

$response = curl_exec($curl);

curl_close($curl);

echo $response;

?>Generate API Token

All your requests are authorized with your account token, which is automatically generated when you first sign up.

Your token is located in your dashboard here:

If you haven't signed up yet, create a free-forever account here.

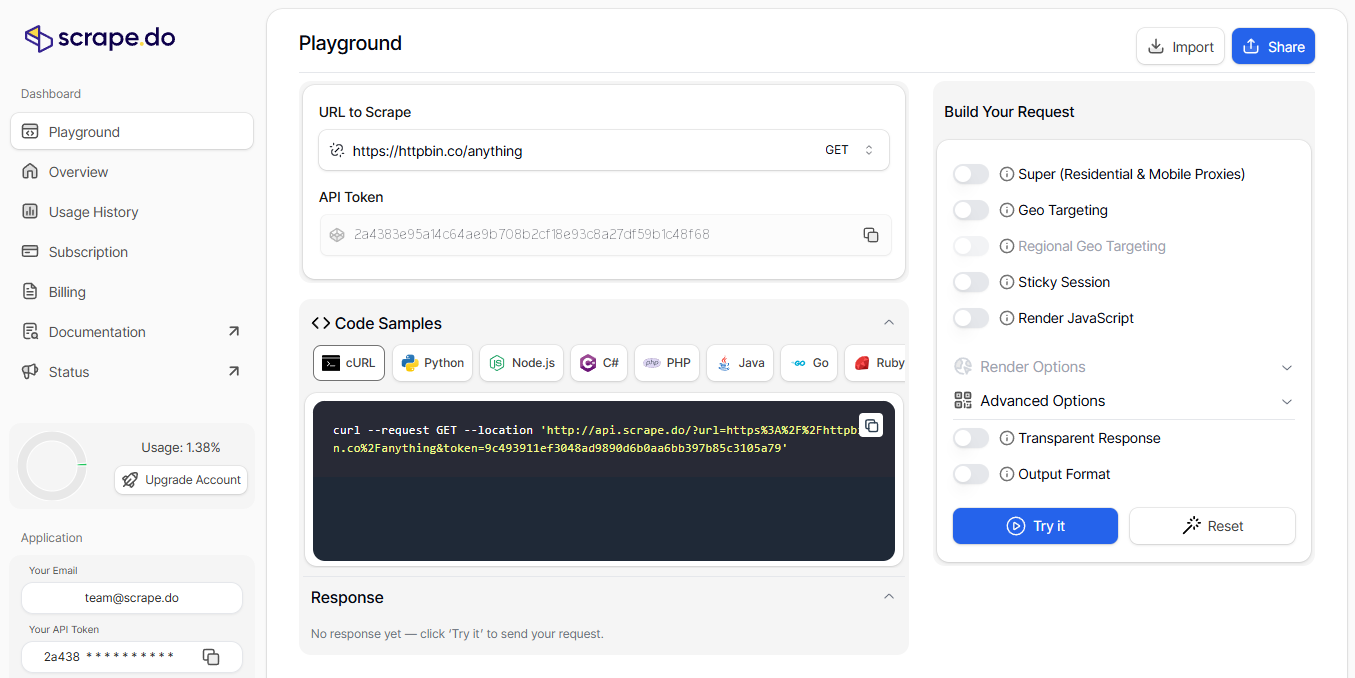

API Playground

The API Playground is your interactive testing environment where you can experiment with Scrape.do parameters without writing code. Use it to:

- Test different parameter combinations in real-time

- Generate code snippets in multiple programming languages (Python, Node.js, PHP, cURL, etc.)

- Preview API responses before implementing in your application

- Learn how parameters affect request behavior

Access the playground from your dashboard to build and refine your scraping requests with instant feedback.

Encode Your Target URL

Pass the target website URL you want to scrape using the url parameter.

When using API mode, you must URL-encode the parameter to prevent it from being misinterpreted as multiple query parameters (supported protocols: HTTP and HTTPS).

sudo apt-get install gridsite-clients

urlencode "YOUR_URL"import urllib.parse

encoded_url = urllib.parse.quote("YOUR_URL")let encoded_url = encodeURIComponent("YOUR_URL")package main

import (

"fmt"

"net/url"

)

func main() {

encoded_url := url.QueryEscape("YOUR_URL")

fmt.Println(string(encoded_url))

}require 'cgi'

encoded_url = CGI.escape strString encoded_url = URLEncoder.encode("YOUR_URL", "UTF-8");var encoded_url = System.Web.HttpUtility.UrlEncode("YOUR_URL")<?php

$url_encoded = urlencode("YOUR_URL");

?>No need to encode the URL if you use Proxy Mode.

API Parameters Overview

You can view all the parameters of Scrape.do from the table below and have an overview of all of them quickly.

| Parameter | Type | Default | Description | Details |

|---|---|---|---|---|

| token* | string | The token to use for authentication. | more | |

| url* | string | Target web page URL. | more | |

| super | bool | false | Use Residential & Mobile Proxy Networks | more |

| geoCode | string | Choose the right country for your target web page | more | |

| regionalGeoCode | string | Choose continent for your target web page | more | |

| sessionId | int | Use the same IP address continuously with a session | more | |

| customHeaders | bool | false | Handle all request headers for the target web page | more |

| extraHeaders | bool | false | Use it to change header values or add new headers over the ones we have added | more |

| forwardHeaders | bool | false | Forward your own headers to the target website | more |

| setCookies | string | Set cookies for the target web page | more | |

| disableRedirection | bool | false | Disable request redirection for your use-case | more |

| callback | string | Get results via a webhook without waiting for your requests | more | |

| timeout | int | 60000 | Set maximum timeout for your requests | more |

| retryTimeout | int | 15000 | Set maximum timeout for retry mechanism | more |

| disableRetry | bool | false | Disable retry mechanism for your use-case | more |

| device | string | desktop | Specify the device type (desktop, mobile, tablet) | more |

| render | bool | false | Use a headless browser to render JavaScript and wait for content to load | more |

| waitUntil | string | domcontentloaded | Control when the browser considers the page loaded | more |

| customWait | int | 0 | Set the browser wait time on the target web page after content loaded | more |

| waitSelector | string | CSS selector to wait for in the target web page | more | |

| width | int | 1920 | Browser viewport width in pixels | more |

| height | int | 1080 | Browser viewport height in pixels | more |

| blockResources | bool | true | Block CSS, images, and fonts on your target web page | more |

| screenShot | bool | false | Return a screenshot from your target web page | more |

| fullScreenShot | bool | false | Return a full page screenshot from your target web page | more |

| particularScreenShot | string | Return a screenshot of a particular area from your target web page | more | |

| playWithBrowser | string | Simulate browser actions like click, scroll, execute js, etc. | more | |

| output | string | raw | Get the output in raw or markdown format | more |

| transparentResponse | bool | false | Return pure response from target web page without Scrape.do processing | more |

| returnJSON | bool | false | Returns network requests with content as a property string | more |

| showFrames | bool | false | Returns all iframe content from the target webpage (requires render=true and returnJSON=true) | more |

| showWebsocketRequests | bool | false | Display websocket requests (requires render=true and returnJSON=true) | more |

| pureCookies | bool | false | Returns the original Set-Cookie headers from the target website | more |

How Does Scrape.do Work?

Modern websites use sophisticated anti-bot systems (Cloudflare, PerimeterX, DataDome, Akamai) that fingerprint TLS handshakes, validate HTTP headers, and blacklist datacenter IPs to block automated traffic.

Scrape.do solves this by handling all anti-bot evasion on your behalf.

Technically, we act as an intelligent proxy layer between your application and target websites. Every request is intercepted, upgraded to mimic legitimate browser behavior at multiple layers (TLS, HTTP, JavaScript), routed through our residential/mobile proxy network, and delivered as if from a real user. Your request goes through our infrastructure which:

-

Routes through rotating proxies — Your request is forwarded through our pool of 110M+ datacenter, residential, and mobile IPs across 150 countries, automatically rotating to avoid rate limits and IP bans.

-

Mimics real browser behavior — We manipulate TLS fingerprints and HTTP headers to match legitimate browser traffic, making your requests indistinguishable from organic users to bypass WAFs and anti-bot systems.

-

Renders JavaScript if needed — Setting

render=truespins up a headless browser (Chromium) to execute JavaScript and load dynamic content, essential for modern SPAs built with React, Vue, or Angular. -

Handles CAPTCHAs automatically — When target sites present CAPTCHA challenges (reCAPTCHA, hCaptcha, etc.), our system detects and solves them transparently without your intervention.

-

Retries intelligently — If a request fails due to temporary issues (502/503, timeouts, rate limits), we automatically retry with a different IP until success or timeout.

-

Returns clean data — You receive the raw HTML, JSON, or any content type the target website returns. API credits are only consumed on successful requests (2xx status codes).

For asynchronous processing of long-running jobs, use the callback parameter to receive results via webhook instead of keeping connections open.